Neutral Networks of Real-World Programs and their Application to Automated Software Evolution

The existing software development ecosystem is the product of evolutionary forces, and consequently real-world software is amenable to improvement through automated evolutionary techniques. This dissertation presents empirical evidence that software is inherently robust to small randomized program transformations, or mutations. Simple and general mutation operations are demonstrated that can be applied to software source code, compiled assembler code, or directly to binary executables. These mutations often generate variants of working programs that differ significantly from the original, yet remain fully functional. Applying successive mutations to the same software program uncovers large neutral networks of fully functional variants of real-world software projects.

These properties of mutational robustness and the corresponding neutral networks have been studied extensively in biology and are believed to be related to the capacity for unsupervised evolution and adaptation. As in biological systems, mutational robustness and neutral networks in software systems enable automated evolution.

The dissertation presents several applications that leverage software neutral networks to automate common software development and maintenance tasks. Neutral networks are explored to generate diverse implementations of software for improving runtime security and for proactively repairing latent bugs. Next, a technique is introduced for automatically repairing bugs in the assembler and executables compiled from off-the-shelf software. As a demonstration, a proprietary executable is manipulated to patch security vulnerabilities without access to source code or any aid from the software vendor. Finally, software neutral networks are leveraged to optimize complex nonfunctional runtime properties. This optimization technique is used to reduce the energy consumption of the popular PARSEC benchmark applications by 20% as compared to the best available public domain compiler optimizations.

The applications presented herein apply evolutionary computation techniques to existing software using common software engineering tools. By enabling evolutionary techniques within the existing software development toolchain, this work is more likely to be of practical benefit to the developers and maintainers of real-world software systems.

To my daughter Louisa Jaye, my own personal variant.

First and foremost, I would like to thank my advisor Stephanie Forrest. It has been a pleasure to work with someone whose intuition about what is important and interesting aligns so well with my own. Dr. Forrest’s insightful questions led to many of the most fruitful branches of this dissertation.

I am lucky to have worked so closely with Westley Weimer and to have benefited from his dedication to education, technical and domain expertise, and willingness to help with all aspects of graduate school, from questions of collaboration and presentation to the practical aspects of finding a job after graduation. Many thanks to Jed Crandall and Melanie Moses for consistently providing advice and guidance, and for serving on my dissertation committee.

Many generous researchers contributed to this dissertation through review and discussion of the ideas and experiments presented herein. I would especially like to thank the following. Mark Harman for discussion of fitness functions and search based software engineering. Jeff Offutt for generously meeting with me to discuss mutation testing. Andreas Wagner, Lauren Ancel Meyers, Peter Schuster and Joanna Masel for providing a biological perspective of the ideas and results presented herein. William B. Langdon and Lee Spector for reviewing mutational robustness from the point of view of evolutionary computation. Benoit Baudry for discussion of neutral variants of software.

In addition I’d like to thank my colleagues in the Adaptive Computation Lab: George Bezerra, Ben Edwards, Stephen Harding, Drew Levin, Vu Nguyen and George Stelle. It was a pleasure discussing, and often disagreeing, with you about natural and computational systems. Your ideas and critiques helped to flesh out many of the better ideas in this work and to round off many of its rougher edges.

Finally, this work would not have been possible without the inexhaustible support of my partner Christine. Thank you most of all.

Table of Contents

- 1. Introduction

- 2. Background and Literature Review

- 3. Software Representation, Mutation, and Neutral Networks

- 4. Application: Program Diversity

- 5. Application: Assembler- and Binary-Level Program Repair

- 6. Application: Patching Closed Source Executables

- 7. Application: Optimizing Nonfunctional Program Properties

- 8. Future Directions and Conclusion

- 9. Software Tools

- 10. Data Sets

1 Introduction

We present empirical studies of the effects of small randomized transformations, or mutations, on a variety of real-world software. We find that software functionality is inherently robust to mutation. By successively applying mutations we explore large neutral networks of fully functional variants of existing software projects. We leverage software mutational robustness and the resulting neutral networks to improve software through evolutionary processes of stochastic modification and fitness evaluation that mimic natural selection. These methods are implemented using familiar tools from existing software development toolchains, and yield several techniques for software maintenance and improvement which are practical, widely applicable, and easily integrated into existing software development environments.

We demonstrate multiple applications of evolutionary techniques to the improvement of real-world software, including automated techniques to repair bugs in off-the-shelf software and patch exploits in closed source binaries, techniques to generate diverse implementations of a software specification, and methods to optimize complex runtime properties of software. By combining the techniques of software engineering and evolutionary computation this dissertation advances a shared research objective of both fields: automating software development.

We propose that the existing software development ecosystem is the product of evolutionary forces, and is thus amenable to improvement through automated evolutionary techniques. We present empirical evidence of mutational robustness and neutral networks in software—both of which are hallmarks of evolution previously only studied in biological systems. We demonstrate multiple applications of evolutionary techniques for software improvement. The remainder of this section motivates the work, highlights the main empirical results and practical applications, outlines the organization of the remainder of the document, and provides instructions for reproducing the experiments presented herein.

Motivation: Over the last fifty years the production and maintenance of software has emerged as an important global industry, consuming the efforts of 1.3 million software developers in the United States alone in 2008 [26]. This is projected to increase by 21%, or to over 1.5 million by 2018 [26]. As it stands now, software development is difficult, expensive, and error prone. Software bugs cost as much as $312 billion per year [21], patching a single security vulnerability can cost millions of dollars [175], and while data centers are estimated to have consumed over 1% of total global electricity usage in 2010 [100], no existing mainstream compilers offer optimizations designed specifically to reduce energy consumption.

Empirical Results: We find that existing software projects, when manipulated using common software engineering tools, exhibit properties such as mutational robustness and large neutral networks which have previously been studied only in biological systems. In such biological systems mutational robustness and neutral networks have been shown to enable evolutionary processes to function effectively. Our results suggest that the same is true of software systems.

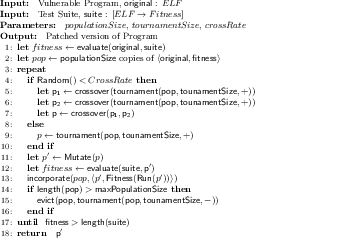

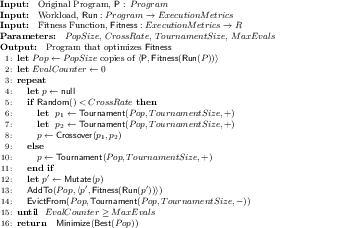

Applications: Evolutionary computation improves the fitness, or performance, of a population of candidate solutions through an iterative cycle of random modification, evaluation, and selection in a process greatly resembling natural selection [69,70]. Evolutionary computation techniques typically begin with an initial population of randomly generated individuals. The methods of representation and the transformation operators of candidate solutions are often domain-specific and selected for the particular task at hand [147].

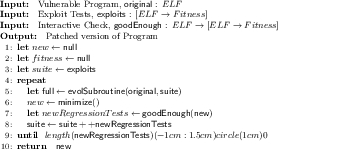

Figure 1: Improving real-world software by applying evolutionary computation techniques which are implemented using software engineering tooling.

This work improves an existing instance of software that is already close to optimal.1 Instead of using domain-specific representations and transformations (as in most prior work in genetic programming), existing software engineering tools are used to ensure applicability to the wide range of complex software used in the real world as well as interoperability with existing software development environments. Building on prior work using genetic programming to repair bugs in existing software [185,109], we adopt evolutionary computation techniques to support the software engineering applications presented in later chapters.

Organization: We present empirical results suggesting that today’s software engineering toolchain supports the operations required by evolutionary computation techniques (Chapter 3; including previously unpublished work and work published in GPEM [164]), and more specifically, the program representation, transformation operators, and evaluation functions (Sections 3.1 and 3.2; including previously unpublished work and work published in ASE 2010 [163]). We show that software systems have high mutational robustness and large neutral networks, which makes them amenable to improvement through evolutionary processes (Section 3.3).

We next apply evolutionary techniques to a number of software engineering tasks such as repairing bugs in off-the-shelf software (Chapter 5; published in ASPLOS 2013 [161]), patching vulnerabilities in a closed-source binary (Chapter 6; previously unpublished), generating diverse implementations of a specification (Chapter 4; published in GPEM [164]), and reducing the energy consumption of popular benchmark programs (Chapter 7; published in ASPLOS 2014 [162]). We conclude with a discussion of the outstanding challenges standing in the way of wider adoption of these techniques, possible steps to overcome these challenges, and a summary of the impact of this work to date (Chapter 8).

We review the relevant literature (Chapter 2) and define the technical terms used in this work (Glossary).

Reproducibility: The tools, data, and instructions required to reproduce the experimental results presented in this work are provided. Appendix 9 describes the software developed for this research and includes pointers to supporting libraries and the implementations of applications. Appendix 10 describes the data sets used in the experiments, including multiple suites of benchmark programs. In some cases the analysis that produced figures and tables is preserved in Org-mode files [45], to support automated reproducibility [159], and links to these files are provided as footnotes.

2 Background and Literature Review

This research builds upon previous studies of biological and computational systems. The study of robustness and evolvability in biology provides the concepts and terminology with which we investigate the same principles in computational systems.

Moving from the biological to the computational, this chapter reviews the most relevant results from prior work in biology (Section 2.1), evolutionary computation (Section 2.2), and software engineering (Section 2.3). The final section of this chapter focuses on the immediate precursor work: the use of evolutionary techniques to automatically repair defects in software source code (Section 2.4).

2.1 Robustness and Evolvability in Biology

The ability of living systems to maintain functionality across a wide range of environments and to adapt to new environments is unmatched by man-made systems. The relationship between robustness and evolvability in living systems has been studied extensively. This section reviews this field of study, highlighting the elements most relevant to this dissertation.

Living systems consist of a genotype and a phenotype. The genotype is the heritable information which specifies, or gives rise to, the organism. The resulting organism and its behavior, or interaction with the world, is the phenotype.

Both the genetic and phenotypic components have associated types of robustness. Genetic robustness, or mutational robustness, is the ability of a genotype to consistently produce the same phenotype despite perturbations to its genetic material. Genetic robustness can be achieved in different ways and on different levels. At the lowest level, important amino acids are over-represented in the space of possible codon encodings of triplets of base pairs, making random changes to encoding more likely to produce useful amino acids. Further, functionally similar amino acids have similar encodings. As a result of these properties, many small mutations in amino acid codings are likely to encode identical or similar amino acids [98], making the organism more robust to mutations affecting amino acid encodings. At higher levels, vital functions are often degenerate, meaning that they are implemented by diverse partially redundant systems [47]. For example, in the nervous system no two neurons are equivalent, but no single neuron is necessary, resulting in a system whose functionality is robust to small changes. Degenerate systems may be more evolvable than systems which achieve robustness through mere redundancy [52,187]. Finally, many mechanisms have evolved that buffer environmental changes, e.g., metabolic pathways whose outputs are stable over a wide range of inputs [180].

Environmental robustness is the ability of a phenotype to maintain functionality despite environmental perturbations. Many of the biological mechanisms responsible for environmental robustness improve the overall robustness of the organism and as a result contribute to mutational robustness [113]. There is a strong correlation between genetic and environmental robustness [94].

Mutational robustness appears to be an evolved feature because evolution tends to increase the mutational robustness of important biological components [33,189]. Although it is unlikely that mutational robustness is explicitly favored by natural selection as a protection against mutation (due to the low mutation rates in most populations), it seems likely that it arose as a side-effect of evolution to enhance environmental robustness [176].

In evolutionary biology fitness is the measurement of an organism’s ability to survive and reproduce. Fitness landscapes are used to visualize the fitness of related genotypes [190]. Typically one dimension of a fitness landscape encodes an organism’s fitness as a scalar, and all other dimensions are used to represent genotypes. This space of genotypes may be a high-dimensional discrete space in which each point is a genotype and the immediate neighbors of each point are the genotypes that are reachable by application of a single mutation to the original point.

A mutationally robust organism has many genotypes that map to phenotypes with the same fitness [92]. Regions of the space consisting of genotypes with identical fitness are called neutral spaces [91], or neutral networks, and are depicted in Figure 1.

Figure 1: Neutral spaces are subsets of an organism’s fitness landscapes in which every organism has identical fitness.
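The notions of single-mutation neighbors and neutral networks can be made concrete with a toy model. The following Python sketch is an illustration only and is not drawn from the cited literature: genotypes are assumed to be fixed-length bit tuples, the hypothetical `phenotype_fitness` function ignores all but the first two bits, and fitness equality stands in for phenotypic equivalence. A breadth-first walk collects every genotype reachable from a starting point through single-bit mutations that leave fitness unchanged.

```python
from collections import deque


def neighbors(genotype):
    """Yield every genotype reachable by one point mutation (a single bit flip)."""
    for i in range(len(genotype)):
        yield genotype[:i] + (1 - genotype[i],) + genotype[i + 1:]


def phenotype_fitness(genotype):
    """Hypothetical fitness: only the first two bits affect the phenotype."""
    return genotype[0] + genotype[1]


def neutral_network(start, fitness):
    """Collect all genotypes connected to `start` by fitness-preserving mutations."""
    target = fitness(start)
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for candidate in neighbors(current):
            if candidate not in seen and fitness(candidate) == target:
                seen.add(candidate)
                queue.append(candidate)
    return seen


def mutational_robustness(genotype, fitness):
    """Fraction of single-mutation neighbors whose fitness is unchanged."""
    target = fitness(genotype)
    outcomes = [fitness(n) == target for n in neighbors(genotype)]
    return sum(outcomes) / len(outcomes)


start = (1, 1, 0, 0, 0)
network = neutral_network(start, phenotype_fitness)
print(len(network), mutational_robustness(start, phenotype_fitness))
```

In this toy landscape the three bits that do not influence fitness can be flipped freely, so the neutral network of `start` contains every combination of those bits, while mutations to the first two bits leave the network.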

The relationship among mutational robustness, neutral networks, and evolvability is complex [180,179,46]. Mutational robustness can be explicitly selected for in static environments and selected against in dynamic environments [189,126]. Periods of time without selection can increase mutational robustness [176], and periods of strong directional selection can reduce mutational robustness [66].

Mutational robustness directly inhibits evolution by reducing the likelihood that any given genetic modification will have a phenotypic effect. This inhibitory effect can dominate at the small time scales of individual mutations. In some cases overly large neutral networks can actually reduce evolvability [5]. However, large neutral networks predominately promote evolvability. Populations tend to spread out across neutral networks via a process called drift, accruing genetic diversity and novel genetic material [32,166,19,114]. This accrued genetic material is believed to play an important role in evolutionary innovation, and provides the genetic fodder required for large evolutionary advances [178,122,141,144,3].

2.2 Evolutionary Computation

This section describes research into the application of evolutionary techniques in computational systems. We describe the fields of digital evolution and evolutionary algorithms. In digital evolution computational systems are used to perform experiments not yet feasible in biological systems. In evolutionary algorithms engineered systems are optimized using algorithms which mimic the biological process of natural selection.

2.2.1 Digital evolution

Some of the research into evolutionary biology discussed in Section 2.1 relies on experiments not based on direct observation of biological systems, but rather on computational models of evolving systems. The evolutionary time frames and the degree of environmental controls required for such experiments are not yet achievable in experiments using biological systems. In digital evolution, much more control and measurement is possible via computational models of evolving populations. These models represent genotypes using specialized assembly languages in environments in which their execution (phenotype) determines their reproductive success [142].

In addition to modeling biological evolution, work in digital evolution has generated hypotheses about those properties of programming languages that might encourage evolvability [140]. Although the languages, or chemistries, studied in these computational environments are far from traditional programming languages, some predictions do transfer, such as the brittleness of absolute versus symbolic addressing to reference locations in an assembler genome [140]. Work in the computer virus community has produced similar research on the evolvability of traditional x86 assembly code [76]. One of the contributions of this dissertation is to support some of these claims and intuitions while disproving others. For example, Section 3.3.2.5 confirms the brittleness of absolute addressing in ELF files, while in Chapter 5 we find that x86 ASM may be effectively evolved, contrary to the conclusions of the computer virus community.

2.2.2 Evolutionary Algorithms

Evolutionary algorithms, including both the genetic algorithms and genetic programming sub-fields, predate the work on digital evolution.

Genetic algorithms are techniques which apply the Darwinian view of natural selection [40] to engineering optimization problems [69,129]. Genetic algorithms require a fitness function which the algorithm seeks to maximize. A population of candidate solutions, often represented as vectors, is randomly generated, and then maintained and manipulated through transformation operations typically including mutation and crossover.2 The algorithm proceeds in a cycle of fitness-based selection, transformation, and evaluation, which is usually organized into generations, until a satisfactory solution is found or a runtime budget is exhausted.
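The generational cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than any particular published algorithm: candidate solutions are bit vectors, `one_max` is a hypothetical fitness function, and the choices of tournament selection, one-point crossover, single-bit mutation, and elitism (carrying the best individual into the next generation) are assumptions made here for concreteness.

```python
import random


def one_max(bits):
    """Hypothetical fitness function to maximize: the number of 1 bits."""
    return sum(bits)


def mutate(bits, rng):
    """Flip one randomly chosen position."""
    i = rng.randrange(len(bits))
    return bits[:i] + [1 - bits[i]] + bits[i + 1:]


def crossover(a, b, rng):
    """One-point crossover of two equal-length vectors."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def tournament(population, fitness, rng, size=2):
    """Fitness-based selection: the best of `size` randomly drawn individuals."""
    return max(rng.sample(population, size), key=fitness)


def generational_ga(fitness, length=20, pop_size=30, max_generations=200,
                    target=None, seed=0):
    rng = random.Random(seed)
    # Randomly generated initial population of vectors.
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    for _ in range(max_generations):
        best = max(population, key=fitness)
        if target is not None and fitness(best) >= target:
            return best  # satisfactory solution found
        next_generation = [best]  # elitism (an assumption of this sketch)
        while len(next_generation) < pop_size:
            parent_a = tournament(population, fitness, rng)
            parent_b = tournament(population, fitness, rng)
            child = mutate(crossover(parent_a, parent_b, rng), rng)
            next_generation.append(child)
        population = next_generation
    return max(population, key=fitness)  # runtime budget exhausted


best = generational_ga(one_max, target=20)
print(one_max(best))
```

The `max_generations` parameter plays the role of the runtime budget, and `target` encodes what counts as a satisfactory solution.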

Steady state genetic algorithms such as those used in Chapters 6 and 7 are not organized into discrete generations; rather, they apply the selection, transformation, and evaluation cycle to single individuals [119]. New individuals are immediately inserted into the population, and when the size of the population exceeds the maximum allowed population size, individuals are selected for eviction. The use of a steady state evolutionary algorithm simplifies the implementation of genetic algorithms by removing the need for explicit handling of generations. Steady state algorithms also reduce the maximum memory overhead to almost 50% of that of generational techniques because of the interleaved individual creation and eviction. Of most relevance to this work, steady state algorithms are more exploitative than generational genetic algorithms and are thus more appropriate in situations which begin with a highly fit solution, such as in this work, which uses evolutionary techniques to improve existing software.
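A steady state loop can be sketched as follows. The sketch assumes tournament selection for choosing parents and a negative tournament for eviction; both are illustrative choices and are not claimed to be the exact operators used in Chapters 6 and 7. The `closeness` fitness function and `nudge` mutation are hypothetical stand-ins for evaluating and transforming a program.

```python
import random


def steady_state_search(seed_individual, fitness, mutate,
                        max_pop=16, evaluations=500, seed=0):
    rng = random.Random(seed)
    # Begin from an existing, already fit solution instead of random individuals.
    population = [seed_individual]
    for _ in range(evaluations):
        # Selection: the fitter of two randomly drawn individuals.
        parent = max(rng.choice(population), rng.choice(population), key=fitness)
        child = mutate(parent, rng)
        population.append(child)  # inserted immediately, no generations
        if len(population) > max_pop:
            # Eviction: the less fit of two distinct, randomly drawn individuals.
            a, b = rng.sample(range(len(population)), 2)
            loser = a if fitness(population[a]) < fitness(population[b]) else b
            population.pop(loser)
    return max(population, key=fitness)


def closeness(individual):
    """Hypothetical fitness: negated distance from an all-threes vector."""
    return -sum(abs(x - 3) for x in individual)


def nudge(individual, rng):
    """Hypothetical mutation: add +1 or -1 at one random position."""
    i = rng.randrange(len(individual))
    child = list(individual)
    child[i] += rng.choice((-1, 1))
    return child


start = [3, 3, 2, 3]
result = steady_state_search(start, closeness, nudge)
print(closeness(start), closeness(result))
```

Because the evicted individual is never fitter than the individual it is compared against, the best fitness in the population cannot decrease, which reflects the exploitative character described above.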

Genetic programming is a specialization of genetic algorithms in which the candidate solutions are programs, often represented as ASTs [70,101], and has been applied to a number of real-world problems [147]. Genetic programming languages are often much simpler than those used by human programmers and rarely resemble regular programming languages, although in some cases machine code has been evolved directly [104]. Recently genetic programming techniques have been applied to the repair of extant real-world programs [185]. This application is discussed at greater length in Section 2.4.

Genetic algorithms and genetic programming differ both in the way candidate solutions are represented (by using vectors and trees respectively [147]) and in the application to programs in the case of genetic programming. The work presented in this dissertation applies to existing programs, as does genetic programming. However, vector representations are often used, as they are in genetic algorithms, so the blanket term “evolutionary computation” is used in this dissertation to avoid confusion.

The performance of evolutionary algorithms is highly dependent upon the properties of the fitness landscape as defined by their fitness function. NK-studies have been used to assess the effectiveness of these techniques over tunable fitness landscapes [11]. In these studies the values N and K may be tuned to control the ruggedness of the landscape being searched. N controls the dimensionality of the space, and K controls the epistasis (the degree of interaction between parts) of the space. Chapter 7 in this work explores the use of smoother fitness functions than those used in prior chapters. Further discussion of fitness functions is given in Sections 3.6.4 and 8.2.2.
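A tunable landscape of this kind can be sketched as follows. The construction below follows the commonly described form of the NK model, in which each of the N loci contributes a random value determined by its own state and the states of K other loci, and fitness is the mean contribution. The function name `make_nk_landscape`, the binary alphabet, and the random choice of interacting loci are assumptions of this sketch, not details taken from the cited study.

```python
import itertools
import random


def make_nk_landscape(n, k, seed=0):
    """Return a fitness function over length-n bit tuples with epistasis k."""
    rng = random.Random(seed)
    # For each locus, pick the k other loci whose states it depends on.
    links = [rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    # One random contribution per joint state of a locus and its k links.
    tables = [
        {state: rng.random()
         for state in itertools.product((0, 1), repeat=k + 1)}
        for _ in range(n)
    ]

    def fitness(genotype):
        total = 0.0
        for i in range(n):
            state = (genotype[i],) + tuple(genotype[j] for j in links[i])
            total += tables[i][state]
        return total / n

    return fitness


smooth = make_nk_landscape(8, 0)   # K = 0: loci contribute independently
rugged = make_nk_landscape(8, 7)   # K = N - 1: every locus interacts with all others
genotype = (0, 1, 0, 1, 1, 0, 0, 1)
print(smooth(genotype), rugged(genotype))
```

With K = 0 a single mutation changes exactly one contribution, so the landscape is additive and smooth; raising K makes each mutation disturb more contributions, which increases ruggedness.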

Prior work in genetic programming has leveraged vector program representations applied to machine code. The limited amount of previous work in this field falls into two categories. The first category guarantees that the code-modification operators can produce only valid programs, often through complex processes incorporating domain-specific knowledge of the properties of the machine code being manipulated [135,143]. In the second approach, the genetic operators are completely general and the task of determining program validity is relegated to the compiler and execution engine [104]. This dissertation takes the latter approach, and we find that both compiler IRs and ASM are surprisingly robust to naïve modifications (Section 3.3.2.5).

2.3 Software Engineering

This dissertation contributes to the larger trend in software engineering of emphasizing acceptable performance over formal correctness. We review recent work in this vein and highlight tools and methods of particular interest.

Approximate computation encompasses a variety of techniques that seek to explore the trade-off of reduced accuracy in computation for increased efficiency. The main motivation behind this work is the insight that existing computational systems often provide much more accuracy and reliability than is strictly required from the level of hardware up through user-visible results. Examples of promising approximate computation techniques include neural accelerators for efficiently executing delineated portions of software applications [51], and languages for the construction of reliable programs over unreliable hardware [28,20].

In failure oblivious computing [151], common memory errors such as out-of-bounds reads and writes are ignored or handled in ways that are often sufficient to continue operating but not guaranteed to preserve program semantics. For example, a memory read of a position beyond the end of available memory can be handled in a number of different ways. The requested address can be “wrapped” modulo the largest valid memory address. Memory can be represented as a hash table in which addresses are merely keys and new entries are created when needed. In this case reads of uninitialized hash entries can simply return random values. By preventing common errors such as buffer overruns these techniques have been shown to increase the security and reliability of some software systems. Failure oblivious computing assumes that in many cases security and reliability are more important than guaranteed semantics preservation [149,152,117,150].
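The two handling strategies described above can be sketched as small memory models. These classes are hypothetical illustrations and are not the implementation from the cited work; in particular, wrapping modulo the memory size, the byte-sized range of manufactured values, and the decision not to store a manufactured value after a read are assumptions of this sketch.

```python
import random


class WrappingMemory:
    """Fixed-size memory in which out-of-bounds addresses wrap around."""

    def __init__(self, size):
        self.cells = [0] * size

    def read(self, address):
        return self.cells[address % len(self.cells)]

    def write(self, address, value):
        self.cells[address % len(self.cells)] = value


class HashMemory:
    """Memory as a hash table: addresses are keys, entries are created on write."""

    def __init__(self, seed=0):
        self.cells = {}
        self.rng = random.Random(seed)

    def read(self, address):
        if address not in self.cells:
            # Uninitialized read: return a manufactured random value.
            return self.rng.randrange(256)
        return self.cells[address]

    def write(self, address, value):
        self.cells[address] = value


wrapped = WrappingMemory(4)
wrapped.write(5, 9)           # address 5 is out of bounds and wraps to cell 1
hashed = HashMemory()
hashed.write(1000, 7)         # any address is a valid key
print(wrapped.read(1), hashed.read(1000), hashed.read(-1))
```

Neither model raises an error on an invalid access, so execution continues, at the cost of no longer guaranteeing the original program semantics.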

Beal and Sussman take a similar approach, proposing a system for increasing the robustness of software by pre-processing program inputs [15]. Under the assumption that most software operates on only a sparse subset of the possible inputs, they propose a system for replacing aberrant or unexpected inputs with fabricated inputs remembered from previous normal operation. This system of input “hallucination” is shown to improve the robustness of a simple character recognition system.

While the previous system learns and enforces invariants on program input, the ClearView system [146] learns invariants from trace data extracted from a running binary using Daikon [50]. When these invariants are violated by an exploit of a vulnerability in the original program, the system automatically applies an invariant-preserving patch to the running binary, which ensures continued execution. ClearView was evaluated against a hostile red team and was able to successfully repair seven out of ten of the red team’s attacks [146]. Despite these impressive results the ClearView system has a number of limitations. The tool used to collect invariants (Daikon) is not exhaustive (e.g., missing polynomial and array invariants [134]), and ClearView itself is only able to detect a limited set of errors, is only able to repair a pre-configured set of errors for which hand-written templates exist, and is not guaranteed to preserve correct program behavior.

The approaches mentioned above are applied to executing software systems. There are also techniques that apply to the pre-compiled software source-code, or genotype. One family of such techniques includes loop perforation [128] and dynamic knobs [68]. In loop perforation, software is compiled to a simple IR; looping constructs are found in this IR and then modified to execute fewer times by skipping some loop iterations. This technique can be used to reduce energy and runtime costs of software while maintaining probabilistic bounds of expected correctness. This work is notable for introducing program transformations that are not formally semantics preserving but are rather predictably probabilistically accurate. The impact of these techniques is limited to specific manually specified program transformations; a more general method of program optimization, of which loop perforation may be a special case, is given in Chapter 7.
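The effect of loop perforation can be illustrated at the source level. The cited technique operates on a compiler IR; the Python sketch below only mimics the transformation by hand on a hypothetical averaging loop, with a `stride` parameter (an assumption of this sketch) controlling how many iterations are skipped.

```python
def mean(values):
    """Original loop: visits every element."""
    total = 0.0
    for value in values:
        total += value
    return total / len(values)


def perforated_mean(values, stride=2):
    """Perforated loop: executes only every `stride`-th iteration."""
    total = 0.0
    count = 0
    for i in range(0, len(values), stride):
        total += values[i]
        count += 1
    return total / count


data = [float(i % 10) for i in range(1000)]
exact = mean(data)
approximate = perforated_mean(data, stride=3)
print(exact, approximate, abs(exact - approximate))
```

The perforated version performs roughly one third of the work and returns an approximation rather than a guaranteed identical result, which is the accuracy-for-efficiency trade described above.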

There is a common misconception that software is brittle and that the smallest changes in working code can lead to catastrophic changes in behavior. This perceived fragility is codified in mutation testing systems. Such systems measure program test suite coverage by the percentage of random program changes that cause the test suite to fail, and operate under the assumption that random changes to working programs result in breakage [116,43,72,81]. This usage presumes that all program mutants are either buggy or equivalent to the original program.

The detection of equivalent programs is a significant open problem for the mutation testing community (cf. equivalent mutant problem [81]) [63]. Although the detection of equivalent mutants is undecidable [25], a number of tools have been proposed for automatically finding equivalent mutants [136,139,155]. By contrast, the techniques presented herein exploit neutral and beneficial variation in program mutants for use in software development and maintenance. Recent work by Weimer et al. [184] seeks to leverage work on equivalent mutants from the mutation testing community to improve the efficiency of automated bug repair.
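The mutation testing measure discussed above can be sketched with a toy example. The program, the hand-written mutants, and the test suite below are all hypothetical; real mutation testing tools generate mutants automatically. The score is the fraction of mutants that cause at least one test to fail, and the surviving mutant in this example happens to be behaviorally equivalent to the original.

```python
def original(a, b):
    """Program under test: returns the larger of two numbers."""
    return a if a >= b else b


# Hypothetical hand-written mutants of `original`.
mutants = {
    "ge_to_gt": lambda a, b: a if a > b else b,    # equivalent: ties return an equal value
    "ge_to_le": lambda a, b: a if a <= b else b,   # computes the minimum instead
    "return_a": lambda a, b: a,                    # ignores the second argument
}

# Test suite as (arguments, expected result) pairs.
test_suite = [((1, 2), 2), ((5, 3), 5), ((4, 4), 4)]


def passes(program, tests):
    """True when the program produces the expected result for every test."""
    return all(program(*args) == expected for args, expected in tests)


def mutation_score(candidates, tests):
    """Return the fraction of mutants that fail the suite, and their names."""
    killed = [name for name, mutant in candidates.items()
              if not passes(mutant, tests)]
    return len(killed) / len(candidates), killed


score, killed = mutation_score(mutants, test_suite)
survivors = [name for name in mutants if name not in killed]
print(score, killed, survivors)
```

Mutation testing treats a surviving mutant as either a gap in the test suite or an equivalent program, whereas the techniques in this dissertation treat surviving, fully functional variants as raw material.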

2.4 Genprog: Evolutionary Program Repair

Genprog is a tool for automatically repairing defects in off-the-shelf software using an evolutionary algorithm. It does not require a formal specification, program annotations, or any special constructs or coding practices. It requires only that the program be written in C and be accompanied by a test suite [185,109].

Figure 2: Genprog automated evolutionary program repair (Le Goues [legoues2013automatic, Figure 3.2]). Input includes a C program, a passing regression test suite, and at least one failing test indicating a defect in the original program. The C source is parsed into an AST which is iteratively mutated and evaluated. When a variant of the original program is found which continues to pass the regression test suite and also passes the originally failing test, this variant is returned as the “repair.”

The Genprog repair process is shown in Figure 2. As input Genprog requires the C source code of the buggy software, the regression test suite which the current version of the software is able to pass, and at least one failing test indicating the bug. The source is parsed into a Cil AST [132,185], which is then duplicated and transformed using the three mutation operations (shown in Panels a-c of Figure 2) and crossover to form a population of program variants.

In an evolutionary process of program modification and evaluation, Genprog searches for a variant of the original program which is able to pass the originally failing test case, while still passing the regression test suite. This version is returned by the evolutionary search portion of the Genprog technique. As a final post-processing step the difference between the original program and the repair is minimized to the smallest set of diff hunks required to achieve the repair. This minimization is performed using delta debugging, a systematic method of minimization while retaining a desired property [192].
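The minimization step can be sketched as a simplified delta debugging loop. This is an illustration of the general technique and not Genprog's implementation: `ddmin` is a simplified variant that assumes the items are unique, and the `still_repairs` predicate is a hypothetical stand-in for applying a set of diff hunks, rebuilding the program, and checking that all tests pass.

```python
def ddmin(items, test):
    """Shrink `items` to a subset that still satisfies `test`.

    The result is minimal in the sense that removing any single
    remaining item makes `test` fail (for results of length >= 2).
    """
    assert test(items), "the full set must satisfy the property"
    n = 2  # current granularity: number of chunks
    while len(items) >= 2:
        chunk = max(len(items) // n, 1)
        subsets = [items[i:i + chunk] for i in range(0, len(items), chunk)]
        reduced = False
        for subset in subsets:
            complement = [x for x in items if x not in subset]
            if test(subset):
                items, n, reduced = subset, 2, True
                break
            if test(complement):
                items, n, reduced = complement, max(n - 1, 2), True
                break
        if not reduced:
            if n >= len(items):
                break  # finest granularity reached, nothing removable
            n = min(n * 2, len(items))
    return items


# Hypothetical repair: of eight diff hunks, only hunks 2 and 5 are needed.
hunks = list(range(8))
required = {2, 5}


def still_repairs(subset):
    return required <= set(subset)


minimal = ddmin(hunks, still_repairs)
print(minimal)
```

Each call to the predicate corresponds to a full build and test run in practice, so the number of subsets tried matters more than the cost of the loop itself.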

In a large-scale systematic study Genprog was able to fix 55 of 108 bugs taken from a number of popular open-source projects. When performed on the Amazon EC2 cloud computing infrastructure each repair cost less than $8 on average [108], which is significantly cheaper than the average cost of manual bug repair.

Genprog has had a significant impact on the software engineering research community. The project won best paper awards at ICSE in 2009, GECCO in 2009, and SBST in 2009. In addition it earned Humies awards for human-competitive results produced by evolutionary algorithms. In 2009 Genprog [185] and ClearView [146] demonstrated the applicability of automated program repair to real-world software defects, and Orlov and Sipper demonstrated a technique similar to Genprog over smaller Java programs [143]. Since then interest in the field has grown with multiple applications (e.g., AutoFix-E [182], AFix [82]) and an entire section of ICSE 2013 (e.g., SemFix and FoREnSiC [133,99], ARMOR [29], PAR [89,130]).

This dissertation is also a descendant of Genprog. The experiments described in the following chapters investigate the mechanisms underlying Genprog’s success (Chapter 3 [164]) and extend the Genprog technique into new areas such as repair in embedded systems (Chapter 5 [161]), repair of closed source binaries (Chapter 6), and optimization to reduce energy consumption (Chapter 7 [162]).

3 Software Representation, Mutation, and Neutral Networks

In biological systems both mutational robustness and the large neutral networks reachable through fitness-preserving mutation are thought to be necessary enablers of evolutionary improvement as discussed in Section 2.1. This chapter describes a series of experiments which demonstrate the prevalence of mutational robustness and large neutral networks in software using the existing development toolchain.

Mutational robustness and neutral networks arise in systems with a genetic structure which supports mutation and crossover and which gives rise to a phenotype that in turn supports fitness evaluation. This chapter defines multiple program representations and the mutation and crossover transformations they support (Section 3.1). These representations include high-level ASTs, compiler IR, ASM, and binary executables. Methods of expressing these representations as executable programs and evaluating their fitness are given (Section 3.2).

Software mutational robustness is present in real-world programs across multiple levels of representation, regardless of the quality of the method of fitness evaluation (Section 3.3; published in GPEM [164]). Large neutral networks are found and their properties are investigated (Section 3.4; previously unpublished work and work published in GPEM [164]). This chapter concludes with a mathematical analysis of the fitness landscape defined by these program representations and of the resultant neutral networks (Section 3.5; previously unpublished).

3.1 Representation and Transformation

3.1.1 Representations

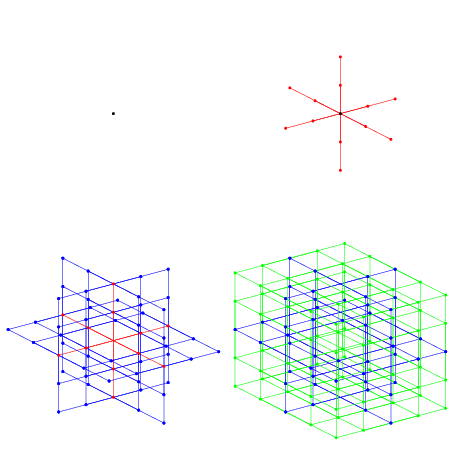

In this dissertation we view a program’s representation as its genetic information, which can be modified with mutation and crossover operations. As discussed in Section 2.1, program representations are typically hierarchical trees or linear vectors. In this dissertation we study both forms. By focusing on program representations which are closely based upon structures used commonly in software engineering, the implementations of many of these program representations are able to leverage existing tools.

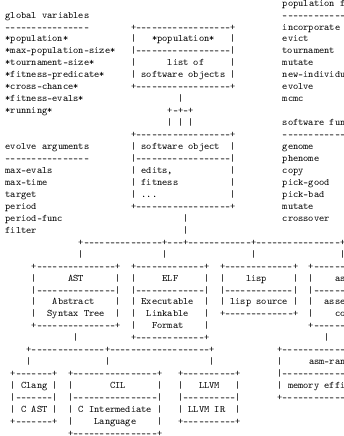

Specifically we will investigate two high-level tree program representations and three low-level vector program representations. The tree program representations include one based on Cil [109], and one based on CLang ASTs [105]. The three lower-level program representations include one based on argumented ASM code [163], one based upon LLVM IR [106], and one program representation applicable directly to binary ELF files [161]. This last representation is operationally similar to the ASM representation with additional bookkeeping required for all program transformations. The five program representations are depicted graphically in Figure 2 and are described in greater detail below.

Figure 2: Program representations. The Cil and Clang tree representations are shown in Panel (a). Panels (c) and (d) show the ASM, LLVM, and ELF program representations. The source code shown in Panel (a) is not used as a program representation because of the lack of direct source-code level transformations (most tools for source code transformation first parse the source into an AST which is then transformed and serialized back to source).

3.1.1.1 CLang-AST

The highest level representation is based on ASTs parsed from C-family languages using the CLang tooling. This level of representation most closely matches source code written directly by human software developers. This level of representation is used in Section 3.3.2.5.

3.1.1.2 Cil-AST

The next highest level of representation is based on ASTs parsed from C source code using the Cil toolkit to parse, manipulate and finally serialize C source ASTs back to C source code [132,185].

Cil simplifies some C source constructs to facilitate programmatic manipulation. Despite these simplifications, the Cil AST representation more closely resembles a high-level source code than a true compiler IR. This level of representation is used in Sections 3.3 and 3.4 and in Chapters 4 and 5.

3.1.1.3 LLVM

The LLVM representation operates over the LLVM compiler IR, which is written in SSA form [106]. LLVM supports multiple language front-ends making it applicable to a wide range of software projects.

A rich suite of tools is emerging around the LLVM infrastructure.3 This representation benefits from these tools because they can be easily applied to LLVM program representations to implement fitness evaluation or program transformation. This level of representation is used in Section 3.3.2.5.

3.1.1.4 ASM

Any compiled language is amenable to modification at the ASM level. This level represents programs as a vector of assembler instructions. Some compilers support this translation directly, e.g., the -S flag to the GCC compiler causes it to emit a string representation of ASM instructions. For our ASM program representation, we parse this sequence of instructions into a vector of argumented assembly instructions. This parsing is equivalent to splitting the string emitted by GCC -S on newline characters. This vector can be manipulated programmatically (e.g., by mutation and crossover operations) and serialized back to a text string of assembly instructions [163,161]. The ASM program representation handles multiple ISAs including both CISC ISAs such as x86 and RISC ISAs such as MIPS.

This level of representation is used in Sections 3.3 and 3.4 and in Chapters 5 and 7.
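The parse and serialize steps of the ASM representation can be sketched as follows. This is a minimal illustration, not the dissertation's tooling: the function names `parse_asm` and `serialize_asm` and the sample assembler text are hypothetical, and each vector element is simply one line of compiler output (an instruction, label, or directive).

```python
def parse_asm(text):
    """Split assembler text (e.g., as emitted by `gcc -S`) into a vector of lines."""
    return text.split("\n")


def serialize_asm(genome):
    """Join a vector of assembler lines back into a single text string."""
    return "\n".join(genome)


# Hypothetical assembler text standing in for real compiler output.
sample = "\t.globl\tmain\nmain:\n\tmovl\t$0, %eax\n\tret\n"

genome = parse_asm(sample)
# An unmodified genome serializes back to exactly the original text.
round_trip = serialize_asm(genome)
```

Because splitting and joining on newlines are inverse operations, any change in the serialized text is attributable to the mutation or crossover operations applied to the vector.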

3.1.1.5 ELF

The ELF file format is a common format of compiled and linked library and executable files [174]. When the code in ELF files is executed, it is loaded into memory and translated by the CPU into a series of argumented assembler instructions. Using custom tooling in combination with existing disassemblers such as objdump it is possible to modify the sequence of assembler instructions in an ELF file in much the same way as with the ASM program representation [161]. This level of representation is used in Sections 3.3.2.5 and 3.4 and in Chapters 5 and 6.

3.1.2 Transformations

Every program representation used in this dissertation supports the same set of three simple mutation transformations (copy, delete and swap) and at least one crossover transformation. These transformations are taken from previous work in the genetic programming community where they have been shown to be powerful enough to evolve novel behavior [147].

All four program transformations are simple and general. They do not encode any domain knowledge specific to the program material they manipulate. They are applicable to multiple program representations (e.g., AST or ASM), and to multiple languages (e.g., x86 or ARM assembler) without modification. These transformations are plausible analogs of common biological genetic transformations, and are commonly performed by human software developers [90].

None of these transformations creates new code. Rather they remove, duplicate, or re-order elements already present in the original program. This design is based on the intuition that most extant programs already contain the code required to implement any desirable behavior related to their specification. The benefit of limiting the program transformations in this way is to limit the size of the space of potential programs (Section 3.5).

3.1.2.1 Mutation

The mutation transformations are copy, delete, and swap. Copy duplicates a randomly chosen AST subtree or vector element and inserts the duplicate at a random position in the AST or immediately after a randomly chosen location in the vector, respectively. Delete removes a randomly chosen AST subtree or vector element. Swap exchanges two randomly chosen AST subtrees or vector elements.
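The three mutation operators over the vector representation can be sketched as follows. This is a minimal illustration under stated assumptions rather than the implementation used in the experiments: the function names are hypothetical, the genome is a plain Python list, and swap is assumed to pick two distinct positions.

```python
import random


def copy_mutation(genome, rng):
    """Duplicate a random element and insert it immediately after a random location."""
    if not genome:
        return list(genome)
    src = rng.randrange(len(genome))
    dst = rng.randrange(len(genome))
    variant = list(genome)
    variant.insert(dst + 1, genome[src])
    return variant


def delete_mutation(genome, rng):
    """Remove one randomly chosen element."""
    if not genome:
        return list(genome)
    idx = rng.randrange(len(genome))
    variant = list(genome)
    del variant[idx]
    return variant


def swap_mutation(genome, rng):
    """Exchange two randomly chosen elements (assumed distinct positions)."""
    if len(genome) < 2:
        return list(genome)
    i, j = rng.sample(range(len(genome)), 2)
    variant = list(genome)
    variant[i], variant[j] = variant[j], variant[i]
    return variant
```

None of the operators creates new elements: every element of a variant is already present in the original genome, and the original list is never modified in place.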

Figure 2 illustrates the mutation operators. ELF mutation operations are similar to ASM mutation operations, are described in greater detail in Section 6.2.2.1, and are shown in Figure 2.

Figure 2: Mutation transformations over both tree and vector program representations.

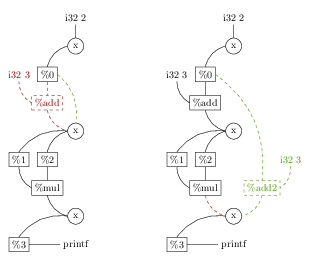

The LLVM transformations are more complex than the simple operations shown in Figure 2. The LLVM IR requires that a valid data-dependency graph be maintained by all program transformations. Thus, the LLVM mutations must explicitly patch this dependency graph, assigning inputs and outputs for all new statements and replacing the inputs and outputs of all removed statements. Figure 2 illustrates the process for the delete and copy operations. These data dependencies that we manipulate explicitly in the LLVM IR are managed implicitly at the ASM level through the re-use of processor registers.

Figure 2: Illustration of transformations over LLVM IR. These transformations require that the SSA data dependency graph be repaired after each mutation.

3.1.2.2 Crossover

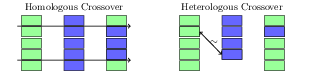

Crossover re-combines two program representations and produces two new program representations with elements from each parent in a process analogous to the biological process of the same name. In tree representations, one subtree of each parent is chosen randomly and they are swapped. In the vector representation a two-point crossover is used [41]. First, two indices which are less than the size of the smaller vector are chosen, then the contents of the vectors between these indices are swapped.
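The two-point crossover over vectors described above can be sketched as follows. The function name is hypothetical, and the sketch assumes that the two cut points are distinct and that the exchanged region is the half-open range between them; the text does not specify either detail.

```python
import random


def two_point_crossover(parent_a, parent_b, rng):
    """Swap the contents of two vectors between two random indices.

    Both indices are smaller than the length of the shorter parent, so the
    exchanged region exists in both vectors and each child keeps the length
    of the parent it is derived from.
    """
    a, b = list(parent_a), list(parent_b)
    limit = min(len(a), len(b))
    if limit < 2:
        return a, b  # too short to choose two distinct cut points
    lo, hi = sorted(rng.sample(range(limit), 2))
    child_a = a[:lo] + b[lo:hi] + a[hi:]
    child_b = b[:lo] + a[lo:hi] + b[hi:]
    return child_a, child_b
```

As with the mutation operators, the children contain only elements drawn from the two parents.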

Figure 2 illustrates both the tree and vector crossover transformations.

Figure 2: Crossover transformations over tree and vector program representations.

3.1.3 Implementation Requirements

Each level of representation places different requirements on how the software can be programmatically manipulated and what parts of the tool chain need to be available.

- AST

The Cil-AST and CLang-AST representations have the strictest requirements. Manipulation at the AST level requires that the source code be written in C (for Cil) or a C family language (for CLang) and be available. To express AST programs as executables the entire build toolchain of the software must be available.

- ASM and LLVM

The ASM and LLVM representations require that the assembler or LLVM IR compiled from the original program be available. To express these programs, the linking portion of a project’s build toolchain must be available. Any language whose compiler is capable of emitting and linking intermediate machine code or LLVM IR can use this representation level, making these levels more broadly applicable than the AST representations.

In some cases, re-working a complex software project’s build toolchain to emit and re-read intermediate ASM or LLVM IR is not straightforward because not all compilers support such operations directly (e.g., g++, the C++ front end of the GCC compiler collection).

- ELF

Any ELF file can be used as input for the ELF program representation. This level of representation does not require access to the source code of the original program. ELF representations can be serialized directly to disk for evaluation and do not require access to the original program’s build toolchain. For these reasons the ELF representation is helpful for modifying closed source executables for which no development access or support is available. An example application to a proprietary executable is given in Chapter 6.

3.2 Fitness Evaluation

Fitness evaluation in this context is a two-step process. The genotype must first be expressed as an executable (Section 3.2.1), and is then later run against a test suite so that the program phenotype can be evaluated (Section 3.2.2).

3.2.1 Expression

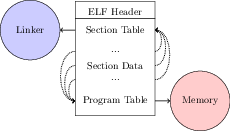

As with biological organisms, a program’s fitness is a property of its phenotype or behavior in the world. To assess the fitness of a program, its representation must first be expressed into a phenotype. Expression is shown in Figure 2 and varies by program representation as follows.

Figure 2: Expression of program representations to executables. Gray solid boxes represent traditional software engineering artifacts and red dashed boxes represent program representations. Each program representation modifies a different point in the process of compilation and linking of program code to an executable. In each case only those stages of compilation and linking which are downstream from a program representation are necessary for expression of that program representation.

- AST

The Cil and CLang AST representations are first serialized back to C-family source code. The CLang tooling produces formatted source code which is identical to the input source. This source code is then compiled and linked into an executable using the build toolchain of the original program.

Expression can fail if the compilation or linking processes fail, for example if an AST violates either type or semantic checks performed by the compiler, or references symbols that cannot be resolved by the linker. In these cases no executable is produced.

- ASM

The argumented assembler instructions constituting the ASM and LLVM genomes are serialized to a text file. This text code is then linked into an executable using the build toolchain of the original program.

Expression can fail if the linker fails. This is commonly caused by sequences of assembler instructions that are invalid or cannot be resolved by the linker. In this case no executable is produced.

- ELF

The ELF representation genome is composed of those sections of the ELF file that are loaded into memory during execution. These sections, along with the remainder of the ELF file, are serialized directly into an executable on disk. This process requires no external build tools.

Eliminating the compilation and linking steps for representations at the ASM and ELF levels increases the efficiency of evaluation as compared to the other levels. This can dramatically affect the efficiency of techniques using these lower levels (cf. runtime in Section 5.4).

3.2.2 Evaluation

Evaluation entails executing the program against a test suite. This execution might be evaluated for functional correctness, as in the applications presented in Chapters 4, 5 and 6, or for nonfunctional runtime properties, as in the application presented in Chapter 7.

During both functional and nonfunctional evaluation the programs that fail to express executables are assigned the worst possible fitness value, typically positive or negative infinity.
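The convention of assigning the worst fitness to variants that fail to express can be sketched as follows. This is an illustration only: it assumes a fitness that is maximized (so the worst value is negative infinity), models an expressed program as a Python callable, and treats a crash as a failed test. The names `fitness`, `WORST_FITNESS`, and the toy test suite are hypothetical.

```python
import math

WORST_FITNESS = -math.inf  # assumption: higher fitness is better


def fitness(program, tests):
    """Count passed tests; a variant that failed to express (None) is worst."""
    if program is None:
        return WORST_FITNESS
    passed = 0
    for test in tests:
        try:
            if test(program):
                passed += 1
        except Exception:
            pass  # assumption: a crash counts as a failed test
    return passed


# Hypothetical test suite for a sorting routine.
tests = [
    lambda sort: sort([3, 1, 2]) == [1, 2, 3],
    lambda sort: sort([]) == [],
    lambda sort: sort([2, 2, 1]) == [1, 2, 2],
]
```

For example, `fitness(sorted, tests)` counts all three tests as passed, while `fitness(None, tests)` returns the worst value without running any test.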

- Functional Evaluation

The goal of functional evaluation is to determine if the program behavior is correct or acceptable. This is determined by its ability to pass all of the tests in the test suite. Functional evaluation does not actually determine whether the evaluated program is semantically equivalent to the original program. In many cases a fully functional program variant computes a slightly different function, which is undetectable by the test suite.

Functional evaluation is not related to, nor does it provide any guarantee with respect to, any formal program specification. In every case discussed in this dissertation (and in the vast majority of real-world software) there is no written or formal program specification. In these cases, the program’s test suite serves as an informal specification. In the cases we present, excluding the repair of proprietary software in Chapter 6 for which no test suite is available, the test suite distributed with the program is used unaltered.

- Nonfunctional Evaluation

Nonfunctional evaluation assesses the desirability of the nonfunctional runtime properties of a program’s execution. Standard profiling tools are used to perform this evaluation. Section 7.1 presents a framework designed to optimize nonfunctional fitness functions. Section 8.2.1 describes additional software engineering tools that could be leveraged in future work to target other types of diverse fitness functions.

3.3 Software Mutational Robustness

Robustness is an important aspect of software engineering research, especially with respect to the reliability and availability of software systems. In contrast to these aforementioned types of phenotypic robustness, this section investigates a form of genotypic robustness which we call software mutational robustness. Software mutational robustness refers to the functionality of program variants, or instances of software whose genome has been randomly mutated.

Functionality is assessed using the program’s test suite as described in Section 3.2.2. We investigate the appropriateness of using program test suites to assess program functionality and find that in the majority of cases test suites serve as a useful proxy for a formal specification. We find that this result holds across a wide range of test-suite qualities—where quality is measured using statement and ASM instruction coverage. Borrowing a term from prior work in biology we call functional program variants neutral variants. Neutral variants continue to satisfy the requirements of the original program as defined by the test suite. But, they often differ from the original in minor functional properties such as the order of operations or behaviors left unspecified by the program requirements, and nonfunctional properties may differ from the original, e.g., run-time or memory consumption.

As a simple example, consider the following fragment of a recursive quick-sort implementation.

    if (right > left) {
      // code elided ...
      quick(left, r);
      quick(l, right);
    }

Swapping the order of the last two statements like so,

    quick(l, right);
    quick(left, r);

or even running the recursive steps in parallel does not change the output of the program, but it does change the program’s run-time behavior. We find that examples of such neutral changes to programs are commonly and easily discovered through automated random program mutation.

This section provides empirical measurement of software mutational robustness collected across a wide range of real-world software spanning 22 programs comprising over 150,000 lines of code and 23,151 tests. These programs are broken into three broad categories according to the properties of the test-suite. We find an average software mutational robustness of 36.8% and minimum software mutational robustness of 21.2%. We see little variance in software mutational robustness across categories or programs, and we find that the levels of mutational robustness are not explained by the quality of a program’s test suite. Given these results we surmise that software is inherently mutationally robust.

3.3.1 Experimental Design

This section defines software mutational robustness and describes the techniques used to measure the software mutational robustness of a number of benchmark programs. It will also describe the benchmark programs and their related test suites.

3.3.1.1 Software Mutational Robustness

The formal definition of software mutational robustness is given in Equation 1. It is a property of a triplet consisting of a software program \(P\), a set of mutation operations \(M\), and a test suite \(T : \mathcal{P} \rightarrow \{ \mathsf{true}, \mathsf{false} \}\). Software mutational robustness, written \(\mathit{MutRB}(P,T,M)\), is the fraction of the variants \(P'=m(P)\), \(m \in M\), for which \(t(P')\) (variant \(P'\) passes test \(t\)) is true \(\forall t \in T\), i.e., for which \(T(P') = \mathsf{true}\). For any variant \(P'\) which cannot be successfully expressed as an executable, \(t(P')\) is false \(\forall t \in T\), so such variants count in the denominator of \(\mathit{MutRB}(P,T,M)\) but not in the numerator.

\begin{equation} \mathit{MutRB}(P,T,M) = \frac {|\{ P' ~|~ m \in M.~ P' = m(P) ~\wedge~ T(P') = \mathsf{true} \}|} {|\{ P' ~|~ m \in M.~ P' = m(P) \}|} \end{equation}

3.3.1.2 Measurement of Software Mutational Robustness

In all experiments we use the generic mutation operations described in Section 3.1.2 with equal probability. We evaluate mutational robustness using the Cil-AST and ASM program representations. All of the test suites used are those distributed with the programs \(P\), and are described in more detail along with the description of the benchmark programs in Section 3.3.1.3.

Since exhaustive evaluation of all possible first-order variants (i.e., variants resulting from the application of a single mutation to the original program) is prohibitively expensive (cf. Number of neighbors Section 3.5.2), the following technique is used to estimate the mutational robustness of each benchmark program.

1. The original program is run on its test suite and each AST node or ASM instruction executed by the test suite is identified. AST identifying information is collected by instrumenting each AST node to print an identifier during execution. ASM identifying information is collected by using a simple ptrace-based utility4 to collect the values of the program counter during execution. These program counter values are converted into offsets into the program data where they identify specific argumented instructions in the ASM genome.

2. A total of 200 unique variants are generated using each of the three mutation operations for a grand total of 600 unique program variants. Mutation operations are applied uniformly at random along the traces collected in Step 1. Mutations are limited to portions of the programs exercised by the test suite in order to avoid overestimating software mutational robustness by including changes in untested portions of the program.

3. At the AST level, variants are considered unique if they produce different assembler when compiled with gcc -O2. Non-unique variants are discarded and do not contribute to either the denominator or the numerator of the \(MutRB\) fraction to avoid overestimating mutational robustness by counting obviously equivalent mutants as neutral variants. To avoid overestimating mutational robustness, variants which fail to compile are all considered unique and are added to the denominator of the \(MutRB\) fraction.

4. Each successfully compiled unique variant is evaluated using the program test suite. Time to execute the test suite is limited to within an order of magnitude of the time taken by the original program to complete the suite. Variants which exceed the time limit (e.g., because of infinite loops) are treated as having failed the test. Only variants that pass every test in the test suite are counted as neutral and added to the numerator of the \(MutRB\) fraction.

The fraction of unique variants that successfully compile and pass every test in the test suite within the given resource limitations is reported as the software mutational robustness.
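The estimation procedure can be sketched in a few lines. This is a toy illustration under loudly stated assumptions, not the experimental harness: all names are hypothetical, the genome is a list of integers, "expression" always succeeds for non-empty variants, the test suite checks only that the elements sum to the original total, and uniqueness is approximated by comparing genomes directly rather than comparing compiled assembler. A variant identical to the original is also treated as non-unique here.

```python
import random


def estimate_mutrb(genome, covered, mutations, express, passes_all_tests,
                   n_per_op, rng, max_tries=2000):
    """Estimate MutRB as (neutral unique variants) / (unique variants)."""
    original = tuple(genome)
    seen = set()
    neutral = total = 0
    for mutate in mutations:
        produced = tries = 0
        while produced < n_per_op and tries < max_tries:
            tries += 1
            variant = tuple(mutate(list(genome), covered, rng))
            if variant == original or variant in seen:
                continue  # non-unique: counts in neither numerator nor denominator
            seen.add(variant)
            produced += 1
            total += 1  # every unique variant counts in the denominator
            program = express(variant)  # None models a failed expression
            if program is not None and passes_all_tests(program):
                neutral += 1
    return neutral / total if total else 0.0


def delete_at_covered(genome, covered, rng):
    idx = rng.choice(covered)
    return genome[:idx] + genome[idx + 1:]


def swap_at_covered(genome, covered, rng):
    i, j = rng.sample(covered, 2)
    variant = list(genome)
    variant[i], variant[j] = variant[j], variant[i]
    return variant


toy_genome = [3, 0, 4, 0, 5]
covered = list(range(len(toy_genome)))  # assume the tests exercise every element
estimate = estimate_mutrb(
    toy_genome, covered, [delete_at_covered, swap_at_covered],
    express=lambda v: list(v) if v else None,
    passes_all_tests=lambda program: sum(program) == 12,
    n_per_op=200, rng=random.Random(0))
print(estimate)
```

In this toy setting the space of first-order variants is tiny, so the sampling loop effectively enumerates it: the 5 unique deletions (2 neutral) and 9 unique swaps (all neutral) give 11 neutral variants out of 14. Real programs have far more first-order variants than can be enumerated, which is why the procedure above samples a fixed number per operator.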

3.3.1.3 Benchmark Programs and Test Suites

Our investigation includes 22 real-world software programs listed in Table~\ref{mutational-robustness-results}. The programs cover three groups including 14 open-source systems programs,5 four sorting programs,6 and four programs taken from the Siemens Software-artifact Infrastructure Repository.7

The systems programs were chosen to represent the low- to middle-quality test suites which are typical in software development. The test suites used to evaluate the mutational robustness of these programs are those maintained by the software developers and distributed with the programs.

The sorting programs were taken from Rosetta Code,8 and were selected to be easily testable. We hand-wrote a single test suite to cover all four sorting algorithms.9 The test suite covers every branch in the AST and exercises every executable assembler instruction in the assembler compiled from the program using gcc -O2.

The Siemens programs were selected to represent the best attainable test suites. These programs were created by Siemens Research [73], and later their test suites were extended by Rothermel and Harold until each “executable statement, edge, and definition-use pair in the base program or its control flow graph was exercised by at least 30 tests” [153]. Also among the Siemens programs, the space test suite was created by Vokolos [177] and later enhanced by Graves [61]. The resulting space test suite covers every edge in the control flow graph with at least 30 tests.

3.3.2 Results

We report the rate of mutational robustness (Section 3.3.2.1), an analysis of mutational robustness by test suite quality (Section 3.3.2.2), a taxonomy of neutral variants (Section 3.3.2.3), an evaluation of mutational robustness across multiple languages (Section 3.3.2.4) and an evaluation of mutational robustness across all five program representations (Section 3.3.2.5).

3.3.2.1 Mutational Robustness Rates

Table~\ref{mutational-robustness-results} lists estimated mutational robustness for each of the 22 benchmark programs.

Across all programs and representations we find an average mutational robustness of 36.8% and minimum software mutational robustness of 21.2%. These values are much higher than what might be predicted by those who view software as fundamentally fragile. They suggest that for real-world programs there are large numbers of alternate implementations which may easily be discovered through the application of random program mutations. Although actual rates of mutational robustness in biological systems are not available, these rates of software mutational robustness are in the range of mutational robustness thought to support evolution in biological systems [46].

There is little variance in mutational robustness across all software projects despite a large variance in the quality of test suites. At one extreme, even high-quality test suites (such as the Siemens benchmarks, which were explicitly designed to test all execution paths) and test suites with full statement, branch and assembly instruction coverage have over 20% mutational robustness. At the other extreme, a minimal test suite that we designed for bubble sort, which does not check program output but requires only successful compilation and execution without crash, has only 84.8% mutational robustness. This suggests that software mutational robustness is an inherent property of software and is not a direct measurement of the quality of the test suite.

3.3.2.2 Mutational Robustness by Test Suite Quality

Figure 2 shows the mutational robustness of the 22 benchmark programs broken into groups by the type and quality of test suite. The differences in test suites are qualitative in the source of the test suites and quantitative in the amount of coverage.

Figure 2: Mutational robustness by test suite quality. The simple sorting programs in~\ref{rb-class-sort} have complete AST node and ASM instruction coverage. The Siemens programs in~\ref{rb-class-siemens} have extremely high quality test suites incrementally developed by multiple software testing researchers, including complete branch and def-use pair coverage. The Systems programs in~\ref{rb-class-open} have test suites that vary greatly in quality.

Qualitatively:

- Sorting

(Panel~\ref{rb-class-sort}) The sorting programs all share a single test suite. This test suite leverages the simplicity of sorting program specification and implementations to provide complete coverage with only ten tests.

- Siemens

(Panel~\ref{rb-class-siemens}) The Siemens test suites were taken from the testing community where they have been developed by multiple parties across multiple publications until each executable statement and definition-use pair was exercised by at least 30 tests [73,153].

- Systems

(Panel~\ref{rb-class-open}) The systems test suites are taken directly from real-world open-source projects. These test suites are those used by the software developers to control their own development and consequently reflect the wide range of test suites used in practice.

Quantitatively the sorting test suite provides 100% code coverage at both the AST node and ASM instruction levels. The Siemens programs provide near 100% coverage and the systems programs provide 37.63% coverage on average, with a large standard deviation of 19.34%.

Despite the large difference in provenance and quality, the mutational robustness between panels differs by relatively little as shown in Table 1. The average values are within 17 percentage points of each other, and the minimums of each group differ by less than 1 percentage point.

| | Sorting | Siemens | Systems |
|---|---|---|---|
| Average Mut. Robustness | 26.7% | 29.8% | 43.7% |
| Minimum Mut. Robustness | 21.2% | 21.2% | 22.1% |

3.3.2.3 Taxonomy of Neutral Variants

To investigate the significance of the differences between neutral variants and the original program we manually investigated and categorized 35 neutral variants of the bubble sorting algorithm. Bubble sort is chosen for ease of manual inspection because of its simplicity of specification and of implementation.

We first generated 35 AST level random neutral variants of bubble sort. The phenotypic traits of these variants were then manually compared to the original program. The results are grouped into a taxonomy of seven categories as shown in Table 2.

| Number | Category | Frequency |
|---|---|---|
| 1 | Different whitespace in output | 12 |
| 2 | Inconsequential change of internal variables | 10 |
| 3 | Extra or redundant computation | 6 |
| 4 | Equivalent or redundant conditional guard | 3 |
| 5 | Switched to non-explicit return | 2 |
| 6 | Changed code is unreachable | 1 |
| 7 | Removed optimization | 1 |

The categories are listed in decreasing order of frequency. Only category 1 and some of the variants in category 5 affected the output of the program, either by changing what is printed to STDOUT or by changing the final ERRNO return value. Both affect program output in ways that are not controlled by the program specification or the test suite.

While none of the remaining five categories affected program output, all but category 6 and some members of category 4 affected the runtime behavior of the program. Category 2 includes the removal of unnecessary variable assignments, re-ordering non-interacting instructions and changing state that is later overwritten or never again read.

Many of the changes, especially in categories 2, 3, and 4, produce programs that will likely be more mutationally robust than the original program. These include changes which insert redundant and occasionally diverse control flow guards (e.g., conditionals that control if statements) as well as changes that introduce redundant variable assignments.

The majority of the neutral variants included in this analysis are semantically distinct from the original program. Only variants in categories 4, 5 and 6 could possibly have no impact on runtime behavior and could be considered semantically equivalent. Instead, the majority of neutral variants appear to be valid alternate implementations of a program specification. In some cases these alternate implementations might have desirable properties such as increased efficiency through the removal of unnecessary code or increased robustness (e.g., the addition of new diverse conditional guards). Subsequent work by Baudry further explores the computational diversity of neutral variants [14], and finds sufficient computational diversity to support moving target defense [79].

3.3.2.4 Mutational Robustness across Multiple Languages

To address the question of whether these results depend upon the idiosyncrasies of a particular paradigm, we evaluate the mutational robustness of ASM level programs compiled from four languages spanning three programming paradigms (imperative, object-oriented, and functional). The results are presented in Table 3. The uniformity of mutational robustness across languages and paradigms demonstrates that the results do not depend on the particulars of any given programming language.

| | C | C++ | Haskell | OCaml | Avg. |
|---|---|---|---|---|---|
| Paradigm | Imp. | Imp. & OO | Fun. | Fun. & OO | |
| bubble | 25.7 | 28.2 | 27.6 | 16.7 | 24.6±5.3 |
| insertion | 26.0 | 42.0 | 35.6 | 23.7 | 31.8±8.5 |
| merge | 21.2 | 46.0 | 24.9 | 22.7 | 28.7±11.6 |
| quick | 25.5 | 42.0 | 26.3 | 11.4 | 26.3±12.5 |
| Avg. | 24.6±2.3 | 39.5±7.8 | 28.6±4.8 | 18.6±5.7 | 27.9±3.1 |
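The summary cells of Table 3 can be rechecked with a few lines of Python. The sketch below assumes that the ± values are sample standard deviations; that is an inference from the numbers themselves, not something the text states. The names `ROBUSTNESS` and `summarize` are illustrative only.

```python
from statistics import mean, stdev

# Per-language robustness values copied from Table 3 (C, C++, Haskell, OCaml).
ROBUSTNESS = {
    "bubble":    [25.7, 28.2, 27.6, 16.7],
    "insertion": [26.0, 42.0, 35.6, 23.7],
    "merge":     [21.2, 46.0, 24.9, 22.7],
    "quick":     [25.5, 42.0, 26.3, 11.4],
}

def summarize(values):
    """Return (mean, sample standard deviation) of a list of values.

    Assumption: the table's ± is a sample standard deviation (n - 1).
    """
    return mean(values), stdev(values)

if __name__ == "__main__":
    for name, values in ROBUSTNESS.items():
        m, s = summarize(values)
        print(f"{name:10s} {m:.1f} ± {s:.1f}")
```

Under this assumption the recomputed row summaries agree with the "Avg." column to within rounding.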

3.3.2.5 Mutational Robustness across Multiple Representations

This section compares the mutational robustness of the four sorting programs implemented in C across all five program representations. The tests and program implementations used in this section are available online,10 as well as the code used to run the experiment,11 and the analysis.12

Each of the four sorting algorithms (bubble, insertion, merge, and quick) is represented using each of the five program representations (CLang, Cil, LLVM, ASM, and ELF). Each of the resulting 20 program representations is then randomly mutated and evaluated 1000 times.
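The measurement itself can be sketched in a few lines. The following is a toy illustration under loud assumptions: the "program" is a small sorting network stored as a list of compare-exchange pairs, which is not one of the five representations above, and the copy, delete, and swap operators and the ten test inputs are hypothetical stand-ins chosen only to make the sketch runnable.

```python
import random

# Toy stand-in for a program (an assumption, not CLang/Cil/LLVM/ASM/ELF):
# a bubble-sort network on 4 values, one compare-exchange pair per element.
ORIGINAL = [(0, 1), (1, 2), (2, 3), (0, 1), (1, 2), (0, 1)]

# Hypothetical test suite of 10 inputs.
TESTS = [
    [3, 2, 1, 0], [0, 1, 2, 3], [1, 0, 3, 2], [2, 3, 0, 1], [3, 0, 2, 1],
    [1, 3, 0, 2], [2, 0, 1, 3], [0, 3, 2, 1], [3, 1, 0, 2], [1, 2, 3, 0],
]

def run(program, values):
    """Apply each compare-exchange pair in order and return the result."""
    out = list(values)
    for i, j in program:
        if out[i] > out[j]:
            out[i], out[j] = out[j], out[i]
    return out

def fitness(program):
    """Number of test inputs the program sorts correctly."""
    return sum(run(program, t) == sorted(t) for t in TESTS)

def mutate(program, rng):
    """Apply one random copy, delete, or swap to a copy of the program."""
    variant = list(program)
    # Very short programs can only grow, so the list never becomes empty.
    op = "copy" if len(variant) < 2 else rng.choice(["copy", "delete", "swap"])
    if op == "copy":
        variant.insert(rng.randrange(len(variant) + 1), rng.choice(variant))
    elif op == "delete":
        del variant[rng.randrange(len(variant))]
    else:
        a, b = rng.sample(range(len(variant)), 2)
        variant[a], variant[b] = variant[b], variant[a]
    return variant

def mutational_robustness(program, trials=1000, seed=0):
    """Percentage of single random mutations that leave fitness unchanged."""
    rng = random.Random(seed)
    target = fitness(program)
    neutral = sum(fitness(mutate(program, rng)) == target for _ in range(trials))
    return 100.0 * neutral / trials

if __name__ == "__main__":
    print(f"robustness: {mutational_robustness(ORIGINAL):.1f}%")
```

The value printed for this toy has no bearing on the figures in Table 4; the sketch only shows the shape of the procedure (mutate once, run the tests, count the variants that still pass).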

| Representation | Software mutational robustness |
|---|---|
| CLang | 3.12 |
| Cil | 20.95 |
| LLVM | 35.05 |
| ASM | 35.23 |
| ELF | 15.78 |

Table 4 shows the average software mutational robustness across all four sorting algorithms broken out by representation. The highest level representation is CLang, which has by far the lowest level of software mutational robustness. The low mutational robustness of the CLang representation is likely an effect of the immaturity of the CLang representation and transformations. It is possible that these transformations do not accurately implement the intent of the program transformation operations. For example, an experienced CLang developer would likely produce functionally different delete or copy transformations of CLang ASTs than those defined in the library used herein.13

In general, the lower the level of program representation, the higher the software mutational robustness. The sole exception is the ELF representation, which is fragile due to the need to maintain the overall genome length and the inability to update literal program offsets.

Figure 2: Fitness distributions of first order mutations of sorting algorithms by program representation.

Figure 2 shows the distribution of fitness values across all levels of representation. Each fitness is equal to the number of inputs sorted correctly from a test suite of 10 inputs designed to cover all branches in each sorting implementation. As is the case in biological systems [122], the fitness distribution is bi-modal with one peak at completely unfit variants and another peak at neutral variants. This may help explain the relative stability of software mutational robustness across test suites of varying qualities found in Section 3.3.2.2.

In both biological and computational systems the bi-modal fitness distribution may be beneficial, because partially fit solutions are often particularly pernicious (cf. anti-robustness [42]). Cancer cells in biological systems, which are not neutral, are able to survive and even thrive, and unfit variants in computational systems that are able to pass incomplete test suites are the most likely to cause problems for end users. It is not yet clear whether similar causes underlie this bi-modal distribution in biological and computational systems.

3.4 Software Neutral Networks

3.4.1 Span of Neutral Networks

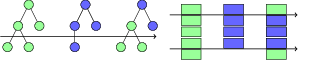

The previous experiments measured the percentage of first-order mutations that are neutral. This subsection explores the effects of accumulating successive neutral mutations in a small assembly program. We begin with a working assembly code implementation of insertion sort. We apply random mutations using the ASM representation and mutation operations. After each mutation, the resulting variant is retained if neutral, and discarded otherwise. The process continues until we have collected 100 first-order neutral variants. The mean program length and mutational robustness of the individuals in this population are shown as the leftmost red and blue points, respectively, in Figure~\ref{neut-rand-no-limit}. From these 100 neutral variants, we then generate a population of 100 second-order neutral variants. This is accomplished by looping through the population of first-order mutants, randomly mutating each individual once, retaining the result if it is neutral and discarding it otherwise. Once 100 neutral second-order variants have been accumulated, the procedure is iterated to produce higher-order neutral variants. This process produces neutral populations separated from the original program by successively more neutral mutations. Figure 2 shows the results of this process up to 250 steps, producing a final population of 100 neutral variants, each of which is 250 neutral mutations away from the original program.
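The generational procedure described above can be sketched as follows. This is a minimal illustration, not the experimental code: the sorting-network "program", the mutation operators, the test inputs, and the names `next_generation` and `neutral_walk` are all assumptions introduced to make the loop concrete.

```python
import random

# Toy stand-in (an assumption, not the ASM representation): a "program" is a
# sorting network on 4 values, stored as a list of compare-exchange pairs.
ORIGINAL = [(0, 1), (1, 2), (2, 3), (0, 1), (1, 2), (0, 1)]
TESTS = [[3, 2, 1, 0], [1, 0, 3, 2], [2, 3, 0, 1], [3, 0, 2, 1], [0, 3, 2, 1]]

def is_neutral(program):
    """A variant is neutral if it still sorts every test input."""
    for test in TESTS:
        out = list(test)
        for i, j in program:
            if out[i] > out[j]:
                out[i], out[j] = out[j], out[i]
        if out != sorted(test):
            return False
    return True

def mutate(program, rng):
    """Apply one random copy, delete, or swap (hypothetical operators)."""
    variant = list(program)
    op = "copy" if len(variant) < 2 else rng.choice(["copy", "delete", "swap"])
    if op == "copy":
        variant.insert(rng.randrange(len(variant) + 1), rng.choice(variant))
    elif op == "delete":
        del variant[rng.randrange(len(variant))]
    else:
        a, b = rng.sample(range(len(variant)), 2)
        variant[a], variant[b] = variant[b], variant[a]
    return variant

def next_generation(population, pop_size, rng):
    """Loop over the population, mutating each member once per pass and
    keeping only neutral results, until pop_size variants are collected."""
    offspring = []
    while len(offspring) < pop_size:
        for parent in population:
            child = mutate(parent, rng)
            if is_neutral(child):
                offspring.append(child)
                if len(offspring) == pop_size:
                    break
    return offspring

def neutral_walk(original, pop_size=100, steps=250, seed=0):
    """Return the final population and the mean program size per step."""
    rng = random.Random(seed)
    population, mean_sizes = [original], []
    for _ in range(steps):
        population = next_generation(population, pop_size, rng)
        mean_sizes.append(sum(map(len, population)) / len(population))
    return population, mean_sizes

if __name__ == "__main__":
    final, sizes = neutral_walk(ORIGINAL, pop_size=100, steps=25)
    print(f"mean size after {len(sizes)} steps: {sizes[-1]:.1f}")
```

Every member of generation `k` is exactly `k` accepted mutations away from the original, because each child is produced from a member of the previous generation by a single mutation.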

Figure 2: Random walk in neutral landscape of ASM variations of Insertion Sort.

The results show that under this procedure software mutational robustness increases with the mutational distance away from the original program. This is not surprising given that at each step mutationally robust variants are more likely to produce neutral mutants more quickly, and the first 100 neutral mutants generated are included in the subsequent population. We conjecture that this result corresponds to the population drifting away from the perimeter of the program’s neutral space. Similar behavior has been described for biological systems, where populations in a constant environment experience a weak evolutionary pressure for increased mutational robustness [176,180]. The average size of the program also increases with mutational distance from the original program (Figure~\ref{neut-rand-no-limit}), suggesting that the program might be achieving robustness by adding “bloat” in the form of useless instructions [147]. To control for bloat, Figure~\ref{neut-rand-limit} shows the results of an experiment in which only individuals that are the same size or smaller (measured in number of assembly instructions) than the original program are counted as neutral. With this additional criterion, software mutational robustness continues to increase but the program size periodically dips and rebounds, never exceeding the size of the original program. The dips are likely consolidation events, where additional instructions are discovered that can be eliminated without harming program functionality.

This result shows that not only are there large neutral spaces surrounding any given program implementation (in this instance, permitting neutral variants as far as 250 edits removed from a well-tested \(<200\) LOC program), but they are easily traversable through iterative mutation. Section 3.5 analytically explores the possible sizes of these neutral spaces. Figure~\ref{neut-rand-limit} shows a small increase in mutational robustness even when controlling for bloat. Further experimentation will determine if these results generalize to a more diverse set of program subjects.

3.4.2 Higher Order Neutral Mutants

The previous section demonstrates the ability of automated techniques to explore neutral networks by continually applying single mutations to neutral variants. While this demonstrates the span of neutral networks far from an original program, it does not address questions of the density of neutral variants in the space of all possible programs (cf. program space Section 3.5).

To address this question, we apply multiple compounding random mutation operations to an individual without performing intervening checks for neutrality. Such higher-order random variants take random walks away from the original program in the space of all possible programs. We then measure the percentage of these higher-order variants that are neutral at different distances from the original program to see how the rate of neutral variants changes with mutational distance.

We compare the rate of neutral variants along random walks to the rate of neutral variants found along neutral walks which use the fitness function to ensure the walk remains within the program’s neutral network as in Section 3.4.1. This empirically confirms the utility of fitness functions in the search for neutral variants, as opposed to suggested alternatives such as random search [65].

Finally we manually examine some interesting higher-order neutral variants which have non-neutral ancestors, and discuss the implication of the low rate of such variants discovered through random search.

3.4.2.1 Experimental Design

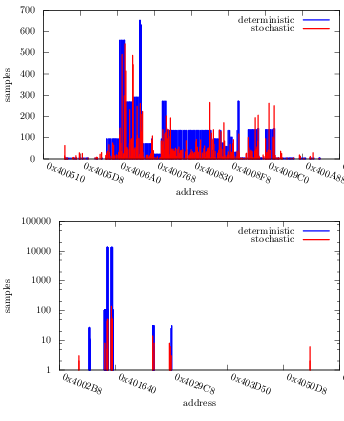

Using the ASM representation, an initial population of \(2^{10}\) first-order neutral variants is generated from an implementation of quicksort in C.14 This population is allowed to drift (Section 2.1) by iteratively selecting individuals from the population, mutating them, and inserting only the neutral results back into the population. The result is a neutral exploration which maintains the population size of \(2^{10}\) until a fitness budget of \(2^{18}\) total fitness evaluations has been exhausted. The fitness and the mutational path from the original program are saved for every tested variant, including non-neutral variants.

The distribution of the number of individuals tested at each number of mutations from the original during the neutral search is saved. A comparison population of \(2^{18}\) random higher-order variants is generated by repeatedly drawing from the distribution of mutational distances resulting from the neutral search, and for each drawn distance generating a random higher-order variant with the same order, or mutational distance from the original. This results in two collections of \(2^{18}\) variants with the same distribution of mutational distances from the original program, one generated through neutral search and the other through random walks. These distributions are shown in Figure 2.
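The construction of the comparison population can be sketched as below. This is a hedged illustration: the sorting-network "program", the mutation operators, and the function names are hypothetical, and `neutral_distances` stands for the list of mutational distances recorded during the neutral search.

```python
import random
from collections import Counter

# Toy stand-in (an assumption, not the ASM quicksort): a sorting network on
# 4 values, stored as a list of compare-exchange pairs.
ORIGINAL = [(0, 1), (1, 2), (2, 3), (0, 1), (1, 2), (0, 1)]
TESTS = [[3, 2, 1, 0], [1, 0, 3, 2], [2, 3, 0, 1], [3, 0, 2, 1], [0, 3, 2, 1]]

def is_neutral(program):
    """A variant is neutral if it still sorts every test input."""
    for test in TESTS:
        out = list(test)
        for i, j in program:
            if out[i] > out[j]:
                out[i], out[j] = out[j], out[i]
        if out != sorted(test):
            return False
    return True

def mutate(program, rng):
    """Apply one random copy, delete, or swap (hypothetical operators)."""
    variant = list(program)
    op = "copy" if len(variant) < 2 else rng.choice(["copy", "delete", "swap"])
    if op == "copy":
        variant.insert(rng.randrange(len(variant) + 1), rng.choice(variant))
    elif op == "delete":
        del variant[rng.randrange(len(variant))]
    else:
        a, b = rng.sample(range(len(variant)), 2)
        variant[a], variant[b] = variant[b], variant[a]
    return variant

def random_variant(original, order, rng):
    """Apply `order` mutations in a row with no neutrality check in between."""
    variant = original
    for _ in range(order):
        variant = mutate(variant, rng)
    return variant

def matched_random_sample(original, neutral_distances, n, seed=0):
    """Draw n distances from the empirical distribution seen in the neutral
    search and build one random higher-order variant per drawn distance."""
    rng = random.Random(seed)
    sample = []
    for _ in range(n):
        order = rng.choice(neutral_distances)
        sample.append((order, random_variant(original, order, rng)))
    return sample

def neutrality_by_order(sample):
    """Fraction of neutral variants at each mutational distance."""
    tested, neutral = Counter(), Counter()
    for order, variant in sample:
        tested[order] += 1
        neutral[order] += is_neutral(variant)
    return {order: neutral[order] / tested[order] for order in sorted(tested)}

if __name__ == "__main__":
    distances = [1, 1, 2, 2, 3, 4]  # made-up distances, for illustration only
    rates = neutrality_by_order(matched_random_sample(ORIGINAL, distances, 2000))
    for order, rate in rates.items():
        print(order, f"{rate:.3f}")
```

Sampling with `rng.choice` over the raw list of recorded distances reproduces their empirical distribution without fitting any model, which keeps the two collections comparable distance by distance.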

Figure 2: The distribution of mutational distance from the original for populations of higher-order variants collected using two methods: random walks and guided neutral walks.

The software and input data used to perform this experiment are available online.15 The analysis is also available online.16

3.4.2.2 Neutrality of Random Higher-Order Neutral Variants

Figure 2: Neutrality of random higher-order mutants by the number of mutations from the original.

As shown in Figure 2 the experimental levels of neutrality at two, three, and four random mutations removed from the original almost exactly match an exponential function decreasing at a rate equal to the mutational robustness of the original program. The highest order random neutral variant found in this experiment was 8 mutations removed from the original.

Exponential decay models the number of higher-order neutral variants which will be found through random walks which are neutral at every intermediate step. The very high correlation between exponential decay and the rate of neutrality decrease along random walks indicates that most higher-order neutral variants are the product of neutral lower-order ancestors. This may be an effect of the very high dimensionality of program spaces (cf. dimensionality Section 3.5), or of the difference in effective dimensionality between the neutral space and the program space.
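The exponential model can be written out explicitly. As a simplifying assumption (not a result of the experiment), suppose that each random mutation applied to a neutral variant is itself neutral with a fixed probability \(r\), the mutational robustness of the original program, and that a variant can be neutral only if all of its intermediate ancestors were neutral. The notation \(N(n)\), for the fraction of neutral variants at \(n\) mutations from the original, is introduced here for illustration only.

\[
N(n) \approx r^{n}, \qquad \frac{N(n+1)}{N(n)} \approx r .
\]

For a hypothetical \(r = 0.3\) this would give \(N(2) = 0.09\), \(N(3) = 0.027\), and \(N(4) = 0.0081\). Under this model, neutral variants with non-neutral ancestors would show up as a surplus over \(r^{n}\), so a close match to the curve suggests that such variants are rare.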

3.4.2.3 Comparative Neutrality along Random and Guided Walks

Figure 2 shows the comparative neutrality by mutational distance up to 100 mutations from the original program for both random and guided walks through program space. Along random walks, neutrality decays rapidly with mutational distance (Section 3.4.2.2). By contrast, the neutrality increases along guided neutral walks, which agrees with the results presented in Section 3.4.1. The sheer increase in viable higher-order neutral variants found through neutral search as compared to random search (28,055 and 1,342, respectively) supports the utility of a fitness function in guiding the search for higher-order neutral variants.

Figure 2: The neutrality by mutational distance from the original program for both guided neutral and random walks through program space.

3.4.2.4 Analysis of Interesting Random Higher-Order Neutral Variants

Higher-Order Neutral Variants

| Order | Total | Interesting | Percentage |
|---|---|---|---|
| 2 | 33787 | 139 | 0.41% |
| 3 | 12458 | 153 | 1.23% |
| 4 | 4478 | 107 | 2.39% |
| sum | 50723 | 399 | 0.79% |

Table 5 shows the percentage of a large collection of over 50,000 randomly generated higher-order neutral variants which are “interesting,” meaning that they have ancestors which were not themselves neutral. These variants are the results of random walks that wander off of the neutral network, and then subsequently wander back on. As can be seen the majority of randomly generated neutral variants are the result of random walks that never leave the neutral network. This agrees with the results found in Figure 2.

Note that the percentage of neutral variants which are interesting (column “Percentage” in Table 5) increases as the order increases because the number of random intermediate steps increases, each of which has a chance of being non-neutral.

The interesting variants may be further separated into those whose step back onto the neutral network is a reversion of a previous mutation (i.e., a “step back”), and those that return to a different position in the neutral network than the spot of their exit. We find that roughly half (\(\frac{199}{399}\)) are not the result of such a reversion or “step back”. Of these, many have fitness histories in which their ancestors’ fitness values drop to zero or near zero and then slowly climb or suddenly jump back to neutral.

Such cases of random walks which leave and then re-enter the neutral network in a new place may actually find portions of the neutral network which are unreachable or are very far removed from the original program when restricted to neutral walks. The extreme size of these networks makes this possibility difficult to determine experimentally.

Although these results indicate that it is possible to find new (possibly unconnected) portions of the neutral network using random walks, such interesting random walks remain very rare. With 6% of random higher-order variants between 2 and 4 mutations removed from the original being neutral, 0.79% of those being interesting, and roughly 50% of those not being reversions, we find that \(0.06 \times 0.0079 \times 0.5 = 0.0002\), or 0.02%, of all randomly generated higher-order mutants are truly interesting. This result motivates the technique used in this chapter and in Chapter 4 of restricting explorations of program space to neutral networks, in which functional neutrality is used as the acceptance criterion for every step in the walk.
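The estimate above can be rechecked from the unrounded counts. The sketch below takes the 6% neutral rate from the text, the totals from Table 5, and the 199 non-reversion variants from the preceding discussion; the variable and function names are illustrative.

```python
# Counts taken from Table 5 and the surrounding text.
NEUTRAL_RATE = 0.06        # share of random variants (orders 2 to 4) that are neutral
TOTAL_NEUTRAL = 50723      # randomly generated higher-order neutral variants
INTERESTING = 399          # neutral variants with at least one non-neutral ancestor
NON_REVERSION = 199        # interesting variants that are not a "step back"

def truly_interesting_rate():
    """Fraction of all random higher-order variants that are neutral,
    interesting, and not a reversion of an earlier mutation."""
    interesting_rate = INTERESTING / TOTAL_NEUTRAL
    non_reversion_rate = NON_REVERSION / INTERESTING
    return NEUTRAL_RATE * interesting_rate * non_reversion_rate

if __name__ == "__main__":
    print(f"{truly_interesting_rate():.4%}")
```

Using the exact counts rather than the rounded percentages changes only the later digits; the result still rounds to roughly 0.02%.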