November 22, 2013

Network Analysis and Modeling (CSCI 5352)

This semester I developed and taught a new graduate-level course on network science, titled Network Analysis and Modeling (listed as CSCI 5352 here at Colorado). As usual, I lectured from my own set of notes, which total more than 150 pages of material. The class was aimed at graduate students, so the pace was relatively fast and I assumed they could write code, understood basic college-level math, and knew basic graph algorithms. I did not assume they had any familiarity with networks.

To some degree, the course followed Mark Newman's excellent textbook Networks: An Introduction, but I took a more data-centric perspective and covered a number of additional topics. A complete listing of the lecture notes is below, with links to the PDFs.

I also developed six problem sets, which, happily, the students tell me were challenging. Many of the problems were drawn from Newman's textbook, although often with tweaks to make them more suitable for this particular class. It was a fun class to teach, and overall I'm happy with how it went. The students were enthusiastic throughout the semester and engaged well with the material. I'm looking forward to teaching it again next year, and using that time to work out some of the kinks and add several important topics I didn't cover this time.

--

Lecture 1,2 : An introduction and overview, including representing network data, terminology, types of networks (pdf)

Lecture 3 : Structurally "important" vertices, degree-based centralities, including several flavors of eigenvector centrality (pdf)

Lecture 4 : Geometric centralities, including closeness, harmonic and betweenness centrality (pdf)

Lecture 5 : Assortative mixing of vertex attributes, transitivity and clustering coefficients, and reciprocity (pdf)

Lecture 6 : Degree distributions, and power-law distributions in particular (pdf)

Lecture 7 : Degree distributions, and fitting models, like the power law, to data via maximum likelihood (pdf)

Lecture 8 : How social networks differ from biological and technological networks, small worlds and short paths (pdf)

Lecture 9 : Navigability in networks (discoverable short paths) and link-length distributions (pdf)

Lecture 10 : Probabilistic models of networks and the Erdos-Renyi random graph model in particular (pdf)

Lecture 11 : Random graphs with arbitrary degree distributions (the configuration model), their properties and construction (pdf)

Lecture 12 : Configuration model properties and its use as a null model in network analysis (pdf)

Lecture 13 : The preferential attachment mechanism in growing networks and Price's model of citation networks (pdf)

Lecture 14 : Vertex copying models (e.g., for biological networks) and their relation to Price's model (pdf)

Lecture 15 : Large-scale patterns in networks, community structure, and modularity maximization to find them (pdf)

Lecture 16 : Generative models of networks and the stochastic block model in particular (pdf)

Lecture 17 : Network inference and fitting the stochastic block model to data (pdf)

Lecture 18 : Using Markov chain Monte Carlo (MCMC) in network inference, and sampling models versus optimizing them (pdf)

Lecture 19 : Hierarchical block models, generating structurally similar networks, and predicting missing links (pdf)

Lecture 20 : Adding time to network analysis, illustrated via a dynamic proximity network (pdf)

--

Problem set 1 : Checking the basics and a few centrality calculations (pdf)

Problem set 2 : Assortativity, degree distributions, more centralities (pdf)

Problem set 3 : Properties and variations of random graphs, and a problem with thresholds (pdf)

Problem set 4 : Using the configuration model, and edge-swapping for randomization (pdf)

Problem set 5 : Growing networks through preferential or uniform attachment, and maximizing modularity (pdf)

Problem set 6 : Free and constrained inference with the stochastic block model (pdf)

posted November 22, 2013 11:00 PM in Teaching

January 13, 2012

A crisis in higher education?

Attention conservation notice: 3200 cranky words on the PhD over-supply "crisis."

Higher education is always having crises, it seems. Some of this is probably a kind of marketing strategy, because genuinely serious problems are so systemic and slow-moving that it's easy to ignore them, or because you can't get people to pay attention in today's saturated media environment without a large dose of hyperbole. But, one "crisis" in particular did catch my attention over the past few years: the harsh market faced by relatively new PhDs seeking faculty jobs [0]. Nature did a full spread on the future of the PhD, The Economist weighed in with their own coverage, and I'm sure the Chronicle of Higher Education has done a number of stories on the topic. Now, Online PhD has done its own report, in the form of a slick infographic packed with grim factoids and numbers.

What most of these perspectives miss, and what makes some of their analysis a little shrill, is the historical context of higher education and its growth trajectory over the past 70 years [1]. The overall upward trend in PhD production over this time period can be blamed on huge increases in federal funding for research, on huge growth in the number of students getting undergrad degrees, on a vast broadening of higher education as a whole and on intensified competition between research-oriented universities.

The role of competition, I think, is underappreciated: many more universities now produce PhDs and many more programs are genuinely good than was the case before federal funding for higher education began surging after World War II. The result is a larger and more competitive market for those PhDs, especially the ones produced by the best programs. (The same is true for funding sources: the pie has grown, but the number of people competing for a slice has grown much faster.) In many ways, this is a good thing for higher education overall, since students can receive a good or even a great education at a wider variety of places. That is, higher education is probably genuinely less elitist and genuinely more accessible and inclusive. There's also a cost, however, in the brutal treatment that even successful candidates experience on the market and in the colossal waste of human potential from the many talented individuals who fail to find good jobs. (More on "good" jobs in a moment.)

That being said, increased production of PhDs doesn't necessarily mean increased competition. If the number of tenure-track faculty positions increases at the same rate as the production of PhDs, then in principle competition could remain flat. This point gets a lot of attention in the popular discussion and the argument is often that if only we could increase the number of tenure-track lines, everything would be great. But this obscures the complexity of the problem. First, faculty lines are largely paid for by undergraduate (or professional degree) tuition [2], so increasing the size of the faculty requires increasing the size of the undergraduate population, which has its own problems. [3] Second, part of the modern National Science Foundation's mandate is actually to overproduce graduate students [4], and this is largely at the behest of Congress [5]. Alternatively, we could solve the over-supply of PhDs by reducing the overall production, but this would negatively impact the amount of research being produced (since it's largely done by PhD students), the teaching of many departments (again, often done by PhD students) and would reduce the supply of highly educated individuals to non-academic professions.

Third, not all tenure-track positions are equally desirable, not all PhDs are equally competitive, and growth in the most desirable slots and most competitive people has not been uniform. This is a tricky problem to explain but let's put it this way: I would not be surprised to learn that the 10 best programs in the country (in any particular field) collectively produce enough PhDs each year to fill every advertised faculty line at every other university, even the not-so-great ones [6]. This means that the lack-of-tenure-track-jobs / overproduction-of-PhDs "crisis" is not one that everyone feels equally, which complicates the conclusion that it is universally a problem. In fact, a tight job market for faculty positions has some benefits, at least collectively. One is that lower-quality places can pick up relatively better qualified people than they would if the top-ranked departments had enough extra lines to hire all the top applicants. Over time, an over-supply of good PhDs may be necessary to raise the quality of the worst-performing institutions, although this effect may only be observable in the long run. [7]

Fourth, the history of higher education as an industry is a series of large expansions and contractions, and the effects of these are often felt and distributed unevenly [8]. Life and job prospects for faculty in expanding fields are good, but are hard during contractions. (These effects are surely amplified for young scholars and so one possibility would be better knowing and advertising the true employment prospects for graduates; but maybe not [9].) It's not entirely clear to me that academia is actually experiencing a contraction, despite the federal budget travails. A more truthful statement may be that higher education is restructuring, which brings us to the issue of "good" jobs versus "bad" jobs.

It's true that universities (at least in the US) are increasingly made up of two types of faculty, those either with or who are eligible for tenure ("tenure track"; a population that is, at best, growing fairly slowly) and those without or who can never receive tenure (teaching faculty, adjuncts, etc.). The latter group is much larger now than it used to be, but it's not very well integrated into the decision-making systems of universities, and this, I think, leads to some level of systemic abuse. In the long run, it seems likely that these groups will become better integrated into the decision-making system, which will reduce the abuse [10]. But a more interesting question, I think, is why has this population grown so much so recently?

The role that universities play in society is changing, and I think the growth of these lower-quality jobs reflects this shift. The US economy overall has shifted significantly toward service-sector jobs and the growth in adjunct and teaching positions at universities should probably be seen as the higher education equivalent. This may be driven in part by the commoditization of a bachelor's degree (which is primarily what non-tenure-track faculty help produce), which society has demanded and the universities have embraced (especially the big public universities and the non-endowed private colleges, where increased enrollment means increased tuition revenue). For their part, colleges and universities are figuring out that they can produce an apparently equivalent "product" at significantly lower cost by relying more heavily on non-tenure-track faculty [11,12]. It seems telling that losses of tenure-track lines are often at colleges and universities well below the "top tier", where the struggle for product differentiation and the negative consequences of price competition are likely stronger. So, it seems reasonable to expect growth in these "bad" jobs in places where the service rendered (education provided) is less specialized, e.g., entry- or lower-level undergraduate classes where the material is highly standardized and probably does not require the best of the best minds to teach.

Another aspect is that tenure is not just about protecting faculty from being fired for political reasons. Tenure also allows universities to fulfill their mission toward preserving knowledge because tenured faculty will be around for a long time, communicating their vast and detailed knowledge to the next generation. Eliminating tenure lines may basically mean that an institution is giving up some or all of its commitment to the knowledge preservation mission. This is surely a loss for society as a whole, but it does raise the interesting question about which institutions are best positioned to fulfill that mission -- it may be that the institutions who are giving up on it were not doing a very good job at it in the first place. The fact that tenure lines are mainly (but not always) being lost from the lower-ranked institutions suggests that the top places are largely still committed to this mission, even if they are retrenching to some degree (perhaps because of the shifting demands on bachelor degree production described above).

So, let's take stock. Is there a crisis? Not in the usual definition of the word, no. But, there are serious issues that we should consider, and these tap deep into both the mission and purpose of higher education and its relationship to society as a whole.

The good things for society about the current system are that the over-supply of PhDs produces a steady stream of highly educated people for other industries and government to use. The over-supply means that low-quality departments will tend to improve over time because they can hire better people than their peers tend to produce. The over-supply also means that the best or most desirable departments will also tend to improve over time because they can select their new hires from the very best of the very best. For scholarship in general, this is a good thing. The over-supply means that academia has a large supply of low-cost skilled labor (graduate students) for producing research, educating younger students, etc. And, the over-supply means that academia has an adequate supply of potential faculty to facilitate restructuring needs, i.e., responding to the changing demands from society and the changing roles of universities.

The bad things are that the over-supply is a colossal waste of human potential for people who aspire to be faculty but who ultimately fail to find employment. For instance, many very talented individuals will spend substantial time in low-paying, low-benefits temporary employment (graduate students, postdocs, adjuncts, research faculty positions, etc.) only to discover years or decades later that these years are now counted against them on the job market (and not just in the academic market). The over-supply makes the individual experience of finding a job fairly brutal and with a high error rate (many people who should get faculty jobs do not [13]). Success also comes with a cost in the form of moving a very large distance (the faculty job market is one of the few truly national labor markets). The over-supply has made it easy for susceptible colleges and universities to slowly replace their tenure track faculty with non-tenure faculty with less autonomy, less security, lower pay and lower benefits, which ultimately means these institutions basically abandon one of their missions: preserving human knowledge. It also makes the institution less democratic, which likely has a negative impact on the campus culture and the educational environment.

Just as this situation did not appear suddenly, I don't think it will change significantly in the near future. Although Congress is a powerful voice in higher education, and has had a direct role in creating the over-supply, the large and complex ecology of higher education institutions, society itself and the economy as a whole are also key players. What happens will depend on their interactions, and lobbying Congress alone may lead to unexpected and undesirable results [14]. In the near term, I think the over-supply will persist (and if anything the job market will become even more competitive, but again this is not a completely bad thing), the number of non-tenured positions will continue to increase (mainly at lower-ranked institutions or for teaching intro classes at the higher-ranked places), undergraduate degrees will become even more commoditized, and the mission of knowledge preservation will be increasingly concentrated among the better or more financially stable institutions.

One long-term consequence is a partitioning of the faculty at research universities into "research" faculty (tenure-track faculty who do research and teach mainly graduate and upper-level undergraduate courses, of which I am one) and "teaching" faculty (non-tenure-track faculty who teach heavy course loads of lower-level undergraduate classes), and that does seem like the way things are going [15]. I wish that research universities (and tenure-track faculty) would treat the non-tenure groups with more respect and include them more directly in the decision-making processes. And, I hope that we can find better ways of encouraging the very best young scholars to stick with it, even though the system will likely become only more brutal in the future [16].

To end on a more positive note, one genuinely beneficial thing we as academics could do would be to encourage our PhD students to consider non-academic trajectories. That is, I don't think we should view the PhD as being exclusively an academic degree, and we could strive to teach our PhD students a combination of both academic and practical skills. This would increase their options on the job market, which may reduce the overall brutality that individuals currently experience.

-----

[0] Partly because I was in that market myself. And, now being in a tenure-track position at a good university, I'm lucky enough to be on the other side of that harrowing process. Had I written this a couple of years ago, I'm sure I would have said slightly different things.

[1] These are well covered by Roger Geiger's excellent and authoritative books on the evolution of the American university system, in the post-war period and since 1990. These books are highly recommended. Geiger takes what should be a dry and boring subject and makes it a fascinating and insightful story.

[2] This is true at both public and private universities. The only place it's less accurate is in medical research schools, where faculty lines are often funded out of "soft" money from research grants. (Some are still funded from medical school tuition revenue, so the coupling with student populations is not eliminated.) The result is that these faculty lines are mainly sensitive to changes in federal funding levels.

[3] Another complicating factor is that tenure lines are traditionally tied to departments, and their number depends on student demand for those courses offered by that department. That is, teaching is still a labor-constrained activity. The division of that labor into departments means that growth in faculty lines is driven by changes in the popularity of different disciplines. The benefits for the faculty job market created by overall growth in student enrollments will thus be distributed unevenly.

There are at least two factors that decouple the number of faculty lines and the popularity of the field: tenure, which means departments tend to shrink very slowly in response to decreasing popularity while the reverse is not true, and the commitment that all universities have to the preservation and production of knowledge, which means even an unpopular department may be maintained as a kind of cultural memory device.

[4] This is done partly through direct support to students (about 15% of the budget) and partly through grants (50% of the budget); typically, large portions of grants are in fact used to support graduate students by paying them as research assistants.

[5] Apparently, NSF has always struggled to justify its budget to Congress, which generally has an uncomfortable relationship with the idea of supporting basic research for the sake of humanity. For NSF, historically "supporting education," and more recently "supporting economic growth" (a.k.a. technology transfer), have been successful arguments, and these are reflected in funding priorities.

[6] This is almost surely true in Computer Science, where some of the best programs are also some of the largest. For example, MIT and CMU collectively have about 250 faculty; if they each produce a single graduate each year, that would be enough to place one person at each of the other 200-odd PhD-granting Computer Science departments in North America. The per-faculty production rate is probably not so high, but the overall volume may be if we account for other top places like Stanford, Washington, Princeton, etc. If we include the fact that not every department hires every year, it seems entirely reasonable that the top 10 places could fill the entire annual market demand themselves.

[7] This effect probably happens faster for newer fields, e.g., complex systems. The best universities are all fairly sensitive to their perceived prestige and quality, and for them, it doesn't make strategic sense to risk their current standing with risky investments in untested fields. This means that lower-ranked universities who place smart bets can move up (at least during the time it takes for a field to become established enough that the top places start poaching the best people).

[8] Physics experienced a long expansion, but that had largely run its course in the United States by the time Congress trashed the Superconducting Super Collider in 1993. In contrast, biomedical research has been expanding fairly steadily for 70 years, which is probably why it dominates federal science budgets. The "golden age" of science was really the post-war and Sputnik eras, when federal spending was expanding faster than universities could satisfy the demand for research. The 1970s were apparently a fairly broad contraction, because Congress moved to limit the growth in science budgets (for instance, NASA's budget peaked in the early 1970s) and because student enrollment growth tempered. Since then, the expansions and contractions have been less even.

[9] On the other hand, had anyone convincingly explained to my younger self just what life would be like in my first few years as a professor, I may have decided to try a different career path. More generally, ignorance may be a crucial part of what makes the whole system work: it allows us to unknowingly take foolish risks that sometimes yield truly remarkable, or at least highly improbable, results. At the collective level, youthful foolishness may be essential to keeping the quality of the faculty high despite the brutality of the career path.

[10] Of course, in the meantime it's terrible that some institutions and individuals are taking advantage of the current powerlessness of these groups. They can and should be integrated into the academy and given a voice.

[11] Some graduate and professional degrees also show evidence of becoming commodities, for instance, MBAs. It's not clear that PhDs are facing similar pressures, although in my darker moments I believe it.

[12] From this perspective, things like Stanford's free online courses may be a truly disruptive innovation. They offer the possibility of dramatically lowered cost, dramatically increased "production" and they seem to require a currently specialized set of skills. Of course, their success could destroy what remains of the tenure track at smaller or less unique institutions.

[13] As I've learned, it's a highly stochastic and error-prone process. Departments tend to decide ahead of time to hire in a particular area, and this means top-notch candidates from outside that area are potentially passed over for less-top-notch candidates within the target area. The decision of which area to hire in is often driven by internal politics (which "group" is stronger, which has a louder voice, "whose turn" it is) or existing curricular needs rather than meritocratic or strategic concerns. And, even within a given area, it can be difficult to accurately assess true merit and relative quality, particularly for junior positions where each candidate's track record is, by definition, relatively short.

Did I mention that I'm reading PhD applications this week? Ugh.

[14] It certainly has in the past.

[15] Ironically, dividing the teaching and research between different groups of faculty is mostly how the Germans used to do things. Now, the Germans are Americanizing their system to some degree, while we Americans seem to be Germanizing ours.

[16] From my perspective, "early career" funding, fellowship and other young faculty support mechanisms seem to be wholly inadequate (in size and scope) and the easiest way to get them is to propose highly incremental, highly risk-averse research. This does not seem to be serving the right goals or to be teaching young faculty the right lessons about scholarship.

posted January 13, 2012 10:26 AM in Simply Academic

October 22, 2009

National Computer Science Education Week, or: It's About Time

Is it cliche to say "it's about time"?

The ACM, with Microsoft, Google, Intel and some other organizations, managed to persuade the US Congress that Computer Science is a Good Thing(tm) and that it deserves some recognition for driving economic growth (you know, making things like medicine, movies, music, and cars) [1]. To recognize the goodness, Congress passed a resolution (H. RES. 558) to designate the week of December 7 as “National Computer Science Education Week.” [2]

The resolution, H. RES. 558, sponsored by Congressmen Vernon Ehlers (R-MI) and Jared Polis (D-CO) [3], designates the week of December 7 as “National Computer Science Education Week.” Citing the influence of computing technology as a significant contributor to U.S. economic output, the House resolution calls on educators and policymakers to improve computer science learning at all educational levels, and to motivate increased participation in computer science.

“Increasing energy efficiency, advancing healthcare, and improving communication in the digital age are just a few of the national priorities that depend on computer science, which Congress has recognized. Computer science teaches students design, logical reasoning, and problem-solving – all skills that have value well beyond the classroom,” said Rick Rashid, senior vice president of Research for Microsoft.

“Despite serious economic challenges confronting the nation, computer science-related jobs are among the fastest-growing and highest paying over the next decade,” said Alfred Spector, vice president of Research and Special Initiatives at Google, Inc. “These times require an increasing supply of diverse students exposed to rigorous and engaging computing courses at the K-12 level, and National Computer Science Education Week can help to reinforce this effort.”

Good fanfare, and good effort for sure. It's a small gesture really, but I guess it does give organizations like the ACM a hook to hang their public campaigns on. And for sure, education about computers, computer science, and their use (and abuse) in society is something the public could do with some educating on.

Tip to Tanya Berger-Wolf.

-----

[1] Thankfully, they didn't mention that computer science and computers have also produced massive amounts of wasted time, the estimation of which never ceases to amuse me. (If you'd like to estimate it for yourself, try this.)

[2] In a fit of gender-neutrality (something Computer Science is not known for), the date was chosen to honor Grace Hopper, who wrote the first compiler and helped invent the indispensable COBOL, in addition to being a Rear Admiral in the Navy, and having a Naval destroyer named after her.

[3] Incidentally, I was very happy to discover that Mr. Polis represents the 2nd District of Colorado, and he'll be my representative once I move to Boulder next summer.

posted October 22, 2009 01:19 PM in Computer Science

January 31, 2009

Crowdsourcing to Africa

It's a high honor to be slashdotted, so congrats to my friend and colleague Nathan for being featured yesterday:

Technology Review has an article about a startup that wants to build a business out of crowd-sourcing the developing world. The company, called txteagle, seems to be interested mainly in using local knowledge to translate information into less common languages. The Finnish cell-phone company Nokia is a partner in the project, and CEO Nathan Eagle says that it provides a good example of a Western company that could benefit from txteagle workers. Eagle explains that Nokia is interested in 'software localization,' or translating its software for specific regions of a country. 'In Kenya, there are over 60 unique, fundamentally different languages,' he says. 'You're lucky to get a phone with a Swahili interface, but even that might be somebody's third language. Nokia would love to have phones for everyone's mother tongues, but it has no idea how to translate words like "address book" into all of these languages.'

Nathan and I have chatted several times about txteagle. The basic idea is similar to Amazon's Mechanical Turk, but rather than crowdsource any task to anyone with a computer, txteagle is focused on simple tasks that can be done on a cell phone. The tasks will still be those that require a human-style intelligence (that is, a task like image recognition or language translation that requires semantic knowledge of human culture and preferences), but being able to do them on a mobile phone limits their complexity to some degree.

posted January 31, 2009 12:20 AM in Computer Science

August 22, 2007

Seam carving and image resizing

This technique for image resizing (which appeared in SIGGRAPH 2007) is extremely cool. I particularly like the erasure feature - that could come in handy for removing former friends or ex-girlfriends from favorite photos. I predict it will also usher in a whole new era of crafty image manipulation for political reasons... politicos can just erase themselves from the incriminating photos!

In the paper (reference given below), the authors note that the seam carving method for removing (or adding) pixels performs poorly when there aren't many places with "low content" and describe an example with human faces. These sensitive areas can be protected (forcing the seams to avoid them), but there are limits as to how much carving can be done when there are a lot of places that you want to protect. All in all, an impressively clever approach to image resizing.
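For the curious, here is a minimal sketch of the core idea in plain Python: score each pixel with an "energy," use dynamic programming to find the connected top-to-bottom path (the seam) with the lowest total energy, and delete it. This is my own toy illustration, not the authors' implementation. The function names, the simple gradient-based energy, and the trick of protecting pixels by adding a large penalty are all assumptions made for the example.

```python
def energy_map(gray):
    """Toy energy: |horizontal difference| + |vertical difference|.

    `gray` is a list of rows of numbers. This particular energy function
    is an assumption for illustration; other choices are possible.
    """
    h, w = len(gray), len(gray[0])
    energy = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            left = gray[y][max(x - 1, 0)]
            right = gray[y][min(x + 1, w - 1)]
            up = gray[max(y - 1, 0)][x]
            down = gray[min(y + 1, h - 1)][x]
            energy[y][x] = abs(right - left) + abs(down - up)
    return energy


def find_vertical_seam(energy, protect=None, penalty=1e9):
    """Return one column index per row for the minimum-energy vertical seam.

    `protect` is an optional grid of booleans (hypothetical helper input);
    protected pixels get a large penalty so seams steer around them.
    """
    h, w = len(energy), len(energy[0])
    cost = [row[:] for row in energy]
    if protect is not None:
        for y in range(h):
            for x in range(w):
                if protect[y][x]:
                    cost[y][x] += penalty

    # cost[y][x] becomes the cheapest total cost of any seam ending at (y, x);
    # back[y][x] remembers which column in the row above achieved it.
    back = [[0] * w for _ in range(h)]
    for y in range(1, h):
        for x in range(w):
            lo, hi = max(x - 1, 0), min(x + 1, w - 1)
            best = min(range(lo, hi + 1), key=lambda c: cost[y - 1][c])
            back[y][x] = best
            cost[y][x] += cost[y - 1][best]

    # Start from the cheapest endpoint in the last row and walk back up.
    x = min(range(w), key=lambda c: cost[h - 1][c])
    seam = [0] * h
    for y in range(h - 1, -1, -1):
        seam[y] = x
        x = back[y][x]
    return seam


def remove_vertical_seam(gray, seam):
    """Delete one pixel per row, making the image one column narrower."""
    return [row[:x] + row[x + 1:] for row, x in zip(gray, seam)]


image = [
    [10, 10, 10, 90, 10],
    [10, 10, 10, 90, 10],
    [10, 10, 10, 90, 10],
]
seam = find_vertical_seam(energy_map(image))
narrower = remove_vertical_seam(image, seam)
```

Repeating the find-and-remove step shrinks the image one column at a time. The penalty approach also makes the limitation described above easy to see: once most pixels are protected, every remaining seam has to pass through something you wanted to keep.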

S. Avidan and A. Shamir, "Seam Carving for Content-Aware Image Resizing." SIGGRAPH (2007). [Warning: pdf is about 21MB in size; alternative source (via ACM).]

Update 1 February 2008: The New York Times is now (finally) running an article on popular versions of this technique, and its implications for photo art. (Tip to Jake)

posted August 22, 2007 08:45 AM in Computer Science

June 29, 2007

Announcement: DIMACS/DyDAn Workshop on Computational Methods for Dynamic Interaction Networks

While chatting recently with Martin Rosvall, I realized that it might actually be useful (gasp!) if I were to post information about workshops and conferences on complex networks that I hear about. So, in the interest of having this blog serve at least one additional purpose other than being my own personal bully pulpit, I'll try to post announcements as I receive them. Also, to those of you who are plugged into these things, you could help out by sending me your own workshop and conference announcements.

Without further ado, here's the first of the bunch coming up in the Fall. The paper submission deadline is already upon us (Sunday, July 1st) but DIMACS has a good track record of running good workshops, so maybe some folks will find it worthwhile to attend. Update 29 June: Deadline has been extended to July 8th, and I'm told there will be some support available for junior folks to attend.

DIMACS / DyDAn Workshop on Computational Methods for Dynamic Interaction Networks

September 24 - 25, 2007 at the DIMACS Center, CoRE Building, Rutgers University

Organizers: Tanya Berger-Wolf (UIUC), Mark Goldberg (RPI), Malik Magdon-Ismail (RPI), Fred Roberts (DIMACS) and William "Al" Wallace (RPI).

Description: A substantial body of research in various sciences aims at understanding the dynamics and patterns of interactions within populations, in particular how social groups arise and evolve. As a result of the advances in communications and computing technology, extreme amounts of data are being accumulated representing the evolution of large scale communication networks, such as the WWW, chatrooms, Blogs, and networks of bluetooth enabled handheld devices. Moreover, as small sensors become largely available and affordable, new research areas are exploiting the social networks resulting from those sensor networks data. Finding patterns of social interaction within a population has been addressed in a wide range of applications including: disease modeling, cultural and information transmission, intelligence and surveillance, business management, conservation biology and behavioral ecology.

The workshop will focus on two complementary themes. On one hand it will address the emerging importance of electronic communication networks, their social implications and how those facilitate the organization and coordination of activities of social groups. The second theme of the workshop is adapting and extending the computational methods developed in the context of communication and computer networks to the social interaction networks.

Topics:

- Modeling and simulation of dynamic social networks

- Measurement and comparison of dynamic social networks

- Community and social structure identification

- Identification of individual roles and behavioral patterns

- Visualization of large dynamic networks

Update 13 August: Here is the program. I'll be presenting a paper at this workshop entitled "Persistence and periodicity in a dynamic proximity network", which is joint work with Nathan Eagle (currently of MIT, but soon to be joining SFI), and considers the real-time dynamics of a human proximity network.

posted June 29, 2007 02:08 PM in Conferences and Workshops | permalink | Comments (0)

June 17, 2007

Mathematics of Sudoku

Long-time readers and friends of mine who have had the unfortunate luck to ask me about Sudoku will know that I'm not very fond of the game itself. In fact, I've never completed one by hand (even an "easy" one) because I get bored running a constraint satisfaction algorithm by hand. Instead, for a project in one of my courses in graduate school, I wrote a computer program to solve them for me. (I wrote two versions, one that implements a brute-force backtracking algorithm (in Prolog) and one that implements a constraint satisfaction algorithm (in Ciao), and did a little write-up to explain them. The code will actually solve an arbitrary sized puzzle, and not just the regular 9 x 9 ones you find in newspapers.)

The last time I blogged about Sudoku was to talk about the interesting mathematical or theoretical questions about the game. Things like, given a partially-completed puzzle, how many unique solutions exist? For a given solution, how many unique partially-completed puzzles exist with a given number of entries provided for you? These probably aren't the kinds of questions most people ask when they sit down to solve a Sudoku puzzle, since they're interesting at a higher level than coming up with a solution to a particular instance.

Fortunately, mathematicians have been thinking about these kinds of questions for a couple of years now, and an article in the June/July 2007 issue of the Notices of the American Mathematical Society (AMS) by Agnes M. Herzberg and M. Ram Murty delves into these with great enthusiasm. In particular, they show that Sudoku puzzles can be reduced to a graph coloring problem. Each of the 81 boxes in the puzzle are nodes in a graph (network), and two nodes are connected if they appear in the same row, column or sub-grid. Then, each of the numbers 1..9 is assigned a color, and the task is to come up with a coloring of the graph such that no edge has the same color on both ends. For regular instances, some of the colors are given, and your task is to complete the coloring.
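As a small sketch of that reduction (my own illustration, not code from the article), the following builds the Sudoku graph for an n^2 x n^2 puzzle and checks whether a filled-in grid is a proper coloring. The function names are hypothetical. For the regular 9 x 9 case, each box has 20 neighbors (8 in its row, 8 in its column, and 4 more in its sub-grid), so the graph has 81 x 20 / 2 = 810 edges.

```python
from itertools import combinations


def sudoku_graph(n=3):
    """Nodes are (row, col) boxes; edges join boxes sharing a row, column or sub-grid."""
    side = n * n
    cells = [(r, c) for r in range(side) for c in range(side)]
    edges = set()
    for a, b in combinations(cells, 2):
        same_row = a[0] == b[0]
        same_col = a[1] == b[1]
        same_box = (a[0] // n, a[1] // n) == (b[0] // n, b[1] // n)
        if same_row or same_col or same_box:
            edges.add((a, b))
    return cells, edges


def is_proper_coloring(grid, edges):
    """True if no edge has the same number (color) on both ends."""
    return all(grid[a[0]][a[1]] != grid[b[0]][b[1]] for a, b in edges)


cells, edges = sudoku_graph(3)
# A standard shifted-row construction of one valid 9 x 9 solution.
solved = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]
print(len(cells), len(edges), is_proper_coloring(solved, edges))
```

A solver in this framing would start from a partial coloring (the given clues) and extend it to the remaining nodes.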

The nice thing about this reformulation is that it makes the questions I mentioned above amenable to some of the tools from mathematics and computer science for dealing with the general problem of graph coloring (e.g., chromatic polynomials and other things I wish I understood better). For instance, the authors are able to prove that although there are roughly 6.671 x 10^21 valid Sudoku squares (a trivially small fraction of the number of Latin squares, which is about 5.525 x 10^27!), only a mere 5,472,730,538 are unique (when you account for symmetries and relabelings). That number alone should keep puzzle fans and newspapers busy for quite a while. I wonder if we'll ever see the day when newspapers print puzzles that ask players to find both of two unique solutions, or to count the number of unique solutions for a particular puzzle. In spite of my ongoing dislike of the Sudoku craze gripping the country, I should at least take comfort in knowing that people everywhere are exercising the rational side of the brain, and a few may even be pondering the deeper mathematical mysteries of the game.

(tip to Ars Technica for their coverage.)

posted June 17, 2007 08:00 AM in Computer Science | permalink | Comments (0)

May 27, 2007

DS07

This week, I'm in Snowbird, UT for SIAM's conference on Applications of Dynamical Systems (DS07). I'm here for a mini-symposium on complex networks organized by Mason Porter and Peter Mucha. I'll be blogging about these (and maybe other) network sessions as time allows (I realize that I still haven't blogged about NetSci last week - that will be coming soon...).

posted May 27, 2007 11:38 PM in Scientifically Speaking | permalink | Comments (1)

May 21, 2007

NetSci 2007

This week, I'm in New York City for the International Conference on Network Science, being held at the New York Hall of Science Museum in Queens. I may not be able to blog each day about the events, but I'll be posting my thoughts and comments as things progress. Stay tuned. In the meantime, here's the conference talk schedule.

posted May 21, 2007 11:41 AM in Scientifically Speaking | permalink | Comments (0)

IPAM - Random and Dynamic Graphs and Networks (Days 4 & 5)

Two weeks ago, I was in Los Angeles for the Institute for Pure and Applied Mathematics' (IPAM, at UCLA) workshop on Random and Dynamic Graphs and Networks; this is the fourth and fifth entry.

Rather than my usual format of summarizing the things that got me thinking during the last few days, I'm going to go with a more free-form approach.

Thursday began with Jennifer Chayes (MSR) discussing some analytical work on adapting convergence-in-distribution proof techniques to ensembles of graphs. She introduced the cut-norm graph distance metric (useful on dense graphs; says that they have some results for sparse graphs, but that it's more difficult for those). The idea of graph distance seems to pop up in many different areas (including several I've been thinking of) and is closely related to the GRAPH ISOMORPHISM problem (which is not known to be NP-complete, but nor is it known to be in P). For many reasons, it would be really useful to be able to calculate in polynomial time the minimum edge-edit distance between two graphs; this would open up a lot of useful techniques based on transforming one graph into another.

Friday began with a talk by Jeannette Janssen (Dalhousie University) on a geometric preferential attachment model, which is basically a geometric random graph but where nodes have a sphere of attraction (for new edges) that has volume proportional to the node's in-degree. She showed some very nice mathematical results on this model. I wonder if this idea could be generalized to arbitrary manifolds (with a distance metric on them) and attachment kernels. That is, imagine that our complex network is actually embedded on some complicated manifold and the attachment is based on some function of the distance on that manifold between the two nodes. The trick would be then to infer both the structure of the manifold and the attachment function from real data. Of course, without some constraints on both features, it would be easy to construct an arbitrary pair (manifold and kernel) that would give you exactly the network you observed. Is it sufficient to get meaningful results that both should be relatively smooth (continuous, differentiable, etc.)?

Jeannette's talk was followed by Filippo Menczer's talk on mining traffic data from the Internet2/Abilene network. The data set was based on daily dumps of end-to-end communications (packet headers with client and server IDs anonymized) and looked at a variety of behaviors of this traffic. He used this data to construct interaction graphs between clients and servers, clients and applications (e.g., "web"), and a few other things. The analysis seems relatively preliminary in the sense that there are a lot of data issues that are lurking in the background (things like aggregated traffic from proxies, aliasing and masking effects, etc.) that make it difficult to translate conclusions about the traffic into conclusions about real individual users. But, fascinating stuff, and I'm looking forward to seeing what else comes out of that data.

The last full talk I saw was by Raissa D'Souza on competition-induced preferential attachment, and a little bit at the end on dynamic geometric graphs and packet routing on them. I've seen the technical content of the preferential attachment talk before, but it was good to have the reminder that power-law distributions are not necessarily the only game in town for heavy-tailed distributions, and that even though the traditional preferential attachment mechanism may not be a good model of the way real growing networks change, it may be that another mechanism that better models the real world can look like preferential attachment. This ties back to Sidney Redner's comment a couple of days before about the citation network: why does the network look like one grown by preferential attachment, when we know that's not how individual authors choose citations?

posted May 21, 2007 11:38 AM in Scientifically Speaking | permalink | Comments (4)

May 09, 2007

IPAM - Random and Dynamic Graphs and Networks (Day 3)

This week, I'm in Los Angeles for the Institute for Pure and Applied Mathematics' (IPAM, at UCLA) workshop on Random and Dynamic Graphs and Networks; this is the third of five entries based on my thoughts from each day. As usual, these topics are a highly subjective slice of the workshop's subject matter...

The impact of mobility networks on the worldwide spread of epidemics

I had the pleasure of introducing Alessandro Vespignani (Indiana University) for the first talk of the day on epidemics in networks, and his work in modeling the effect that particles (people) moving around on the airport network have on models of the spread of disease. I've seen most of this stuff before from previous versions of Alex's talk, but there were several nice additions. The one that struck the audience the most was a visualization of all of the individual flights over the space of a couple of days in the eastern United States; the animation was made by Aaron Koblin for a different project, but was still quite effective in conveying the richness of the air traffic data that Alex has been using to do epidemic modeling and forecasting.

On the structure of growing networks

Sidney Redner gave the pre-lunch talk about his work on the preferential attachment growing-network model. Using the master equation approach, Sid explored an extremely wide variety of properties of the PA model, such as the different regimes of degree distribution behavior for sub-, exact, and different kinds of super- linear attachment rates, the first-mover advantage in the network, the importance of initial degree in determining final degree, along with several variations on the initial model. The power of the master equation approach was clearly evident; I should really learn more about it.

He also discussed his work analyzing 100 years of citation data from the Physical Review journal (about 350,000 papers and 3.5 million citations; in 1890, the average number of references in a paper was 1, while in 1990, the average number had increased to 10), particularly with respect to his trying to understand the evidence for linear preferential attachment as a model of citation patterns. Quite surprisingly, he showed that for the first 100 or so citations, papers in PR have nearly linear attachment rates. One point Sid made several times in his talk is that almost all of the results for PA models are highly sensitive to variations in the precise details of the attachment mechanism, and that it's easy to get something quite different (so, no power laws) without trying very hard.

Finally, a question he ended with is why does linear PA seem to be a pretty good model for how citations accrue to papers, even though real citation patterns are clearly not dictated by the PA model?
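For readers who haven't played with the model, here is a minimal simulation sketch of linear preferential attachment, in which each arriving node makes a single link to an existing node chosen with probability proportional to its current degree. This is my own simplified toy version, not the exact variant analyzed in the talk; the function name and the choice of a single starting edge are assumptions made for illustration.

```python
import random


def grow_pa_tree(n, seed=None):
    """Grow an n-node tree by linear preferential attachment; return the degree list."""
    rng = random.Random(seed)
    degree = [1, 1]      # start from a single edge between nodes 0 and 1
    endpoints = [0, 1]   # each node appears once per unit of degree
    for new in range(2, n):
        # Picking a uniformly random edge endpoint selects a node
        # with probability proportional to its degree.
        target = rng.choice(endpoints)
        degree.append(1)
        degree[target] += 1
        endpoints.extend([new, target])
    return degree


degree = grow_pa_tree(10000, seed=42)
print("largest degrees:", sorted(degree, reverse=True)[:5])
print("degree of the first node:", degree[0])
```

Replacing the uniform choice over `endpoints` with a different weighting would be one way to experiment with sub- or super-linear attachment rates.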

Panel discussion

The last talk-slot of the day was replaced by a panel discussion, put together by Walter Willinger and chaired by Mark Newman. Instead of the usual situation where the senior people of a field sit on the panel, this panel was composed of junior people (with the expectation that the senior people in the audience would talk anyway). I was asked to sit on the panel, along with Ben Olding (Harvard), Lea Popovic (Cornell), Leah Shaw (Naval Research Lab), and Lilit Yeghiazarian (UCLA). We each made a brief statement about what we liked about the workshop so far, and what kinds of open questions we would be most interested in seeing the community study.

For my own part, I mentioned many of the questions and themes that I've blogged about the past two days. In addition, I pointed out that function is more than just structure, being typically structure plus dynamics, and that our models currently do little to address the dynamics part of this equation. (For instance, can dynamical generative models of particular kinds of structure tell us more about why networks exhibit those structures specifically, and not some other variety?) Lea and Leah also emphasized dynamics as being a huge open area in terms of both modeling and mechanisms, with Lea pointing out that it's not yet clear what are the right kinds of dynamical processes that we should be studying with networks. (I made a quick list of processes that seem important, but only came up with two main categories, branching-contact-epidemic-percolation processes and search-navigation-routing processes. Sid later suggested that consensus-voting style processes, akin to the Ising model, might be another, although there are probably others that we haven't thought up yet.) Ben emphasized the issues of sampling, for instance, sampling subgraphs of our model, e.g., the observable WWW or even just the portion we can crawl in an afternoon, and dealing with sampling effects (i.e., uncertainty) in our models.

The audience had a lot to say on these and other topics, and particularly so on the topics of what statisticians can contribute to the field (and also why there are so few statisticians working in this area; some suggestions that many statisticians are only interested in proving asymptotic results for methods, and those that do deal with data are working on bio-informatics-style applications), and on the cultural difference between the mathematicians who want to prove nice things about toy models (folks like Christian Borgs, Microsoft Research) as a way of understanding the general properties of networks and of their origin, and the empiricists (like Walter Willinger) who want accurate models of real-world systems that they can use to understand their system better. Mark pointed out that there's a third way in modeling, which relies on using an appropriately defined null model as a probe to explore the structure of your network, i.e., a null model that reproduces some of the structure you see in your data, but is otherwise maximally random, can be used to detect the kind of structure the model doesn't explain (so-called "modeling errors", in contrast to "measurement errors"), and thus be used in the standard framework of error modeling that science has used successfully in the past to understand complex systems.

All-in-all, I think the panel discussion was a big success, and the conversation certainly could have gone on well past the one-hour limit that Mark imposed.

posted May 9, 2007 11:38 PM in Scientifically Speaking | permalink | Comments (0)

May 08, 2007

IPAM - Random and Dynamic Graphs and Networks (Day 2)

This week, I'm in Los Angeles for the Institute for Pure and Applied Mathematics' (IPAM, at UCLA) workshop on Random and Dynamic Graphs and Networks; this is the second of five entries based on my thoughts from each day. As usual, these topics are a highly subjective slice of the workshop's subject matter...

Biomimetic searching strategies

Massimo Vergassola (Institut Pasteur) started the day with an interesting talk that had nothing to do with networks. Massimo discussed the basic problem of locating a source of smelly molecules in the macroscopic world where air currents cause pockets of the smell to be sparsely scattered across a landscape, thus spoiling the chemotaxis (gradient ascent) strategy used by bacteria, and a clever solution for it (called "infotaxis") based on trading off exploration and exploitation via an adaptive entropy minimization strategy.

Diversity of graphs with highly variable connectivity

Following lunch, David Alderson (Naval Postgraduate School) described his work with Lun Li (Caltech) on understanding just how different networks with a given degree distribution can be from each other. The take-home message of Dave's talk is, essentially, that the degree distribution is a pretty weak constraint on other patterns of connectivity, and is not a sufficient statistical characterization of the global structure of the network with respect to many (most?) of the other (say, topological and functional) aspects we might care about. Although he focused primarily on degree assortativity, the same kind of analysis could in principle be done for other network measures (clustering coefficient, distribution, diameter, vertex-vertex distance distribution, etc.), none of which are wholly independent of the degree distribution, or of each other! (I've rarely seen the interdependence of these measures discussed (mentioned?) in the literature, even though they are often treated as independent.)

In addition to describing his numerical experiments, Dave sounded a few cautionary notes about the assumptions that are often made in the complex networks literature (particularly by theoreticians using random-graph models) on the significance of the degree distribution. For instance, the configuration model with a power-law degree sequence (and similarly, graphs constructed via preferential attachment) yields networks that look almost nothing like any real-world graph that we know, except for making vaguely similar degree distributions, and yet they are often invoked as reasonable models of real-world systems. In my mind, it's not enough to simply fix-up our existing random-graph models to instead define an ensemble with a specific degree distribution, and a specific clustering coefficient, and a diameter, or whatever our favorite measures are. In some sense all of these statistical measures just give a stylized picture of the network, and will always be misleading with respect to other important structural features of real-world networks. For the purposes of proving mathematical theorems, I think these simplistic toy models are actually very necessary -- since their strong assumptions make analytic work significantly easier -- so long as we also willfully acknowledge that they are a horrible model of the real world. For the purposes of saying something concrete about real networks, we need more articulate models, and, probably, models that are domain specific. That is, I'd like a model of the Internet that respects the idiosyncrasies of this distributed engineered and evolving system; a model of metabolic networks that respects the strangeness of biochemistry; and a model of social networks that understands the structure of individual human interactions. More accurately, we probably need models that understand the function that these networks fulfill, and respect the dynamics of the network in time.
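For reference, the configuration model mentioned above can be sketched in a few lines via random stub matching. This is a generic textbook-style illustration, not Dave's code; the function name is mine, and this simple version allows self-loops and multi-edges. Running it repeatedly on the same degree sequence gives a feel for how many different wirings share that sequence.

```python
import random


def configuration_model(degrees, seed=None):
    """Randomly pair up edge stubs so that node v ends up with degrees[v] edge ends."""
    if sum(degrees) % 2:
        raise ValueError("the degree sequence must have an even sum")
    rng = random.Random(seed)
    # One stub per unit of degree.
    stubs = [v for v, d in enumerate(degrees) for _ in range(d)]
    rng.shuffle(stubs)
    # Consecutive stubs in the shuffled list form the edges.
    return list(zip(stubs[::2], stubs[1::2]))


edges = configuration_model([3, 2, 2, 1], seed=0)
print(edges)
```

Every draw has exactly the requested degrees (counting a self-loop twice), while everything else about the wiring is left to chance.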

Greedy search in social networks

David Liben-Nowell (Carleton College) then closed the day with a great talk on local search in social networks. The content of this talk largely mirrored that of Ravi Kumar's talk at GA Tech back in January, which covered an empirical study of the distribution of the (geographic) distance covered by friendship links in the LiveJournal network (from 2003, when it had about 500,000 users located in the lower 48 states). This work combined some nice data analysis with attempts to validate some of the theoretical ideas due to Kleinberg for locally navigable networks, and a nice generalization of those ideas to networks with non-uniform population distributions.

An interesting point that David made early in his talk was that homophily is not sufficient to explain the presence of either the short paths that Milgram's original 6-degrees-of-separation study demonstrated, or even the existence of a connected social graph! That is, without a smoothly varying notion of "likeness", then homophily would lead us to expect disconnected components in the social network. If both likeness and the population density in the likeness space varied smoothly, then a homophilic social web would cover the space, but the average path length would be long, O(n). In order to get the "small world" that we actually observe, we need some amount of non-homophilic connections, or perhaps multiple kinds of "likeness", or maybe some diversity in the preference functions that individuals use to link to each other. Also, it's still not clear what mechanism would lead to the kind of link-length distribution predicted by Kleinberg's model of optimally navigable networks - an answer to this question would, presumably, tell us something about why modern societies organize themselves the way they do.

posted May 8, 2007 10:52 PM in Scientifically Speaking | permalink | Comments (0)

May 07, 2007

IPAM - Random and Dynamic Graphs and Networks (Day 1)

This week, I'm in Los Angeles for the Institute for Pure and Applied Mathematics' (IPAM, at UCLA) workshop on random and dynamic graphs and networks. This workshop is the third of four in their Random Shapes long program. The workshop has the usual format, with research talks throughout the day, punctuated by short breaks for interacting with your neighbors and colleagues. I'll be trying to do the same for this event as I did for the DIMACS workshop I attended back in January, which is to blog each day about interesting ideas and topics. As usual, this is a highly subjective slice of the workshop's subject matter.

Detecting and understanding the large-scale structure of networks

Mark Newman (U. Michigan) kicked off the morning by discussing his work on clustering algorithms for networks. As he pointed out, in the olden days of network analysis (c. 30 years ago), you could write down all the nodes and edges in a graph and understand its structure visually. These days, our graphs are too big for this, and we're stuck using statistical probes to understand how these things are shaped. And yet, many papers include figures of networks as incoherent balls of nodes and edges (Mark mentioned that Marc Vidal calls these figures "ridiculograms").

I've seen the technical content of Mark's talk before, but he always does an excellent job of making it seem fresh. In this talk, there was a brief exchange with the audience regarding the NP-completeness of the MAXIMUM MODULARITY problem, which made me wonder what exactly are the kind of structures that would make an instance of the MM problem so hard. Clearly, polynomial time algorithms that approximate the maximum modularity Q exist because we have many heuristics that work well on (most) real-world graphs. But, if I was an adversary and wanted to design a network with particularly difficult structure to partition, what kind would I want to include? (Other than reducing another NPC problem using gadgets!)
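For concreteness, the quantity being maximized can be computed for any given partition with a short function. This sketch uses the standard form of modularity, Q = sum over communities of (fraction of edges inside the community) minus (fraction of edge ends attached to it) squared; the function and variable names are my own, and the graph is assumed to be simple and undirected.

```python
from collections import defaultdict


def modularity(edges, community):
    """Modularity Q of a partition; `community` maps each node to a group label."""
    m = len(edges)
    internal = defaultdict(int)    # edges with both ends in the group
    degree_sum = defaultdict(int)  # total degree of the group's nodes
    for u, v in edges:
        degree_sum[community[u]] += 1
        degree_sum[community[v]] += 1
        if community[u] == community[v]:
            internal[community[u]] += 1
    return sum(internal[c] / m - (degree_sum[c] / (2 * m)) ** 2
               for c in degree_sum)


# Two triangles joined by a single edge.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
split = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
print(modularity(edges, split))
```

Scoring a partition is cheap; the hard part is the search over the enormous number of possible partitions.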

Walter Willinger raised a point here (and again in a few later talks) about the sensitivity of most network analysis methods to topological uncertainty. That is, just about all the techniques we have available to us assume that the edges as given are completely correct (no missing or spurious edges). Given the classic result due to Watts and Strogatz (1998) of the impact that a few random links added to a lattice have on the diameter of the graph, it's clear that in some cases, topological errors can have a huge impact on our conclusions about the network. So, developing good ways to handle uncertainty and errors while analyzing the structure of a network is a rather large, gaping hole in the field. Presumably, progress in this area will require having good error models of our uncertainty, which, necessarily, depend on the measurement techniques used to produce the data. In the case of traceroutes on the Internet, this kind of inverse problem seems quite tricky, but perhaps not impossible.
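The effect of a handful of spurious links is easy to see numerically. The sketch below is a toy experiment of my own, not anything shown at the workshop: it builds a ring lattice, adds a few random "erroneous" edges (shortcuts are added rather than rewired), and compares the mean shortest-path distance before and after. All names and parameter values are illustrative assumptions.

```python
import random
from collections import deque


def ring_lattice(n, k):
    """Ring of n nodes, each joined to its k nearest neighbors on either side."""
    adj = {v: set() for v in range(n)}
    for v in range(n):
        for step in range(1, k + 1):
            u = (v + step) % n
            adj[v].add(u)
            adj[u].add(v)
    return adj


def add_shortcuts(adj, count, seed=None):
    """Add `count` random edges between currently non-adjacent nodes (in place)."""
    rng = random.Random(seed)
    nodes = list(adj)
    added = 0
    while added < count:
        u, v = rng.sample(nodes, 2)
        if v not in adj[u]:
            adj[u].add(v)
            adj[v].add(u)
            added += 1
    return adj


def mean_distance(adj):
    """Average shortest-path length over reachable pairs, via BFS from every node."""
    total = pairs = 0
    for s in adj:
        dist = {s: 0}
        queue = deque([s])
        while queue:
            x = queue.popleft()
            for y in adj[x]:
                if y not in dist:
                    dist[y] = dist[x] + 1
                    queue.append(y)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


g = ring_lattice(500, 2)
before = mean_distance(g)
after = mean_distance(add_shortcuts(g, 10, seed=7))
print(before, after)
```

Ten extra edges are 1% of the 1,000 edges in this lattice, which is the kind of error rate one could easily imagine in measured data.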

Probability and Spatial Networks

David Aldous (Berkeley) gave the second talk and discussed some of his work on spatial random graphs, and, in particular, on the optimal design and flow through random graphs. As an example, David gave us a simple puzzle to consider:

Take a square of area N with N nodes distributed uniformly at random throughout. Now, subdivide this area into L^2 subsquares, and choose one node in each square to be a "hub." Then, connect each of the remaining nodes in a square to the hub, and connect the hubs together in a complete graph. The question is, what is the size L that minimizes the total (Euclidean) length of the edges in this network?
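If you'd rather poke at the puzzle numerically before working it out on paper, a rough simulation sketch follows. It reflects my own reading of the setup, with a few assumptions the puzzle statement leaves open: the hub in each subsquare is an arbitrary node (the first one generated), empty subsquares are simply skipped, and the function name is hypothetical. It requires Python 3.8 or later for `math.dist`.

```python
import math
import random


def hub_network_length(n, L, seed=None):
    """Total Euclidean edge length of the hub network for one random point set."""
    rng = random.Random(seed)
    side = math.sqrt(n)   # square of area n
    cell = side / L       # side length of each subsquare
    squares = {}
    for _ in range(n):
        x, y = rng.uniform(0, side), rng.uniform(0, side)
        key = (min(int(x / cell), L - 1), min(int(y / cell), L - 1))
        squares.setdefault(key, []).append((x, y))
    hubs = []
    total = 0.0
    for pts in squares.values():
        hub = pts[0]  # arbitrary hub choice within the subsquare
        hubs.append(hub)
        total += sum(math.dist(hub, p) for p in pts[1:])
    # Complete graph among the hubs.
    for i in range(len(hubs)):
        for j in range(i + 1, len(hubs)):
            total += math.dist(hubs[i], hubs[j])
    return total


for L in (1, 2, 4, 8, 16):
    print(L, round(hub_network_length(2000, L, seed=3)))
```

Small L makes the spokes long, while large L makes the hub-to-hub complete graph expensive, so the interesting values lie somewhere in between.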

He then talked a little about other efficient ways to connect up uniformly scattered points in an area. In particular, Steiner trees are the standard way to do this, and have a cost O(N). The downside for this efficiency is that the tree-distance between physically proximate points on the plane is something polynomial in N (David suggested that he didn't have a rigorous proof for this, but it seems quite reasonable). As it turns out, you can dramatically lower this cost by adding just a few random lines across the plane -- the effect is analogous to the one in the Watts-Strogatz model. Naturally, I was thinking about the structure of real road networks here, and it would seem that the effect of highways in the real world is much the same as David's random lines. That is, it only takes a few of these things to dramatically reduce the topological distance between arbitrary points. Of course, road networks have other things to worry about, such as congestion, that David's highways don't!

posted May 7, 2007 11:49 PM in Scientifically Speaking | permalink | Comments (1)

March 08, 2007

Computer science vs. Computer programming

Suresh blogs about a favorite topic (and hobby horse) of mine: the image problem that Computer Science has over computer programming. Programming is really just a generalization of calculation, so to think that Computer Science is primarily about programming is like thinking Mathematics is primarily about punching numbers into an equation, or rearranging symbols on the page until you get a particular form. Of course, that's probably what most people in the world think math is about. It wasn't until college that I realized that Mathematics was more than calculation, or that Computer Science was more than just programming. Naturally, computer scientists are annoyed by this situation, and, if they were more inclined to self-ridicule, they might call it the CS vs CP problem: Computer Science vs. Computer Programming [1].

On a related point, recently, I've been interacting with a hard-working and talented high school student who isn't sure what she wants to study in college. Much to my delight, I frequently find myself connecting problems she's interested in with topics in theoretical Computer Science. For instance, just today, we talked about random numbers. She was amazed to learn that the P vs. NP question connects deeply with the question of whether strong pseudo-random number generators exist [2,3], and also that a sender and receiver can use a common pseudo-random number generator to make their conversation difficult to follow [4].

-----

[1] Sadly, the US Government also seems to think that CS = CP. When they say that demand for degrees in computer science is forecast to grow dramatically in the next 20 years, they don't mean that we'll need more algorithms people; they mean more IT people.

[2] The P vs NP question is one of the most important questions in all of mathematics, and the Clay Institute offers a cool $1 million for solving it, as one of its Millennium Questions. (My advisor likes to say that if you could show that P = NP, then you'd make a lot more than $1 million off the patents, and playing the stock market, among other things. The startling thing is that even this description underestimates this question's significance.)

[3] There are several great introductions to the P vs NP question, and many of them touch on the connection with random numbers. This one (starting on page 34, but see pages 37-38 in particular) is one that I just found via Google.

[4] Most scientists think of the pseudo-randomness of random number generators as either being a nuisance, or only being useful to replicate old results. Engineers - such a clever lot - use it to make many modern communication protocols more robust by hopping pseudo-randomly among a set of allowed frequencies.
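A toy sketch of the frequency-hopping idea: if the sender and receiver seed the same pseudo-random number generator with a shared secret, they derive the same channel sequence without ever transmitting it. The channel list, seed, and function name below are made up for illustration, and Python's `random` module is not cryptographically secure, so this shows the principle rather than a usable protocol.

```python
import random


def hop_sequence(shared_seed, channels, hops):
    """Derive a pseudo-random sequence of channels from a shared seed."""
    rng = random.Random(shared_seed)
    return [rng.choice(channels) for _ in range(hops)]


channels = [2402 + i for i in range(8)]  # hypothetical channel frequencies, in MHz
sender = hop_sequence("shared secret", channels, 10)
receiver = hop_sequence("shared secret", channels, 10)
print(sender == receiver)
```

An eavesdropper without the seed sees transmissions jumping around the band with no obvious pattern.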

posted March 8, 2007 08:37 PM in Computer Science | permalink | Comments (1)

February 16, 2007

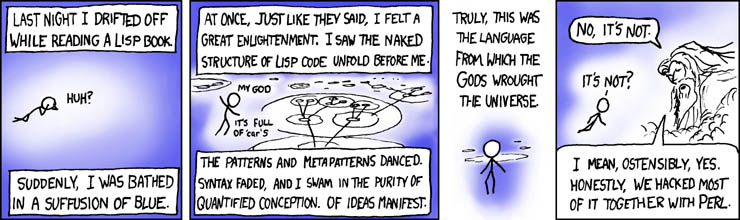

Greatest fear

A scientist's greatest fear...

Courtesy of xkcd.

posted February 16, 2007 11:43 AM in Humor | permalink | Comments (0)

February 15, 2007

Fast-modularity made really fast

The other day on the arxiv mailing, a very nice paper appeared (cs.CY/0702048) that optimizes the performance of the fast-modularity algorithm that I worked on with Newman and Moore several years ago. Our algorithm's best running time was O(n log^2 n) on sparse graphs with roughly balanced dendrograms, and we applied it to a large network of about a half million vertices (my implementation is available here).

Shortly after we posted the paper on the arxiv, I began studying its behavior on synthetic networks to understand whether a highly right-skewed distribution of community sizes in the final partition was a natural feature of the real-world network we studied, or whether it was caused by the algorithm itself [1]. I discovered that the distribution probably was not entirely a natural feature because the algorithm almost always produces a few super-communities, i.e., clusters that contain a large fraction of the entire network, even on synthetic networks with no significant community structure. For instance, in the network we analyzed, the top 10 communities account for 87% of the vertices.

Wakita and Tsurumi's paper begins with this observation and then shows that the emergence of these super-communities actually slows the algorithm down considerably, making the running time more like O(n^2) than we would like. They then show that by forcing the algorithm to prefer to merge communities of like sizes - and thus guaranteeing that the dendrogram it constructs will be fairly balanced - the algorithm achieves the bound of essentially linear running time that we proved in our paper. This speed-up yields truly impressive results - they cluster a 4 million node network in about a half an hour - and I certainly hope they make their implementation available to the public. If I have some extra time (unlikely), I may simply modify my own implementation. (Alternatively, if someone would like to make that modification, I'm happy to host their code on this site.)

Community analysis algorithm proposed by Clauset, Newman, and Moore (CNM algorithm) finds community structure in social networks. Unfortunately, CNM algorithm does not scale well and its use is practically limited to networks whose sizes are up to 500,000 nodes. The paper identifies that this inefficiency is caused from merging communities in unbalanced manner. The paper introduces three kinds of metrics (consolidation ratio) to control the process of community analysis trying to balance the sizes of the communities being merged. Three flavors of CNM algorithms are built incorporating those metrics. The proposed techniques are tested using data sets obtained from existing social networking service that hosts 5.5 million users. All the methods exhibit dramatic improvement of execution efficiency in comparison with the original CNM algorithm and shows high scalability. The fastest method processes a network with 1 million nodes in 5 minutes and a network with 4 million nodes in 35 minutes, respectively. Another one processes a network with 500,000 nodes in 50 minutes (7 times faster than the original algorithm), finds community structures that has improved modularity, and scales to a network with 5.5 million.

K. Wakita and T. Tsurumi, "Finding Community Structure in Mega-scale Social Networks." e-print (2007) cs.CY/0702048
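
To make the balancing idea concrete, here is a minimal sketch, not the authors' implementation. It assumes the consolidation ratio of two communities is the smaller size divided by the larger, and that the balanced variant scores each candidate merge by the usual modularity gain times that ratio. The function names, the toy community labels, and the numbers are all hypothetical.

```python
def delta_q(e_ij, a_i, a_j):
    """Modularity gain from merging communities i and j in greedy agglomeration.

    e_ij: fraction of edge ends that join community i to community j
    a_i, a_j: fraction of edge ends attached to each community
    """
    return 2.0 * (e_ij - a_i * a_j)


def consolidation_ratio(size_i, size_j):
    """Assumed balance score: 1.0 for equal sizes, near 0 for lopsided pairs."""
    return min(size_i / size_j, size_j / size_i)


def pick_merge(gains, sizes, balanced=True):
    """Return the candidate pair with the best (optionally size-weighted) gain.

    gains: dict mapping a pair (i, j) to its modularity gain
    sizes: dict mapping a community label to its size
    """
    def score(pair):
        i, j = pair
        weight = consolidation_ratio(sizes[i], sizes[j]) if balanced else 1.0
        return gains[pair] * weight

    return max(gains, key=score)


# Hypothetical state: one super-community "A" and two small ones.
sizes = {"A": 100, "B": 2, "C": 3}
gains = {("A", "B"): 0.010, ("B", "C"): 0.006}

print(pick_merge(gains, sizes, balanced=False))  # plain greedy choice
print(pick_merge(gains, sizes, balanced=True))   # size-weighted choice
```

In this toy state the plain greedy rule feeds the super-community, while the size-weighted rule joins the two small communities instead, which is the behavior that keeps the dendrogram balanced.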

Update 14 April 2008: Ken Wakita tells me that their code is now publicly available online.

-----

[1] Like many heuristics, fast-modularity achieves its speed by being highly biased in the set of solutions it considers. See footnote 7 in the previous post. So, without knowing more about why the algorithm behaves in the way it does, a number of things are not clear, e.g., how close to the maximum modularity the partition it returns is, how sensitive its partition is to small perturbations in the input (removing or adding an edge), whether supplementary information such as the dendrogram formed by the sequence of agglomerations is at all meaningful, whether there is an extremely different partitioning with roughly the same modularity, etc. You get the idea. This is why it's wise to be cautious about over-interpreting the output of these biased methods.

posted February 15, 2007 08:23 AM in Computer Science | permalink | Comments (0)

January 25, 2007

DIMACS - Complex networks and their applications (Day 3)

The third day of the workshop focused on applications to biochemical networks (no food webs), with a lot of that focus being on the difficulties of taking fuzzy biological data (e.g., gene expression data) and converting it into an accurate and meaningful form for further analysis or for hypothesis testing. Only a few of the talks were theoretical, but this perhaps reflects the distribution of focus in biology today. After the workshop was done, I wondered just how much information crossed between the various disciplines represented at the workshop - certainly, I came away from it with a few new ideas, and a few new insights from the good talks I attended. And I think that's the sign of a successful workshop.

Complex Networks in Biology

Chris Wiggins (Columbia) delivered a great survey of interesting connections between machine learning and biochemical networks. It's probably fair to say that biologists are interested in constructing an understanding of cellular-level systems that compares favorably to an electrical engineer's understanding of circuits (Pointer: Can a Biologist Fix a Radio?). But, this is hard because living stuff is messy, inconsistent in funny ways, and has a tendency to change while you're studying it. So, it's harder to get a clean view of what's going on under the hood than it was with particle physics. This, of course, is where machine learning is going to save us - ML offers powerful and principled ways to sift through (torture) all this data.

The most interesting part of his talk, I think, was his presentation of NetBoost, a mechanism discriminator that can tell you which mechanism (among a specific suite of existing candidates) is the most likely to have generated your observed network data [1]. For instance, was it preferential attachment (PA) or duplication-mutation-complementation (DMC) that produced a given protein-interaction network? (Conclusion: the latter is better supported.) The method basically works by constructing a decision tree that looks at the subgraph decomposition of a network and scores its belief that each of the various mechanisms produced it [2]. With the ongoing proliferation of network mechanisms (theorists really don't have enough to do these days), this kind of approach serves as an excellent way to test a new mechanism against the data it's supposed to be emulating.

One point Chris made that resonated strongly with me - and which Cris and Mark made yesterday - is the problem with what you might call "soft validation" [3]. Typically, a study will cluster or do some other kind of analysis with the data, and then tell a biological story about why these results make sense. On the other hand, forcing the clustering to make testable predictions would be a stronger kind of validation.

Network Inference and Analysis for Systems Biology

Just before lunch, Joel Bader (Johns Hopkins) gave a brief talk about his work on building a good view of the protein-protein interaction network (PPIN). The main problem with this widely studied data is the high error rate, both for false positives (interactions that we think exist, but don't) and false negatives (interactions that we think don't exist, but do). To drive home just how bad the data is, he pointed out that two independent studies of the human PPIN showed just 1% overlap in the sets of "observed" interactions.

He's done a tremendous amount of work on trying to improve the accuracy of our understanding of PPINs, but here he described a recent approach that fits degree-based generative models [4] to the data using our old friend expectation-maximization (EM) [5]. His results suggest that we're seeing about 30-40% of the real edges, but that our false positive rate is about 10-15%. This is a depressing signal-to-noise ratio (roughly 1%), because the number of real interactions is O(n), while the number of false positives grows like O(n^2), since every non-interacting pair is a chance to record one. Clearly, the biological methods used to infer the interactions need to be improved before we have a clear idea of what this network looks like, but it also suggests that a lot of the previous results on this network are almost surely wrong. Another question is whether it's possible to incorporate these kinds of uncertainties into our analyses of the network structure.
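
The scaling argument can be checked with a back-of-the-envelope calculation. This is only a sketch under stated assumptions: it reads the false positive rate as a per-pair probability applied to every non-interacting pair, and the network size (6,000 proteins) and mean degree (10) are made-up illustrative values, not numbers from the talk.

```python
def signal_to_noise(n, mean_degree, recall, fp_rate):
    """Ratio of correctly observed edges to spurious ones in a sparse network.

    n: number of proteins (hypothetical)
    mean_degree: average number of real partners per protein (hypothetical)
    recall: fraction of real edges that are observed
    fp_rate: assumed chance that a non-interacting pair is reported as an edge
    """
    real_edges = mean_degree * n / 2        # grows like O(n)
    all_pairs = n * (n - 1) / 2             # grows like O(n^2)
    non_edges = all_pairs - real_edges
    return (recall * real_edges) / (fp_rate * non_edges)


small = signal_to_noise(n=6000, mean_degree=10, recall=0.35, fp_rate=0.10)
large = signal_to_noise(n=12000, mean_degree=10, recall=0.35, fp_rate=0.10)
print(f"n = 6000:  {small:.4f}")
print(f"n = 12000: {large:.4f}")
```

With these illustrative values the ratio comes out below one percent, and doubling the number of proteins roughly halves it, which is the O(n) versus O(n^2) effect at work.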

Activating Interaction Networks and the Dynamics of Biological Networks

Meredith Betterton (UC-Boulder) presented some interesting work on signaling and regulatory networks. One of the more surprising tidbits she used in her motivation is the following. In yeast, the mRNA transcription undergoes a consistent 40-minute genome-wide oscillation, but when exposed to an antidepressant (in this case, phenelzine), the period doubles [6]. (The fact that gene expression oscillates like this poses another serious problem for the results of gene expression analysis that doesn't account for such oscillations.)

The point Meredith wanted to drive home, though, was that we shouldn't just think of biochemical networks as static objects - they also represent the form that the cellular dynamics must follow. Using a simple dynamical model of activation and inhibition, she showed that, given their structure (who points to whom, and whether an edge inhibits or activates its target), a real-world circadian rhythm network and a real-world membrane-based signal cascade basically behave exactly as you would expect - one oscillates and the other doesn't. But, then she showed that it only takes a relatively small number of flips (activation to inhibition, or vice versa) to dramatically change the steady-state behavior of these cellular circuits. In a sense, this suggests that these circuits are highly adaptable, given a little pressure.
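
A toy illustration of how a single flip can change steady-state behavior, using a Boolean model that is an assumption of this sketch and not the model from the talk: a node is on exactly when all of its activators are on and all of its inhibitors are off. The three-node loops below are hypothetical examples, not the real circuits.

```python
from itertools import product


def step(state, edges):
    """Synchronous update of every node.

    edges: list of (source, target, sign), with sign +1 for activation
    and -1 for inhibition. Nodes with no regulators default to on.
    """
    nxt = [1] * len(state)
    for src, dst, sign in edges:
        satisfied = state[src] == 1 if sign > 0 else state[src] == 0
        if not satisfied:
            nxt[dst] = 0
    return tuple(nxt)


def fixed_points(n, edges):
    """Exhaustively list the states that the update rule leaves unchanged."""
    return [s for s in product((0, 1), repeat=n) if step(s, edges) == s]


# A loop of three inhibitions (net negative feedback).
negative_loop = [(2, 0, -1), (0, 1, -1), (1, 2, -1)]
# The same loop with one edge flipped from inhibition to activation.
flipped_loop = [(2, 0, +1), (0, 1, -1), (1, 2, -1)]

print(fixed_points(3, negative_loop))
print(fixed_points(3, flipped_loop))
```

The all-inhibition loop has no steady state at all, so under this rule it can never settle, while flipping a single edge makes two steady states available. Having a steady state does not guarantee that every trajectory reaches it, so this only shows that the flip changes what long-run behavior is possible.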

There are several interesting questions that came to mind while she was presenting. For instance, if we believe there are modules within the signaling pathways that accomplish a specific function, how can we identify them? Do sparsely connected dense subgraphs (assortative community structure) map onto these functional modules? What are the good models for understanding these dynamics: systems of differential equations, discrete time and matrix multiplication, or something more akin to a cellular version of Ohm's Law? [7]

-----

[1] M. Middendorf, E. Ziv and C. Wiggins, "Inferring Network Mechanisms: The Drosophila melanogaster Protein Interaction Network." PNAS USA 102 (9), 3192 (2005).

[2] Technically, it's using these subgraphs as generic features and then crunching the feature vectors from examples of each mechanism through a generalized decision tree in order to learn how to discriminate among them. Boosting is used within this process in order to reduce the error rates. The advantage of this approach to model selection and validation, as Chris pointed out, is that it doesn't assume a priori which features (e.g., degree distribution, clustering coefficient, distance distribution, whatever) are interesting, but rather chooses the ones that can actually discriminate between things we believe are different.

[3] Chris called it "biological validation," but the same thing happens in sociology and Internet modeling, too.

[4] I admit that I'm a little skeptical of degree-based models of these networks, since they seem to assume that we're getting the degree distribution roughly right. That assumption is only reasonable if our sampling of the interactions attached to a particular vertex is unbiased, which I'm not sure about.

[5] After some digging, I couldn't find the reference for this work. I did find this one, however, which illustrates a different technique for a related problem. I. Iossifov et al., "Probabilistic inference of molecular networks from noisy data sources."

[6] C. M. Li and R. R. Klevecz, "A rapid genome-scale response of the transcriptional oscillator to perturbation reveals a period-doubling path to phenotypic change." PNAS USA 103 (44), 16254 (2006).

[7] Maribeth Oscamou pointed out to me during the talk that any attempt to construct such rules has to account for processes like the biochemical degradation of the signals. That is, unlike electric circuits, there's no strict conservation of the "charge" carrier.

posted January 25, 2007 01:20 PM in Scientifically Speaking | permalink | Comments (0)

January 24, 2007

DIMACS - Complex networks and their applications (Day 2)

There were several interesting talks today, or rather, I should say that there were several talks today that made me think about things beyond just what the presenters said. Here's a brief recap of the ones that made me think the most, and some commentary about what I thought about. There were other good talks today, too. For instance, I particularly enjoyed Frank McSherry's talk on doing PageRank on his laptop. There was also one talk on power laws and scale-free graphs that stimulated a lot of audience, ah, interaction - it seems that there's a lot of confusion both over what a scale-free graph is (admittedly the term has no consistent definition in the literature, although there have been some recent attempts to clarify it in a principled manner), and how to best show that some data exhibit power-law behavior. Tomorrow's talks will be more about networks in various biological contexts.

Complex Structures in Complex Networks

Mark Newman's (U. Michigan) plenary talk mainly focused on the importance of having good techniques to extract information from networks, and being able to do so without making a lot of assumptions about what the technique is supposed to look for. That is, rather than assume that some particular kind of structure exists and then look for it in our data, why not let the data tell you what kind of interesting structure it has to offer? [1] The tricky thing about this approach to network analysis, though, is working out a method that is flexible enough to find many different kinds of structure, and to present only that which is unusually strong. (Point to ponder: what should we mean by "unusually strong"?) This point was a common theme in a couple of the talks today.

The first example that Mark gave of a technique that has this nice property was a beautiful application of spectral graph theory to the task of finding a partition of the vertices that gives an extremal value of modularity. If we ask for the maximum modularity, this heuristic method [2], using the positive eigenvalues of the resulting solution, gives us a partition with very high modularity. But, using the negative eigenvalues gives a partition that minimizes the modularity. I think we normally think of modules as meaning assortative structures, i.e., sparsely connected dense subgraphs. But, some networks exhibit modules that are approximately bipartite, i.e., they are disassortative, being densely connected sparse subgraphs. Mark's method naturally allows you to look for either.

The second method he presented was a powerful probabilistic model of node clustering that can be appropriately parameterized (fitted to data) via expectation-maximization (EM). This method can be used to accomplish much the same results as the previous spectral method, except that it can look for both assortative and disassortative structure simultaneously in the same network.
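
A rough sketch of the spectral idea described above, under some assumptions: it builds the modularity matrix B = A - k k^T / 2m, splits the vertices in two by the signs of a single eigenvector, and finds that eigenvector with plain power iteration on a shifted matrix. This is a simplification for illustration, not the method as presented in the talk; the function names, the fixed starting vector, and the two toy graphs are hypothetical, and the graph is assumed to have at least one edge.

```python
def modularity_matrix(adj):
    """B[i][j] = A[i][j] - k_i * k_j / (2m) for a symmetric 0/1 adjacency matrix."""
    n = len(adj)
    k = [sum(row) for row in adj]
    two_m = sum(k)
    return [[adj[i][j] - k[i] * k[j] / two_m for j in range(n)] for i in range(n)]


def extreme_eigenvector(B, largest=True, iters=500):
    """Power iteration for the eigenvector of the largest (or smallest) eigenvalue."""
    n = len(B)
    # Shifting by the largest absolute row sum makes every eigenvalue non-negative.
    shift = max(sum(abs(x) for x in row) for row in B)
    sign = 1.0 if largest else -1.0
    v = [float(i + 1) for i in range(n)]  # arbitrary fixed starting vector
    for _ in range(iters):
        w = [sign * sum(B[i][j] * v[j] for j in range(n)) + shift * v[i]
             for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    return v


def spectral_split(adj, largest=True):
    """Divide the vertices into two groups by the signs of the chosen eigenvector."""
    v = extreme_eigenvector(modularity_matrix(adj), largest)
    side = {i for i, x in enumerate(v) if x > 0}
    return side, set(range(len(adj))) - side


# Two triangles joined by the single edge 2-3 (assortative structure).
two_triangles = [
    [0, 1, 1, 0, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 0, 0],
    [0, 0, 1, 0, 1, 1],
    [0, 0, 0, 1, 0, 1],
    [0, 0, 0, 1, 1, 0],
]
# A four-cycle 0-1-2-3-0, which is bipartite (disassortative structure).
square = [
    [0, 1, 0, 1],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 0, 1, 0],
]

print(spectral_split(two_triangles, largest=True))
print(spectral_split(square, largest=False))
```

The most positive eigenvalue separates the two triangles, while the most negative one separates the two sides of the four-cycle. Which group comes back first depends on the arbitrary sign of the eigenvector, so only the grouping itself is meaningful.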

Hierarchical Structure and the Prediction of Missing Links