September 09, 2011

What is the probability of a 9/11-size terrorist attack?

Sunday is the 10-year anniversary of the 9/11 terrorist attacks. As a commemoration of the day, I'm going to investigate answers to a very simple question: what is the probability of a 9/11-size or larger terrorist attack?

There are many ways we could try to answer this question. Most of them don't involve using data, math and computers (my favorite tools), so we will ignore those. Even among quantitative approaches, methods differ in how strong the assumptions are that they make about the social and political processes that generate terrorist attacks. We'll come back to this point throughout the analysis.

Before doing anything new, it's worth repeating something old. For the better part of the past 8 years, I've been studying the large-scale patterns and dynamics of global terrorism (see for instance, here and here), and throughout I've emphasized the importance of taking an objective approach to the topic. Terrorist attacks may seem inherently random or capricious or even strategic, but the empirical evidence demonstrates that there are patterns and that these patterns can be understood scientifically. Earthquakes and seismology serve as an illustrative example. Earthquakes are extremely difficult to predict, that is, to say beforehand when, where and how big they will be. And yet, plate tectonics and geophysics tell us a great deal about where and why they happen, and the famous Gutenberg-Richter law tells us roughly how often quakes of different sizes occur. That is, we're quite good at estimating the long-term frequencies of earthquakes because working at larger scales allows us to leverage a lot of empirical and geological data. The cost is that we lose the ability to make specific statements about individual earthquakes, but the advantage is insight into the fundamental patterns and processes.

The same can be done for terrorism. There's now a rich and extensive modern record of terrorist attacks worldwide [1], and there's no reason we can't mine this data for interesting observations about global patterns in the frequencies and severities of terrorist attacks. This is where I started back in 2003 and 2004, when Maxwell Young and I started digging around in global terrorism data. Catastrophic events like 9/11, which (officially) killed 2749 people in New York City, might seem so utterly unique that they must be one-off events. In their particulars, this is almost surely true. But, when we look at how often events of different sizes (number of fatalities) occur in the historical record of 13,407 deadly events worldwide [2], we see something remarkable: their relative frequencies follow a very simple pattern.

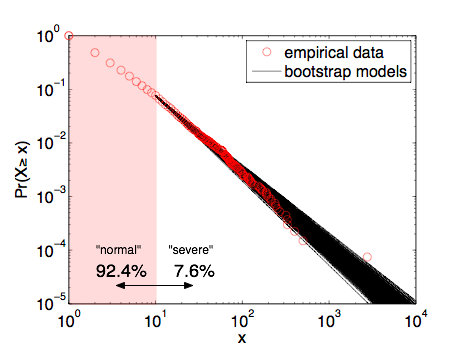

The figure shows the fraction of events that killed at least x individuals, where I've divided them into "severe" attacks (10 or more fatalities) and "normal" attacks (fewer than 10 fatalities). The lion's share (92.4%) of these events are of the "normal" type, killing fewer than 10 individuals, but 7.6% are "severe", killing 10 or more. Long-time readers have likely heard this story before and know where it's going. The solid line on the figure shows the best-fitting power-law distribution for these data [3]. What's remarkable is that 9/11 lies very close to the curve, suggesting that, statistically speaking, it is not an outlier at all.
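To make the figure's construction concrete, here is a minimal Python sketch (my own illustration, not the code behind the figure) of the empirical complementary cumulative distribution: for each observed severity x, the fraction of events that killed at least x people. The function names and the toy fatality counts are hypothetical; the real analysis used the 13,407 deadly events described above.

```python
from collections import Counter


def empirical_ccdf(severities):
    """Return (x, fraction of events with severity >= x) for each distinct x, ascending."""
    n = len(severities)
    counts = Counter(severities)
    points = []
    remaining = n  # number of events with severity >= the current x
    for x in sorted(counts):
        points.append((x, remaining / n))
        remaining -= counts[x]
    return points


def severe_fraction(severities, threshold=10):
    """Fraction of events that killed at least `threshold` people."""
    return sum(1 for s in severities if s >= threshold) / len(severities)


# Hypothetical fatality counts, for illustration only (not the MIPT data).
toy = [1, 1, 1, 2, 2, 3, 5, 8, 12, 40]
for x, frac in empirical_ccdf(toy):
    print(x, frac)
print(severe_fraction(toy))
```

Plotting these points on log-log axes gives the kind of curve shown in the figure; a power law shows up as a roughly straight line in the tail.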

A first estimate: In 2009, the Department of Defense received the results of a commissioned report on "rare events", with a particular emphasis on large terrorist attacks. In section 3, the report walks us through a simple calculation of the probability of a 9/11-sized attack or larger, based on my power-law model. It concludes that there was a 23% chance of an event that killed 2749 or more between 1968 and 2006. [4] The most notable thing about this calculation is that its magnitude makes it clear that 9/11 should not be considered a statistical outlier on the basis of its severity.

How can we do better: Although probably in the right ballpark, the DoD estimate makes several strong assumptions. First, it assumes that the power-law model holds over the entire range of severities (that is, x>0). Second, it assumes that the model I published in 2005 is perfectly accurate, in both its parameter estimates and its functional form. Third, it assumes that events are generated independently by a stationary process, meaning that neither the production rate of events over time nor the underlying social or political processes that determine the frequency or severity of events have changed. We can improve our estimates by improving on these assumptions.

A second estimate: The first assumption is the easiest to fix. Empirically, 7.6% of events are "severe", killing at least 10 people. But the power-law model assumed by the DoD report predicts that only 4.2% of events are severe. This means that the DoD model underestimates the probability of a 9/11-sized event; that is, the 23% estimate is too low. We can correct this difference by using a piecewise model: with probability 0.076 we generate a "severe" event whose size is given by a power law that starts at x=10; otherwise we generate a "normal" event by choosing a severity from the empirical distribution for 0 < x < 10. [5] Walking through the same calculations as before yields an improved estimate of a 32.6% chance of a 9/11-sized or larger event between 1968 and 2008.
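A minimal sketch of the piecewise generative model, under a few assumptions: the power-law tail is treated as continuous and sampled by inverse transform, alpha=2.4 (the value used in [5]), and the "normal" branch simply resamples a list of observed small-event severities. The helper names and the toy list below are hypothetical stand-ins, not the actual data or code.

```python
import random

SEVERE_FRACTION = 0.076  # empirical fraction of events with >= 10 deaths (from the text)
X_MIN = 10               # the power-law tail starts at x = 10
ALPHA = 2.4              # power-law exponent (assumed here, as in footnote [5])


def sample_power_law(alpha, xmin, rng):
    """Continuous power law on [xmin, inf) via inverse-transform sampling."""
    u = rng.random()  # uniform on [0, 1), so 1 - u lies in (0, 1]
    return xmin * (1.0 - u) ** (-1.0 / (alpha - 1.0))


def sample_event(normal_severities, rng, severe_fraction=SEVERE_FRACTION,
                 alpha=ALPHA, xmin=X_MIN):
    """Draw one event severity from the piecewise model."""
    if rng.random() < severe_fraction:
        return sample_power_law(alpha, xmin, rng)  # "severe" branch
    # "Normal" branch: resample an observed severity with 0 < x < 10.
    return rng.choice(normal_severities)


# Hypothetical "normal" severities standing in for the empirical 0 < x < 10 data.
normal = [1, 1, 1, 2, 2, 3, 4, 6, 9]
rng = random.Random(42)
draws = [sample_event(normal, rng) for _ in range(10_000)]
print(sum(d >= X_MIN for d in draws) / len(draws))  # should be near 0.076
```

Splitting the model this way lets the tail follow the power law while the bulk of small events keeps its empirical shape, which is exactly the mismatch (7.6% vs. 4.2% severe) the single power law missed.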

A third estimate: The second assumption is also not hard to improve on. Because our power-law model is estimated from finite empirical data, we cannot know the alpha parameter perfectly. Our uncertainty in alpha should propagate through to our estimate of the probability of catastrophic events. A simple way to capture this uncertainty is to use a computational bootstrap resampling procedure to generate many synthetic data sets like our empirical one. Estimating the alpha parameter for each of these yields an ensemble of models that represents our uncertainty in the model specification that comes from the empirical data.
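Here is a sketch of the bootstrap step, assuming the continuous power-law maximum-likelihood estimator alpha = 1 + n / sum(ln(x_i/xmin)) with xmin held fixed at 10 (a simplification; a fuller analysis might also re-estimate xmin on each resample). Because the real event data isn't reproduced here, the example fits a hypothetical synthetic tail of 1024 draws from alpha=2.4; its bootstrap spread should be comparable in size to the standard deviation quoted below, but it is not the published result.

```python
import math
import random
import statistics


def alpha_mle(log_ratios):
    """Continuous power-law MLE: alpha = 1 + n / sum(ln(x_i / xmin))."""
    return 1.0 + len(log_ratios) / sum(log_ratios)


def bootstrap_alpha(severities, xmin, n_boot=1000, seed=0):
    """Resample the tail data with replacement and refit alpha each time."""
    rng = random.Random(seed)
    # Precompute ln(x / xmin) for the tail so each refit is just a sum.
    logs = [math.log(x / xmin) for x in severities if x >= xmin]
    return [alpha_mle(rng.choices(logs, k=len(logs))) for _ in range(n_boot)]


# Hypothetical stand-in data: 1024 draws from a power law with alpha = 2.4, xmin = 10.
gen = random.Random(1)
tail = [10 * (1.0 - gen.random()) ** (-1.0 / 1.4) for _ in range(1024)]
alphas = bootstrap_alpha(tail, xmin=10)
print(statistics.mean(alphas), statistics.stdev(alphas))
```

Each entry of `alphas` defines one model in the ensemble; the spread of the list is the uncertainty that gets propagated into the probability estimate.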

This figure overlays 1000 of these bootstrap models, showing that they do make slightly different estimates of the probability of 9/11-sized events or larger. As a sanity check, we find that the mean of these bootstrap parameters is alpha=2.397 with a standard deviation of 0.043 (quite close to the 2.4+/-0.1 value I published in 2009 [6]). Continuing with the simulation approach, we can numerically estimate the probability of a 9/11-sized or larger event by drawing synthetic data sets from the models in the ensemble and then asking what fraction of those events are 9/11-sized or larger. Using 10,000 repetitions yields an improved estimate of 40.3%.
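The simulation can be sketched as follows. This is an illustration under stated assumptions, not the code behind the 40.3% figure: each repetition picks one alpha from an ensemble, draws N=1024 severe-event sizes from a continuous power law starting at x=10, and records whether any reaches 2749. The ensemble here is a hypothetical stand-in drawn from a normal distribution with the bootstrap mean and standard deviation quoted above, and "normal" events are omitted because they can never reach 9/11 size, so the result need not match 40.3%.

```python
import random

THRESHOLD = 2749  # 9/11 fatalities
XMIN = 10         # start of the power-law tail
N_SEVERE = 1024   # severe events, 1968-2008 (from footnote [5])


def prob_at_least_one(alphas, n_severe=N_SEVERE, xmin=XMIN,
                      threshold=THRESHOLD, reps=10_000, seed=0):
    """Fraction of simulated histories containing >= 1 event of size >= threshold.

    Each repetition picks a model (alpha) from the ensemble, draws n_severe
    power-law severities by inverse transform, and checks whether any of
    them reaches the threshold.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        alpha = rng.choice(alphas)
        exponent = -1.0 / (alpha - 1.0)
        if any(xmin * (1.0 - rng.random()) ** exponent >= threshold
               for _ in range(n_severe)):
            hits += 1
    return hits / reps


# Hypothetical ensemble standing in for the bootstrap alphas (mean 2.397, sd 0.043).
ens_rng = random.Random(1)
ensemble = [ens_rng.gauss(2.397, 0.043) for _ in range(1000)]
print(prob_at_least_one(ensemble, reps=2_000))  # the text used 10,000 repetitions
```

Drawing alpha afresh in each repetition is what lets parameter uncertainty widen the tail: models with slightly smaller alpha produce large events much more often, and that asymmetry pushes the average probability up.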

Some perspective: Having now gone through three calculations, it's notable that the probability of a 9/11-sized or larger event has almost doubled as we've improved our estimates. There are still additional improvements we could make, however, and these might push the number back down. For instance, although the power-law model is a statistically plausible model of the frequency-severity data, it's not the only such model. Alternatives like the stretched exponential or the log-normal decay faster than the power law, and if we were to add them to the ensemble of models in our simulation, they would produce 9/11-sized or larger events less often and thus probably pull the probability estimate down somewhat. [7]

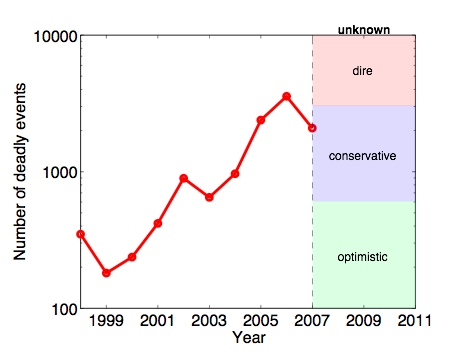

Peering into the future: Showing that catastrophic terrorist attacks like 9/11 are not in fact statistical outliers, given the sheer magnitude and diversity of terrorist attacks worldwide over the past 40 years, is all well and good, you say. But what about the future? In principle, these same models could easily be used to make such an estimate. The critical piece of information for doing so, however, is a clear estimate of the trend in the number of events each year. Under these models, the larger that number, the greater the risk of severe events. That is, under a fixed model like this, the probability of catastrophic events is directly related to the overall level of terrorism worldwide. Let's look at the data.

Do you see a trend here? It's difficult to say, especially with the changing nature of the conflicts in Iraq and Afghanistan, where many of the terrorist attacks of the past 8 years have been concentrated. It seems unlikely, however, that we will return to the 2001 levels (200-400 events per year; the optimist's scenario). A dire forecast would have the level continue to increase toward a scary 10,000 events per year. A more conservative forecast, however, would have the rate continue as-is relative to 2007 (the last full year for which I have data), or maybe even decrease to roughly 1000 events per year. Using our estimates from above, 1000 events overall would generate about 75 "severe" events (10 or more fatalities) per year. Plugging this number into our computational model above (the third-estimate approach), we get an estimate of roughly a 3% chance of a 9/11-sized or larger attack each year, or about a 30% chance over the next decade. Not a certainty by any means, but significantly greater than is comfortable. Notably, this probability is in the same ballpark as our estimates for the past 40 years, which goes to show how dramatically the overall level of terrorism worldwide has increased during those decades: the next 10 years carry roughly the same risk as the previous 40.
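A rough back-of-the-envelope version of this forecast, assuming independent events (a Poisson approximation), the severe fraction of 0.076, and the single alpha=2.4 model rather than the bootstrap ensemble. Here 1000 × 0.076 gives 76 severe events per year, which the text rounds to about 75. The function name and defaults are my own; treat the output as a sanity check on the order of magnitude, not a replacement for the simulation.

```python
import math


def annual_and_decade_risk(events_per_year, severe_fraction=0.076,
                           alpha=2.4, xmin=10, threshold=2749, years=10):
    """Risk of >= 1 event of size >= threshold per year and over `years` years.

    Assumes independent events, so the count of 9/11-sized-or-larger events
    is approximately Poisson with mean lam per year.
    """
    p_tail = (threshold / xmin) ** (-(alpha - 1.0))  # ccdf of a severe event
    lam = events_per_year * severe_fraction * p_tail  # expected big events per year
    per_year = 1.0 - math.exp(-lam)
    over_period = 1.0 - math.exp(-lam * years)
    return per_year, over_period


per_year, decade = annual_and_decade_risk(1000)
print(f"per year: {per_year:.3f}, over a decade: {decade:.3f}")
```

Because the expected count scales linearly with `events_per_year`, doubling the overall level of terrorism roughly doubles the annual risk in this model, which is the point the paragraph above makes.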

It bears repeating that this forecast is only as good as the models on which it is based, and there are many things we still don't know about the underlying social and political processes that generate events at the global scale. (This is in contrast to the models the National Hurricane Center uses to make hurricane track forecasts.) Our estimates for terrorism all assume a homogeneous and stationary process where event severities are independent random variables, but we would be foolish to believe that these assumptions are true in the strong sense. Technology, culture, international relations, democratic movements, urban planning, national security, etc. are all poorly understood and highly non-stationary processes that could change the underlying dynamics in the future, making our historical models less reliable than we would like. So, take these estimates for what they are: calculations and computations using reasonable but potentially wrong assumptions, based on the best historical data and statistical models currently available. In that sense, it's remarkable that these models do as well as they do in making fairly accurate long-term probabilistic estimates, and it seems entirely reasonable to believe that better estimates can be had with better, more detailed models and data.

Update 9 Sept. 2011: In related news, there's a piece in the Boston Globe (free registration required) about the impact 9/11 had on what questions scientists investigate that discusses some of my work.

-----

[1] Estimates differ between databases, but the number of domestic or international terrorist attacks worldwide between 1968 and 2011 is somewhere in the vicinity of 50,000-100,000.

[2] The historical record here is my copy of the National Memorial Institute for the Prevention of Terrorism (MIPT) Terrorism Knowledge Base, which stores detailed information on 36,018 terrorist attacks worldwide from 1968 to 2008. Sadly, the Department of Homeland Security pulled the plug on the MIPT data collection effort a few years ago. The best remaining data collection effort is the one run by the University of Maryland's National Consortium for the Study of Terrorism and Response to Terrorism (START) program.

[3] For newer readers: a power-law distribution is a funny kind of probability distribution function. Power laws pop up all over the place in complex social and biological systems. If you'd like an example of how weird power-law distributed quantities can be, I highly recommend Clive Crook's 2006 piece in The Atlantic titled "The Height of Inequality", in which he considers what the world would look like if human height were distributed as unequally as human wealth (a quantity that is very roughly power-law-like).

[4] If you're curious, here's how they did it. First, they took the power-law model and the parameter value I estimated (alpha=2.38) and computed the model's complementary cumulative distribution function. The "ccdf" tells you the probability of observing an event at least as large as x, for any choice of x. Plugging in x=2749 yields p=0.0000282. This gives the probability of any single event being 9/11-sized or larger. The report was using an older, smaller data set with N=9101 deadly events worldwide. The expected number of these events 9/11-sized or larger is then p*N=0.257. Finally, if events are independent then the probability that we observe at least one event 9/11-sized or larger in N trials is 1-exp(-p*N)=0.226. Thus, about a 23% chance.
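The arithmetic in this footnote, reproduced in a few lines of Python. The final step uses the Poisson approximation 1-exp(-p*N); the exact binomial form 1-(1-p)^N is included for comparison and gives essentially the same answer when p is this small.

```python
import math

p = 0.0000282  # P(X >= 2749) for a single event under the DoD's alpha=2.38 model
N = 9101       # deadly events in the older data set used by the report

expected = p * N                # expected number of 9/11-sized-or-larger events
p_any = 1 - math.exp(-expected)  # Poisson approximation, independent events
exact = 1 - (1 - p) ** N         # exact binomial probability of at least one
print(round(expected, 3), round(p_any, 3), round(exact, 3))
```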

[5] This changes the calculations only slightly. Using alpha=2.4 (the estimate I published in 2009), given that a "severe" event happens, the probability that it is at least as large as 9/11 is p=0.00038473 and there were only N=1024 of them from 1968-2008. Note that the probability is about a factor of 10 larger than the DoD estimate while the number of "severe" events is about a factor of 10 smaller, which implies that we should get a probability estimate close to theirs.
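The same calculation for the piecewise model, assuming a continuous power law above xmin=10, whose ccdf is (x/xmin)^-(alpha-1). With alpha=2.4 and x=2749 this reproduces the quoted p=0.00038473 to the digits shown, and plugging in N=1024 gives the 32.6% estimate from the second-estimate paragraph.

```python
import math

alpha, xmin, x = 2.4, 10, 2749
p = (x / xmin) ** (-(alpha - 1))  # continuous power-law ccdf, P(X >= x | X >= xmin)
N = 1024                          # severe events, 1968-2008
p_any = 1 - math.exp(-p * N)      # Poisson approximation, independent events
print(f"p = {p:.8f}, P(at least one) = {p_any:.3f}")
```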

[6] In "Power-law distributions in empirical data," SIAM Review 51(4), 661-703 (2009), with Cosma Shalizi and Mark Newman.

[7] This improvement is mildly non-trivial, so perhaps too much effort for an already long-winded blog entry.

posted September 9, 2011 02:17 PM in Terrorism

November 18, 2010

Algorithms, numbers and quantification

On the plane back from Europe the other day I was reading this month's Atlantic Monthly and happened across a little piece by Alexis Madrigal called "Take the Data Out of Dating" about OkCupid's clever use of algorithms to increase the frequency of "three-ways" (which in dating-website-speak means a person sent a note, received a reply, and fired off a follow-up; not exactly a direct measure of their success at helping people find love, but that's their proxy of choice). It's a thoughtful piece, largely because the punch line resonates with many of my recent feelings about the creeping use of scientometrics by higher education administrators trying to understand what exactly their faculty have or have not done, and how they compare to their peers. (I could list a dozen other ways numbers are increasingly invading decision-making processes that used to be based on principles and qualities, but ack, there are so many.) More generally, I think it puts in good perspective exactly what we lose when we use numbers or algorithms to automate decisions about inherently human problems. Here it is:

Algorithms are made to restrict the amount of information the user sees—that’s their raison d'etre. By drawing on data about the world we live in, they end up reinforcing whatever societal values happen to be dominant, without our even noticing. They are normativity made into code—albeit a code that we barely understand, even as it shapes our lives.

We’re not going to stop using algorithms. They’re too useful. But we need to be more aware of the algorithmic perversity that’s creeping into our lives. The short-term fit of a dating match or a Web page doesn’t measure the long-term value it may hold. Statistically likely does not mean correct, or just, or fair. Google-generated kadosh [ed: best choice] is meretricious, offering a desiccated kind of choice. It’s when people deviate from what we predict they’ll do that they prove they are individuals, set apart from all others of the human type.

posted November 18, 2010 10:41 AM in Thinking Aloud

October 27, 2010

Story-telling, statistics, and other grave insults

The New York Times (and the NYT Magazine) has been running a series of pieces about math, science and society written by John Allen Paulos, a mathematics professor at Temple University and author of several popular books. His latest piece caught my eye because it's a topic close to my heart: stories vs. statistics. That is, when we seek to explain something [1], do we use statistics and quantitative arguments using mainly numbers or do we use stories and narratives featuring actors, motivations and conscious decisions? [2] Here are a few good excerpts from Paulos's latest piece:

...there is a tension between stories and statistics, and one under-appreciated contrast between them is simply the mindset with which we approach them. In listening to stories we tend to suspend disbelief in order to be entertained, whereas in evaluating statistics we generally have an opposite inclination to suspend belief in order not to be beguiled. A drily named distinction from formal statistics is relevant: we’re said to commit a Type I error when we observe something that is not really there and a Type II error when we fail to observe something that is there. There is no way to always avoid both types, and we have different error thresholds in different endeavors, but the type of error people feel more comfortable may be telling.

...

I’ll close with perhaps the most fundamental tension between stories and statistics. The focus of stories is on individual people rather than averages, on motives rather than movements, on point of view rather than the view from nowhere, context rather than raw data. Moreover, stories are open-ended and metaphorical rather than determinate and literal.

It seems to me that for science, the correct emphasis should be on the statistics. That is, we should be more worried about observing something that is not really there. But we humans often find statistics too dry and too abstract to understand intuitively, too dry to generate that comfortable internal feeling of understanding. Thus, our peers often demand that we give not only the statistical explanation but also a narrative one. Sometimes this can be tricky because the structures of the two modes of explanation are in fundamental opposition, for instance, if the narrative must include notions of randomness or stochasticity. In such a case, there is no reason for any particular outcome, only reasons for ensembles or patterns of outcomes. The idea that things can happen for no reason is highly counterintuitive [3], and yet in the statistical sciences (which today means essentially all sciences), this is often a critical part of the correct explanation [4]. For the social sciences, I think this is an especially difficult balance to strike because our intuition about how the world works is built up from our own individual-level experiences, while many of the phenomena we care about are patterns above that level, at the group or population levels [5].

This is not a new observation and it is not a tension exclusive to the social sciences. For instance, here is Stephen J. Gould (1941-2002), the eminent American paleontologist, speaking about the differences between microevolution and macroevolution (excerpted from Ken McNamara's "Evolutionary Trends"):

In Flatland, E.A. Abbot's (1884) classic science-fiction fable about realms of perception, a sphere from the world of three dimensions enters the plane of two-dimensional Flatland (where it is perceived as an expanding circle). In a notable scene, he lifts a Flatlander out of his own world and into the third dimension. Imagine the conceptual reorientation demanded by such an utterly new and higher-order view. I do not suggest that the move from organism to species could be nearly so radical, or so enlightening, but I do fear that we have missed much by over reliance on familiar surroundings.

An instructive analogy might be made, in conclusion, to our successful descent into the world of genes, with resulting insight about the importance of neutralism in evolutionary change. We are organisms and tend to see the world of selection and adaptation as expressed in the good design of wings, legs, and brains. But randomness may predominate in the world of genes--and we might interpret the universe very differently if our primary vantage point resided at this lower level. We might then see a world of largely independent items, drifting in and out by the luck of the draw--but with little islands dotted about here and there, where selection reins in tempo and embryology ties things together. What, then, is the different order of a world still larger than ourselves? If we missed the world of genic neutrality because we are too big, then what are we not seeing because we are too small? We are like genes in some larger world of change among species in the vastness of geological time. What are we missing in trying to read this world by the inappropriate scale of our small bodies and minuscule lifetimes?

To quote Howard T. Odum (1924-2002), the eminent American ecologist, on a similar theme: "To see these patterns which are bigger than ourselves, let us take a special view through the macroscope." Statistical explanations, and the weird and diffuse notions of causality that come with them, seem especially well suited to express in a comprehensible form what we see through this "macroscope" (and often what we see through microscopes). And increasingly, our understanding of many important phenomena, be they social network dynamics, terrorism and war, sustainability, macroeconomics, ecosystems, the world of microbes and viruses or cures for complex diseases like cancer, depends on our seeing clearly through some kind of macroscope to understand the statistical behavior of a population of potentially interacting elements.

Seeing clearly, however, depends on finding new and better ways to build our intuition about the general principles that take inherent randomness or contingency at the individual level and produce complex patterns and regularities at the macroscopic or population level. That is, to help us understand the many counter-intuitive statistical mechanisms that shape our complex world, we need better ways of connecting statistics with stories.

27 October 2010: This piece is also being featured on Nature's Soapbox Science blog.

-----

[1] Actually, even defining what we mean by "explain" is a devilishly tricky problem. Invariably, different fields of scientific research have (slightly) different definitions of what "explain" means. In some cases, a statistical explanation is sufficient, in others it must be deterministic, while in still others, even if it is derived using statistical tools, it must be rephrased in a narrative format in order to provide "intuition". I'm particularly intrigued by the difference between the way people in machine learning define a good model and the way people in the natural sciences define it. The difference appears, to my eye, to be different emphases on the importance of intuitiveness or "interpretability"; it's currently deemphasized in machine learning while the opposite is true in the natural sciences. Fortunately, a growing number of machine learners are interested in building interpretable models, and I expect great things for science to come out of this trend.

In some areas of quantitative science, "story telling" is a grave insult, leveled whenever a scientist veers too far from statistical modes of explanation ("science") toward narrative modes ("just so stories"). While sometimes a justified complaint, I think completely deemphasizing narratives can undermine scientific progress. Human intuition is currently our only way to generate truly novel ideas, hypotheses, models and principles. Until we can teach machines to generate truly novel scientific hypotheses from leaps of intuition, narratives, supported by appropriate quantitative evidence, will remain a crucial part of science.

[2] Another fascinating aspect of the interaction between these two modes of explanation is that one seems to be increasingly invading the other: narratives, at least in the media and other kinds of popular discourse, increasingly ape the strong explanatory language of science. For instance, I wonder when Time Magazine started using formulaic titles for its issues like "How X happens and why it matters" and "How X affects Y", which dominate its covers today. There are a few individual writers who are amazingly good at this form of narrative, with Malcolm Gladwell being the one that leaps most readily to my mind. His writing is fundamentally in a narrative style, stories about individuals or groups or specific examples, but the language he uses is largely scientific, speaking in terms of general principles and notions of causality. I can also think of scientists who import narrative discourse into their scientific writing to great effect. Doing so well can make scientific writing less boring and less opaque, but if it becomes more important than the science itself, it can lead to "pathological science".

[3] Which is perhaps why the common belief that "everything happens for a reason" persists so strongly in popular culture.

[4] It cannot, of course, be the entire explanation. For instance, the notion among Creationists that natural selection is equivalent to "randomness" is completely false; randomness is a crucial component of the way natural selection constructs complex structures (without the randomness, natural selection could not work), but the selection itself (what lives versus what dies) is highly non-random, and that is what makes it such a powerful process.

What makes statistical explanations interesting is that many of the details are irrelevant, i.e., generated by randomness, but the general structure, the broad brush-strokes of the phenomena are crucially highly non-random. The chief difficulty of this mode of investigation is in correctly separating these two parts of some phenomena, and many arguments in the scientific literature can be understood as a disagreement about the particular separation being proposed. Some arguments, however, are more fundamental, being about the very notion that some phenomena are partly random rather than completely deterministic.

[5] Another source of tension on this question comes from our ambiguous understanding of the relationship between our perception and experience of free will and the observation of strong statistical regularities among groups or populations of individuals. This too is a very old question. It tormented Rev. Thomas Malthus (1766-1834), the great English demographer, in his efforts to understand how demographic statistics like birth rates could be so regular despite the highly contingent nature of any particular individual's life. Malthus's struggles later inspired Ludwig Boltzmann (1844-1906), the famous Austrian physicist, to use a statistical approach to model the behavior of gas particles in a box. (Boltzmann had previously been using a deterministic approach to model every particle individually, but found it too complicated.) This contributed to the birth of statistical physics, one of the three major branches of modern physics and arguably the branch most relevant to understanding the statistical behavior of populations of humans or genes.

posted October 27, 2010 07:15 AM in Scientifically Speaking

October 05, 2010

Steven Johnson on where good ideas come from

Remember that fun cartoonist video paired with Philip Zimbardo talking about our funny relationship with time? Unsurprisingly, there are more such videos. I like this one, on Steven Johnson's new book "Where Good Ideas Come From". There's not a whole lot really revolutionary in what he says, but it's a good and thoughtful reminder that good ideas need time to incubate and they often need to be shared, borrowed or recombined (like genes, no?) in order to reach their full potential.

Of course, there's an important flip side to this sensible-sounding idea, namely: don't bad ideas also often incubate for a long time and get shared, borrowed or recombined? The real question would seem to be: are there any genuine differences between where good ideas come from and where bad ideas come from? Selfishly, I'd like to think that the pressure of "publish or perish" and "fund thyself" is an example of how to encourage the production of bad ideas, but then I have to remind myself that many of the people who launched the scientific revolution in the 1600s in England, founded the Royal Society, and changed the world forever also had to work as medical doctors in order to fund their research on the side.

Tip to Nikolaus.

posted October 5, 2010 04:30 PM in Thinking Aloud

January 31, 2010

Why I think the iPad is good

Since Apple announced it, I've found myself in the strange position of defending the iPad to my friends, who uniformly think it sucks. I don't think a single one of them agrees with me that the iPad is good. Most of them cite things like the name, the lack of multi-tasking, and the lack of deep customizability (i.e., programming) as reasons why it sucks. (If you want a full list of these kinds of reasons, the Huffington Post gives nine of them). I don't disagree with these complaints at all, although I'm pretty sure that some of them will be fixed later on (like the multi-tasking).

Some complaints are more thoughtful, that the iPad is a closed device (like the iPod touch / iPhone), that you can only do the things on it that Apple allows you to and that these are basically focused on consuming media (and spending money at Apple's online stores). (These points are made well by io9's review of the iPad). And, I don't disagree that this is a problem with Apple's business strategy for the iPad, and that it will limit its appeal among more serious computer users.

But, I think all of these complaints miss the point of what is good about the iPad.

The iPad is good because it will push the common experience of computing more toward how we interact with every other device / object in the world, i.e., pushing, pulling, prodding and poking them, and this is the future. (Imagine programming a computer using a visual programming language, rather than using an arcane character-based syntax we currently use; I don't know if it would be genuinely better, but I'd sure like to find out.) One thing that sucks about how current computers are designed is how baroque their interfaces are. Getting them to do even simple things requires learning complex sequences of actions using complicated indirect interfaces. By making the mode of interaction more direct, devices like the iPad (even with all of its flaws) will make many kinds of simple interactions with computing devices easier, and that's a good thing.

I think the iPad is disappointing to many techy people because they wanted it to completely replace their current laptop. They wanted a device that would do everything they can do now, but using a cool multi-touch interface. (To be honest, it's not even clear that this is possible.) But I think Apple knows that these people are not the target audience for the iPad. The people who will buy and love the iPad are your parents and your children. These are people who primarily want a casual computing device (for things like online shopping, reading the news and gossip sites, listening to music, watching tv/movies, reading email, etc.), who don't care too much about hacking their computers, and who don't mind playing inside Apple's closed world (which more and more of us do anyway; think iTunes).

If things go the way I think they will, in 20 years, the kids I'll be teaching at CU Boulder will have had their first experience with computers on something like an iPad, and they're going to expect all "real" computers to be as physically intuitive as it is. They're going to hate keyboards and mice (which will go the way of standard transmissions in cars), and they're going to think current laptops are "clunky". They'll also know that serious computing activities require a serious computer (something more customizable and programmable than an iPad). But most people don't do or care about serious computing activities, and I think Apple knows this.

So, I think most of the criticism of the iPad is sour grapes (by techy people who misunderstand whom Apple is targeting with the iPad and, more fundamentally, what Apple has done to the future of human-computer interaction, which is going to be dominated by multi-touch interfaces like the iPad's). I hope the iPad is successful because I want interacting with computers to suck less. Of course, I also want it to run multiple apps, have a camera for video-conferencing, use open standards and file formats, do handwriting recognition, and generally replace my laptop. These things will come, I think, but to become real, they need a device like the iPad to call home.

posted January 31, 2010 12:56 PM in Thinking Aloud

August 21, 2007

Sleight of mind

There's a nice article in the NYTimes right now that ostensibly discusses the science of magic, or rather, the science of consciousness and how magicians use their understanding of it to engineer false assumptions. Some of the usual suspects make appearances, including Teller (of Penn and Teller), Daniel Dennett (that rascally Tufts philosopher who has been in the news a lot lately over his support of atheism and criticism of religion) and Irene Pepperberg, whose African grey parrot Alex has graced this blog before (here and here). Interestingly, the article points out a potentially distant forerunner of Alex named Clever Hans, a horse who learned not arithmetic, but his trainer's unconscious suggestions about what the right answers were (which sounds like pretty intelligent behavior to me, honestly). Another of the usual suspects is the wonderful video that, with the proper instructions to viewers, conceals a person in a gorilla suit walking across the screen.

The article is a pleasant and short read, but what surprised me the most is that philosophers are, apparently, still arguing over whether consciousness is a purely physical phenomenon or has some additional immaterial component, called "qualia". Dennett, naturally, has the best line about this.

One evening out on the Strip, I spotted Daniel Dennett, the Tufts University philosopher, hurrying along the sidewalk across from the Mirage, which has its own tropical rain forest and volcano. The marquees were flashing and the air-conditioners roaring — Las Vegas stomping its carbon footprint with jackboots in the Nevada sand. I asked him if he was enjoying the qualia. “You really know how to hurt a guy,” he replied.

For years Dr. Dennett has argued that qualia, in the airy way they have been defined in philosophy, are illusory. In his book “Consciousness Explained,” he posed a thought experiment involving a wine-tasting machine. Pour a sample into the funnel and an array of electronic sensors would analyze the chemical content, refer to a database and finally type out its conclusion: “a flamboyant and velvety Pinot, though lacking in stamina.”

If the hardware and software could be made sophisticated enough, there would be no functional difference, Dr. Dennett suggested, between a human oenophile and the machine. So where inside the circuitry are the ineffable qualia?

This argument is just a slightly different version of the well-worn Chinese room thought experiment proposed by John Searle. Searle's goal was to undermine the idea that a machine like this could actually be equivalent to an oenophile (the so-called "strong" artificial intelligence position), but I think his argument actually shows that the whole notion of "intelligence" is highly problematic. In other words, one could argue that the wine-tasting machine as a whole (just like a human being as a whole) is "intelligent", but the distinction between intelligence and non-intelligence becomes less and less clear as one considers poorer and poorer versions of the machine, e.g., if we start mucking around with its internal program so that it makes mistakes with some regularity. The root of this debate, which I think has been well understood by critics of artificial intelligence for many years, is that humans are inherently egotistical beings, and we like feeling that we are special in some way that other beings (e.g., a horse or a parrot) are not. So, when pressed to define intelligence scientifically, we keep moving the goal posts to make sure that humans are always a little more special than everything else, animal or machine.

In the end, I have to side with Alan Turing, who basically said that intelligence is as intelligence does. I'm perfectly happy to dole out the term "intelligence" to all manner of things or creatures to various degrees. In fact, I'm pretty sure that we'll eventually (assuming that we don't kill ourselves off as a species in the meantime) construct an artificial intelligence that is, for all intents and purposes, more intelligent than a human, if only because it won't have the innumerable quirks and idiosyncrasies of human intelligence (e.g., optical illusions and our difficulty in predicting what will make us happiest) that exist because we are evolved beings rather than designed beings.

posted August 21, 2007 09:52 AM in Thinking Aloud

March 07, 2007

Making virtual worlds grow up

On February 20th, SFI cosponsored a business network topical meeting on "Synthetic Environments and Enterprise", or "Collective Intelligence in Synthetic Environments", in Santa Clara. Although these are fancy names, the idea is pretty simple. Online virtual worlds are now fairly complex environments, and several have millions of users who spend an average of 20-25 hours per week exploring, building, or otherwise inhabiting these places. My long-time friend Nick Yee has made a career out of studying the strange psychological effects and social behaviors stimulated by these virtual environments. Many businesses are only now realizing that games have something that could help enterprise, namely, that many games are fun, while much work is boring. This workshop was designed around exploring a single question: How can we use the interesting aspects of games to make work more interesting, engaging, productive, and otherwise less boring?

Leighton Read (who sits on SFI's board of trustees) was the general ringmaster for the day, and gave, I think, a persuasive pitch for how well-designed incentive structures can be used to produce useful stuff for businesses [1]. Thankfully, it seems that people interested in adapting game-like environments to other domains are realizing that military applications [2] are pretty limited. Some recent clever examples of games that produce something useful are the ESP game, in which you try to guess the text tags (a la flickr) that another player will give to a photo you both see; the Korean search giant Naver, in which you write answers to search queries and are scored on how much people like your result; and, Dance Dance Revolution, where you compete in virtual dance competitions by actually exercising. What these games have in common is that they break down the usual button-mashing paradigm by creating social or physical incentives for achievement.

One of the main themes of the workshop was exactly this kind of strategic incentive structuring [3], along with the dangling question of, How can we design useful incentive structures to facilitate hard work? In the context of games themselves (video or otherwise), this is a bit like asking, What makes a game interesting enough to spend time playing? A few possibilities are escapism / being someone else / a compelling story line (a la movies and books), a competitive aspect (as in card games), beautiful imagery (3d worlds), reflex and precision training (shooters and jumpers), socialization (most MMOs), outsmarting a computer (most games from the 80s and 90s, when AI was simplistic), and even creating something unique / of value (like crafting for a virtual economy). MMOs have many of these aspects, and perhaps that's what makes them so widely appealing; that is, it's not that MMOs get any one thing right about interesting incentive structures [5], but rather that they have something for everyone.

Second Life (SL), an MMO in which all content is user-created (or, increasingly, business-built), got a lot of lip-service at the workshop as being a panacea for enterprise and gaming [6]. I don't believe this hype for a moment; Second Life was designed to allow user-created objects, but not to be a platform for complex, large-scale, or high-bandwidth interactions. Yet, businesses (apparently) want to use SL as a way to interact with their clients and customers, as a platform for teleconferencing, broadcasting, advertising, etc., and as a virtual training ground for employees. Sure, all of these things are possible under the SL system, but none of them can work particularly well [7] because SL wasn't designed to facilitate them. At this point, SL is just a fad, and in my mind, there are only two things that SL does better than other, more mature technologies (like instant messaging, webcams, email, voice-over-IP, etc.). The first is to make it possible for account executives to interact with their customers in a more impromptu fashion: when they log into the virtual world, they can get pounced on by needy customers who previously would have had to go through layers of bureaucracy to get immediate attention. Of course, this kind of accessibility will disappear when there are hundreds or thousands of potential pouncers. The second is that it allows businesses to bring together people with common passions in a place where they can interact over them [8].

Neither of these things is particularly novel. The Web was the original way to bring like-minded individuals together over user-created content, and the Web 2.0 phenomenon allows more people to do this, in a more meaningful way than I think Second Life ever will. What the virtual-world aspect gives to this kind of collective organization is a more intuitive feeling of identification with a place and a group. That is, it takes a small mental flip to think of a username and a series of text statements as being an intentional, thinking person, whereas our monkey brains find it easier to think of a polygonal avatar with arms and legs as being a person. In my mind, this is the only reason to prefer virtual-world mediated interactions over other forms of online interaction, and at this point, aside from entertainment and novelty, those other mediums are much more compelling than the virtual worlds.

-----

[1] There's apparently even an annual conference dedicated to exploring these connections.

[2] Probably the best known (and reviled) example of this is America's Army, which is a glorified recruiting tool for the United States Army, complete with all the subtle propaganda you'd expect from such a thing: the US is the good guys, only the bad guys do bad things like torture, and military force is always the best solution. Of course, non-government-sponsored games aren't typically much better.

[3] I worry, however, that the emphasis on social incentives will backfire on many of these enterprise-oriented endeavors. That is, I've invested a lot of time and energy in building and maintaining my local social network, and I'd be pretty upset if a business tried to co-opt those resources for marketing or other purposes [4].

[4] In poking around online, I discovered the blog EmergenceMarketing, which focuses on precisely this kind of issue within the marketing community. I suspect that what's causing the pressure on the marketing community is not just increasing competition for people's limited attention (the information deluge, as I like to call it), but also the increasing ease with which people can organize and communicate on issues related to overtly selfish corporate practices; it's not pleasant to be treated like a rock from which money is to be squeezed.

[5] The fact that populations migrate from game to game (especially when a new one is released) suggests that most MMOs are far from perfect on many of these things, and that the novelty of a new system is enough to break the loyalty of many players to their investment in the old system.

[6] The hype around Second Life is big enough to produce parodies, and to obscure some significant problems with the system's infrastructure, design, scalability, etc. For instance, see Darren Barefoot's commentary on the Second Life hype.

[7] The best example of this was the attempt to telecast Tom Malone's talk (complete with PowerPoint slides) from MIT into the Cisco Second Life amphitheater and the Cisco conference room I was sitting in. The sound was about 20 seconds delayed, the slides were out of sync, and the talk was generally reduced to a mechanical reading of prepared remarks, for both worlds. Why was this technology used instead of a better-adapted one, like a webcam? Multicast is a much better-adapted technology for this kind of situation, and gets used for some extremely popular online events. In Second Life, the Cisco amphitheater could only host about a hundred or so users; multicast can reach tens of thousands.

[8] The example of this that I liked was Pontiac. Apparently, they first considered building a virtual version of their HQ in SL, but some brilliant person encouraged them instead to build a small showroom on a large SL island, and then let users create little pavilions nearby oriented around their passion for cars (not Pontiacs, just cars in general). The result is a large community of car enthusiasts who interact and hang out around the Pontiac compound. In a sense, this is just the kind of thing the Web has been facilitating for years, except that now there's a 3d component to the virtual community that many web sites have fostered. So, punchline: what gets businesses excited about SL is the thing that (eventually) got them excited about the Web; SL is the Web writ 3d, but without its inherent scalability, flexibility, decentralization, etc.

posted March 7, 2007 01:43 PM in Thinking Aloud

November 25, 2006

Unreasonable effectiveness (part 3)

A little more than twenty years after Hamming's essay, the computer scientist Bernard Chazelle penned an essay on the importance of the algorithm, in which he offers his own perspective on the unreasonable effectiveness of mathematics.

Mathematics shines in domains replete with symmetry, regularity, periodicity -- things often missing in the life and social sciences. Contrast a crystal structure (grist for algebra's mill) with the World Wide Web (cannon fodder for algorithms). No math formula will ever model whole biological organisms, economies, ecologies, or large, live networks.

Perhaps this, in fact, is what Hamming meant by saying that much of physics is logically deducible, that the symmetries, regularities, and periodicities of physical nature constrain it in such strong ways that mathematics alone (and not something more powerful) can accurately capture its structure. But, complex systems like organisms, economies and engineered systems don't have to, and certainly don't seem to, respect those constraints. Yet, even these things exhibit patterns and regularities that we can study.

Clearly, my perspective matches Chazelle's: algorithms offer a better path toward understanding complexity than the mathematics of physics. Or, to put it another way, complexity is inherently algorithmic. As an example of this kind of inherent complexity through algorithms, Chazelle cites Craig Reynolds' boids model. Boids is one of the canonical simulations of "artificial life"; in this particular simulation, a trio of simple algorithmic rules produces surprisingly realistic flocking / herding behavior when followed by a group of "autonomous" agents [1]. There are several other surprisingly effective algorithmic models of complex behavior (as I mentioned before, cellular automata are perhaps the most successful), but they all exist in isolation, as models of disconnected phenomena.
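For readers who haven't seen boids, a minimal sketch of the three rules (cohesion, alignment and separation) may help show how little machinery is involved. This is not Reynolds' original implementation: the rule weights, neighbor radii and speed cap are arbitrary values assumed here for illustration, and `velocity_order` is a hypothetical helper that measures how well the flock's headings agree (1 means every boid points the same way).

```python
import math
import random

# Illustrative parameters (assumptions, not Reynolds' values).
NEIGHBOR_RADIUS = 10.0    # boids closer than this influence each other
SEPARATION_RADIUS = 2.0   # boids closer than this push apart
W_COHESION, W_ALIGNMENT, W_SEPARATION = 0.01, 0.05, 0.1
MAX_SPEED = 2.0


class Boid:
    def __init__(self, x, y, vx, vy):
        self.x, self.y, self.vx, self.vy = x, y, vx, vy


def step(boids):
    """Advance every boid one time step using only the three local rules."""
    changes = []
    for b in boids:
        neighbors = [o for o in boids if o is not b
                     and math.hypot(o.x - b.x, o.y - b.y) < NEIGHBOR_RADIUS]
        dvx = dvy = 0.0
        if neighbors:
            n = len(neighbors)
            # Rule 1, cohesion: steer toward the neighbors' center of mass.
            dvx += W_COHESION * (sum(o.x for o in neighbors) / n - b.x)
            dvy += W_COHESION * (sum(o.y for o in neighbors) / n - b.y)
            # Rule 2, alignment: nudge velocity toward the neighbors' average.
            dvx += W_ALIGNMENT * (sum(o.vx for o in neighbors) / n - b.vx)
            dvy += W_ALIGNMENT * (sum(o.vy for o in neighbors) / n - b.vy)
            # Rule 3, separation: move away from neighbors that are too close.
            for o in neighbors:
                if math.hypot(o.x - b.x, o.y - b.y) < SEPARATION_RADIUS:
                    dvx += W_SEPARATION * (b.x - o.x)
                    dvy += W_SEPARATION * (b.y - o.y)
        changes.append((dvx, dvy))
    # Apply all changes together so every boid reacts to the same snapshot.
    for b, (dvx, dvy) in zip(boids, changes):
        b.vx += dvx
        b.vy += dvy
        speed = math.hypot(b.vx, b.vy)
        if speed > MAX_SPEED:  # cap the speed
            b.vx *= MAX_SPEED / speed
            b.vy *= MAX_SPEED / speed
        b.x += b.vx
        b.y += b.vy


def velocity_order(boids):
    """Length of the mean unit heading vector, in [0, 1]."""
    sx = sy = 0.0
    for b in boids:
        speed = math.hypot(b.vx, b.vy) or 1.0  # a stationary boid adds nothing
        sx += b.vx / speed
        sy += b.vy / speed
    return math.hypot(sx, sy) / len(boids)


if __name__ == "__main__":
    random.seed(0)
    flock = [Boid(random.uniform(0, 20), random.uniform(0, 20),
                  random.uniform(-1, 1), random.uniform(-1, 1))
             for _ in range(30)]
    print("order before:", round(velocity_order(flock), 3))
    for _ in range(200):
        step(flock)
    print("order after: ", round(velocity_order(flock), 3))
```

Nothing in the three rules mentions a flock; any alignment that shows up in the order parameter emerges from purely local interactions, and (as far as I know) we can't yet predict it from the rules without running them.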

So, I think one of the grand challenges for a science of complexity will be to develop a way to collect the results of these isolated models into a coherent framework. Just as we have powerful tools for working with a wide range of differential-equation models, we need similar tools for working with competitive agent-based models, evolutionary models, etc. That is, we would like to be able to write down the model in an abstract form, and then draw strong, testable conclusions about it, without simulating it. For example, imagine being able to write down Reynolds' three boids rules and deriving the observed flocking behavior before coding them up [2]. To me, that would prove that the algorithm is unreasonably effective at capturing complexity. Until then, it's just a dream.

Note: See also part 1 and part 2 of this series of posts.

[1] This citation is particularly amusing to me considering that most computer scientists seem to be completely unaware of the fields of complex systems and artificial life. This is, perhaps, attributable to computer science's roots in engineering and logic, rather than in studying the natural world.

[2] It's true that problems of intractability (P vs NP) and undecidability lurk behind these questions, but analogous questions lurk behind much of mathematics (thank you, Gödel). For most practical situations, mathematics has sidestepped these questions. For most practical situations (where here I'm thinking more of modeling the natural world), can we also sidestep them for algorithms?

posted November 25, 2006 01:19 PM in Things to Read

November 24, 2006

Unreasonable effectiveness (part 2)

In keeping with the theme [1], twenty years after Wigner's essay on The Unreasonable Effectiveness of Mathematics in the Natural Sciences, Richard Hamming (who has graced this blog previously) wrote a piece by the same name for The American Mathematical Monthly (87 (2), 1980). Hamming takes issue with Wigner's essay, suggesting that the physicist has dodged the central question of why mathematics has been so effective. In Hamming's piece, he offers a few new thoughts on the matter: primarily, he suggests, mathematics has been successful in physics because much of it is logically deducible, and that we often change mathematics (i.e., we change our assumptions or our framework) to fit the reality we wish to describe. His conclusion, however, puts the matter best.

From all of this I am forced to conclude both that mathematics is unreasonably effective and that all of the explanations I have given when added together simply are not enough to explain what I set out to account for. I think that we -- meaning you, mainly -- must continue to try to explain why the logical side of science -- meaning mathematics, mainly -- is the proper tool for exploring the universe as we perceive it at present. I suspect that my explanations are hardly as good as those of the early Greeks, who said for the material side of the question that the nature of the universe is earth, fire, water, and air. The logical side of the nature of the universe requires further exploration.

Hamming, it seems, has dodged the question as well. But, Hamming's point that we have changed mathematics to suit our needs is important. Let's return to the idea that computer science and the algorithm offer a path toward capturing the regularity of complex systems, e.g., social and biological ones. Historically, we've demanded that algorithms yield guarantees on their results, and that they don't take too long to return them. For example, we want to know that our sorting algorithm will actually sort a list of numbers, and that it will do so in the time we allow. Essentially, our formalisms and methods of analysis in computer science have been driven by engineering needs, and our entire field reflects that bias.
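To make those two engineering guarantees concrete, here is a sketch using merge sort, an illustrative choice of standard algorithm rather than anything in Hamming's essay. It returns a sorted copy along with a count of element comparisons, so both properties can be checked: the output is in order, and the number of comparisons never exceeds the textbook worst-case bound of n⌈log₂ n⌉. The helper `comparison_bound` is a hypothetical name introduced here.

```python
def merge_sort(items):
    """Return (sorted copy of items, number of element comparisons made)."""
    if len(items) <= 1:
        return list(items), 0
    mid = len(items) // 2
    left, c_left = merge_sort(items[:mid])
    right, c_right = merge_sort(items[mid:])
    merged, comparisons = [], c_left + c_right
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One side is exhausted; the remainder of the other is already in order.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


def comparison_bound(n):
    """Worst-case guarantee for merge sort: at most n * ceil(log2 n) comparisons."""
    # For n >= 1, (n - 1).bit_length() equals ceil(log2 n) exactly.
    return 0 if n <= 1 else n * (n - 1).bit_length()


if __name__ == "__main__":
    data = [5, 3, 8, 1, 9, 2]
    result, comps = merge_sort(data)
    print(result, comps, comparison_bound(len(data)))
```

Both checks are the kind of guarantee the field is built around; note that neither says anything about what the sorted list *means*, which is the gap the rest of this post is worried about.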

But, if we want to use algorithms to accurately model complex systems, it stands to reason that we should orient ourselves toward constraints that are more suitable for the kinds of behaviors those systems exhibit. In mathematics, it's relatively easy to write down an intractable system of equations; similarly, it's easy to write down an algorithm whose behavior is impossible to predict. The trick, it seems, will be to develop simple algorithmic formalisms for modeling complex systems that we can analyze and understand in much the same way that we do for mathematical equations.

I don't believe that one set of formalisms will be suitable for all complex systems, but perhaps biological systems are consistent enough that we could use one set for them, and perhaps another for social systems. For instance, biological systems are all driven by metabolic needs, and by a need to maintain structure in the face of degradation. Similarly, social systems are driven by, at least, competitive forces and asymmetries in knowledge. These are needs that things like sorting algorithms have no concept of.

Note: See also part 1 and part 3 of this series of posts.

[1] A common theme, it seems. What topic wouldn't be complete without its own wikipedia article?

posted November 24, 2006 12:21 PM in Things to Read

November 23, 2006

Unreasonable effectiveness (part 1)

Einstein apparently once remarked that "The most incomprehensible thing about the universe is that it is comprehensible." In a famous paper in Communications on Pure and Applied Mathematics (13 (1), 1960), the physicist Eugene Wigner (Nobel in 1963 for his work on the theory of the atomic nucleus and elementary particles) discussed "The Unreasonable Effectiveness of Mathematics in the Natural Sciences". The essay is not too long (for an academic piece), but I think this example of the application of mathematics gives the best taste of what Wigner is trying to point out.

The second example is that of ordinary, elementary quantum mechanics. This originated when Max Born noticed that some rules of computation, given by Heisenberg, were formally identical with the rules of computation with matrices, established a long time before by mathematicians. Born, Jordan, and Heisenberg then proposed to replace by matrices the position and momentum variables of the equations of classical mechanics. They applied the rules of matrix mechanics to a few highly idealized problems and the results were quite satisfactory.

However, there was, at that time, no rational evidence that their matrix mechanics would prove correct under more realistic conditions. Indeed, they say "if the mechanics as here proposed should already be correct in its essential traits." As a matter of fact, the first application of their mechanics to a realistic problem, that of the hydrogen atom, was given several months later, by Pauli. This application gave results in agreement with experience. This was satisfactory but still understandable because Heisenberg's rules of calculation were abstracted from problems which included the old theory of the hydrogen atom.

The miracle occurred only when matrix mechanics, or a mathematically equivalent theory, was applied to problems for which Heisenberg's calculating rules were meaningless. Heisenberg's rules presupposed that the classical equations of motion had solutions with certain periodicity properties; and the equations of motion of the two electrons of the helium atom, or of the even greater number of electrons of heavier atoms, simply do not have these properties, so that Heisenberg's rules cannot be applied to these cases.

Nevertheless, the calculation of the lowest energy level of helium, as carried out a few months ago by Kinoshita at Cornell and by Bazley at the Bureau of Standards, agrees with the experimental data within the accuracy of the observations, which is one part in ten million. Surely in this case we "got something out" of the equations that we did not put in.

As someone (apparently) involved in the construction of "a physics of complex systems", I have to wonder whether mathematics is still unreasonably effective at capturing these kinds of inherent patterns in nature. Formally, the kind of mathematics that physics has historically used is equivalent to a memoryless computational machine (if there is some kind of memory, it has to be explicitly encoded into the current state); but, the algorithm is a more general form of computation that can express ideas that are significantly more complex, at least partially because it inherently utilizes history. This suggests to me that a physics of complex systems will be intimately connected to the mechanics of computation itself, and that select tools from computer science may ultimately let us express the structure and behavior of complex, e.g., social and biological, systems more effectively than the mathematics used by physics.

One difficulty in this endeavor, of course, is that the mathematics of physics is already well-developed, while the algorithms of complex systems are not. There have been some wonderful successes with algorithms already, e.g., cellular automata, but it seems to me that there's a significant amount of cultural inertia here, perhaps at least partially because there are so many more physicists than computer scientists working on complex systems.
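Cellular automata make the point about algorithmic models nicely, because the whole model fits in a few lines. The sketch below implements a one-dimensional elementary cellular automaton using Wolfram's standard rule numbering (0 to 255); the periodic boundary, lattice width and the choice of rule 110 in the demo are assumptions for illustration.

```python
def ca_step(cells, rule):
    """Apply an elementary CA rule (0-255) once, with periodic boundaries."""
    n = len(cells)
    out = []
    for i in range(n):
        # cells[i - 1] wraps to the last cell when i == 0.
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        # Read the three-cell neighborhood as a binary number 0..7;
        # that bit of the rule number gives the cell's next state.
        index = (left << 2) | (center << 1) | right
        out.append((rule >> index) & 1)
    return out


def run(rule, width=64, steps=32):
    """Start from a single live cell and return the list of successive rows."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = ca_step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run(110):
        print("".join("#" if c else "." for c in row))
```

The rule is an eight-entry lookup table, yet rule 110 is known to be capable of universal computation, which is a compact illustration of how simple algorithmic descriptions can outrun our ability to analyze them.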

Note: See also part 2 and part 3 of this series of posts.

posted November 23, 2006 11:31 AM in Things to Read

September 08, 2006

Academic publishing, tomorrow

Imagine a world where academic publishing is handled purely by academics, rather than ruthless, greedy corporate entities. [1] Imagine a world where hiring decisions were made on the technical merit of your work, rather than the coterie of journals associated with your c.v. Imagine a world where papers are living documents, actively discussed and modified (wikified?) by the relevant community of interested intellectuals. This, and a bit more, is the future, according to Adam Rogers, a senior associate editor at "Wired" magazine. (tip to The Geomblog)

The gist of Rogers' argument is that the Web will change academic publishing into this utopian paradise of open information. I seriously doubt things will be like he predicts, but he does raise some excellent points about how the Web is facilitating new ways of communicating technical results. For instance, he mentions a couple of ongoing experiments in this area:

In other quarters, traditional peer review has already been abandoned. Physicists and mathematicians today mainly communicate via a Web site called arXiv. (The X is supposed to be the Greek letter chi; it's pronounced "archive." If you were a physicist, you'd find that hilarious.) Since 1991, arXiv has been allowing researchers to post prepublication papers for their colleagues to read. The online journal Biology Direct publishes any article for which the author can find three members of its editorial board to write reviews. (The journal also posts the reviews – author names attached.) And when PLoS ONE launches later this year, the papers on its site will have been evaluated only for technical merit – do the work right and acceptance is guaranteed.

It's a bit hasty to claim that peer review has been "abandoned", but the arxiv has certainly almost completely supplanted some journals in their role of disseminating new research [2]. This is probably most true for physicists, since they're the ones who started the arxiv; other fields, like biology, don't have a pre-print archive (that I know of), but they seem to be moving toward open access journals for the same purpose. In computer science, we already have something like this, since the primary venue for publication is conferences (which are peer reviewed, unlike conferences in just about every other discipline), whose papers are typically picked up by CiteSeer.

It seems that a lot of people are thinking or talking about open access this week. The Chronicle of Higher Education has a piece on the growing momentum toward open access journals. Its main message is the new letter, signed by 53 presidents of liberal arts colleges (including my own Haverford College), in support of the bill currently in Congress (although unlikely to pass this year) that would mandate that all federally funded research eventually be made publicly available. The comments from the publishing industry are unsurprisingly self-interested and uninspiring, but they also betray a great deal of arrogance and greed. I wholeheartedly support more open access to articles: publicly funded research should be free to the public, just like public roads are free for everyone to use.

But, the bigger question here is, Could any of these various alternatives to the pay-for-access model really replace journals? I'm less sure of the future here, as journals also serve a couple of other roles that things like the arxiv were never intended to fill. That is, journals run the peer review process, which, at its best, prevents erroneous research from getting a stamp of "community approval" and thereby distracting researchers for a while as they a) figure out that it's mistaken, and b) write new papers to correct it. This is why, I think, there is a lot of crap on the arxiv. A lot of authors self-police quite well, and end up submitting nearly error-free and highly competent work to journals, but the error-checking process is crucial, I think. Sure, peer review does miss a lot of errors (and frauds), but, to paraphrase Mason Porter paraphrasing Churchill on democracy, peer review is the worst form of quality control for research, except for all the others. The real point here is that until something comes along that can replace journals as the "community approved" body of work, I doubt they'll disappear. I do hope, though, that they'll morph into more benign organizations. PNAS and PLoS are excellent role models for the future, I think. And, they also happen to publish really great research.

Another point Rogers makes about the changes the Web is encouraging is a social one.

[...] Today’s undergrads have ... never functioned without IM and Wikipedia and arXiv, and they’re going to demand different kinds of review for different kinds of papers.

It's certainly true that I conduct my research very differently because I have access to Wikipedia, arxiv, email, etc. In fact, I would say that the real change these technologies will have on the world of research will be to decentralize it a little. It's now much easier than it was 20 years ago to be a productive, contributing member of a research community without being down the hall from your colleagues and collaborators. These electronic modes of communication just make it easier for information to flow freely, and I think that ultimately has a very positive effect on research itself. Taking that role away from the journals suggests that they will become more about getting that stamp of approval than anything else. With its increased relative importance, who knows, perhaps journals will do a better job at running the peer review process (they could certainly use the Web, etc. to do a better job at picking reviewers...).

(For some more thoughts on this, see a recent discussion of mine with Mason Porter.)

Update Sept. 9: Suresh points to a recent post of his own about the arxiv and the issue of time-stamping.

[1] Actually, computer science conferences, impressively, are a reasonable approximation to this, although they have their own fair share of issues.

[2] A side effect of the arXiv is that it presents tricky issues regarding citation, timing and proper attribution. For instance, if a research article becomes a "living" document, proper citation becomes rather problematic: which version of an article do you cite? (Surely not all of them!) And, if you revise your article after someone posts a derivative work, are you obligated to cite it in your revision?

posted September 8, 2006 05:23 PM in Simply Academic

July 26, 2006

Models, errors and the methods of science.

A recent posting on the arXiv prompts me to write down some musings about the differences between science and non-science.

On the Nature of Science by B.K. Jennings

A 21st century view of the nature of science is presented. It attempts to show how a consistent description of science and scientific progress can be given. Science advances through a sequence of models with progressively greater predictive power. The philosophical and metaphysical implications of the models change in unpredictable ways as the predictive power increases. The view of science arrived at is one based on instrumentalism. Philosophical realism can only be recovered by a subtle use of Occam's razor. Error control is seen to be essential to scientific progress. The nature of the difference between science and religion is explored.

This can be summarized even more succinctly by George Box's famous dictum, "all models are wrong but some models are useful," with the addendum that this recognition is what makes science different from religion (or other non-scientific endeavors), and that sorting the useful from the useless is what drives science forward.

In addition to being a relatively succinct introduction to the basic terrain of modern philosophy of science, Jennings also describes two common critiques of science. The first is the God of the Gaps idea: basically, science explains how nature works, and everything left unexplained is the domain of God. Obviously, the problem is that those gaps have a pesky tendency to disappear over time, taking that bit of God with them. For Jennings, this idea is just a special case of the more general "Proof by Lack of Imagination" critique, which is summarized as "I cannot imagine how this can happen naturally, therefore it does not, or God must have done it." As with the God of the Gaps idea, more imaginative people tend to come along (or have come along before) who can imagine how it could happen naturally (e.g., continental drift). Among physicists who favor this kind of argument, things like the precise values of the fundamental constants are grist for the mill, but can we really presume that we'll never be able to explain them naturally?

Evolution is, as usual, one of the best examples of this kind of attack. For instance, almost all of the arguments currently put forth by creationists are just a rehashing of arguments made in the mid-to-late 1800s by religious scientists and officials. Indeed, Darwin's biggest critic was the politically powerful naturalist Sir Richard Owen, who objected to evolution because he preferred the idea that God used archetypal forms to derive species. The proof, of course, was in the overwhelming weight of evidence in favor of evolution and, in the end, in Darwin being much more clever than Owen.

To scientists, for whom this is bread and butter, all this may seem quite droll. But I think non-scientists experience a strong degree of cognitive dissonance when faced with such evidential claims. That is, what distinguishes scientists from non-scientists is our conviction that knowledge about the nature of the world is purely evidential, produced only by careful observations, models and the control of our errors. For the non-scientist, this works well enough for the knowledge required to see to the basics of life (eating, moving, etc.), but it conflicts with (and often loses out to) the knowledge given to us by social authorities. In the West before Galileo, the authorities were the Church or Aristotle; today, Aristotle has been replaced by talk radio, television and cranks pretending to be scientists. I suspect that this conflicted relationship with knowledge might explain several problems in the lay public's relationship with science. Let me connect this with my current reading material to make the point clearer.

Deborah Mayo's excellent (and, I fear, vastly under-read) Error and the Growth of Experimental Knowledge is a dense and extremely thorough exposition of a modern philosophy of science, based on the evidential model I described above. As she reinterprets Kuhn's analysis of Popper, she implicitly points to an explanation for why science so often clashes with non-science, and why these clashes often leave scientists shaking their heads in confusion. Quoting Kuhn on why astrology is not a science, she writes:

The practitioners of astrology, Kuhn notes, "like practitioners of philosophy and some social sciences [AC: I argue also many humanities]... belonged to a variety of different schools ... [between which] the debates ordinarily revolved about the implausibility of the particular theory employed by one or another school. Failures of individual predictions played very little role." Practitioners were happy to criticize the basic commitments of competing astrological schools, Kuhn tells us; rival schools were constantly having their basic presuppositions challenged. What they lacked was that very special kind of criticism that allows genuine learning - the kind where a failed prediction can be pinned on a specific hypothesis. Their criticism was not constructive: a failure did not genuinely indicate a specific improvement, adjustment or falsification.

That is, criticism that does not focus on the evidential basis of theories is what non-sciences engage in. In Kuhn's language, this is called "critical discourse" and is what distinguishes non-science from science. In a sense, critical discourse is a form of logical jousting, in which you can only disparage the assumptions of your opponent (thus undercutting their entire theory) while championing your own. Marshaling anecdotal evidence in support of your assumptions is to pseudo-science, I think, what stereotyping is to racism.

Since critical discourse is the norm outside of science, is it any wonder that non-scientists, attempting to resolve the cognitive dissonance between authoritative knowledge and evidential knowledge, resort to the only form of criticism they understand? This makes me extremely depressed about the current state of science education in this country, and about the possibility of politicians ever learning from their mistakes.

posted July 26, 2006 11:26 PM in Scientifically Speaking

July 17, 2006

Uncertainty about probability

In the past few days, I've been reading about different interpretations of probability, i.e., the frequentist and Bayesian approaches (for a primer, try here). This has, of course, led me back to my roots in physics, since both quantum mechanics (QM) and statistical mechanics rely on probabilities to describe the behavior of nature. Amusingly, I must not have been paying much attention while I was taking QM at Haverford: Niels Bohr once said, "If quantum mechanics hasn't profoundly shocked you, you haven't understood it yet," and back then I was neither shocked nor confused by things like the uncertainty principle, quantum indeterminacy or Bell's Theorem. Today, however, it's a different story entirely.

John Baez has a nice summary and selection of newsgroup posts that discuss frequentism versus Bayesianism in the context of theoretical physics. This, in turn, led me to another physicist's perspective on the matter. The late Ed Jaynes wrote an entire book on probability from a physics perspective, but I most enjoyed his discussion of the physics of a "random experiment", in which he notes that quantum physics differs sharply from macroscopic sciences like biology in its use of probabilities. I'll just quote Jaynes on this point, since he describes it so eloquently:

In biology or medicine, if we note that an effect E (for example, muscle contraction) does not occur unless a condition C (nerve impulse) is present, it seems natural to infer that C is a necessary causative agent of E... But suppose that condition C does not always lead to effect E; what further inferences should a scientist draw? At this point the reasoning formats of biology and quantum theory diverge sharply.

... Consider, for example, the photoelectric effect (we shine a light on a metal surface and find that electrons are ejected from it). The experimental fact is that the electrons do not appear unless light is present. So light must be a causative factor. But light does not always produce ejected electrons... Why then do we not draw the obvious inference, that in addition to the light there must be a second causative factor...?

... What is done in quantum theory is just the opposite; when no cause is apparent, one simply postulates that no cause exists; ergo, the laws of physics are indeterministic and can be expressed only in probability form.

... In classical statistical mechanics, probability distributions represent our ignorance of the true microscopic coordinates - ignorance that was avoidable in principle but unavoidable in practice, but which did not prevent us from predicting reproducible phenomena, just because those phenomena are independent of the microscopic details.

In current quantum theory, probabilities express the ignorance due to our failure to search for the real causes of physical phenomena. This may be unavoidable in practice, but in our present state of knowledge we do not know whether it is unavoidable in principle.

Jaynes goes on to describe how current quantum physics may simply be in a rough patch, where our experimental methods are too inadequate to isolate the physical causes of the apparently indeterministic behavior of our physical systems. But I don't quite understand how this idea squares with Bell's Theorem, which basically laid local hidden-variable theories, and with them local realism, to rest. It seems to me that Jaynes and Baez, in fact, invoke similar interpretations of all probabilities, i.e., that they represent only our (human) model of our (human) ignorance, which can be about the initial conditions of the system in question, the causal rules that govern how it evolves, or both.

It would be unfair to those statistical physicists who work on complex networks to say that they share the no-causal-factor assumption that their quantum physics colleagues may accept. In statistical physics, as Jaynes points out, statistical methodology is forced on us by our measurement limitations. Similarly, in complex networks, it's impractical to know the entire developmental history of the Internet, the evolutionary history of every species in a food web, etc. But unlike statistical physics, in which experiments are highly repeatable, each complex network is largely unique, which makes networks more like biological and climatological systems, where there is only one instance to study. To make matters worse, complex networks are also quite small, typically having between 10^2 and 10^6 parts; in contrast, most systems that concern statistical physics have 10^22 or more. For such large systems, it's probably not terribly wrong to take a frequentist perspective and assume that relative frequencies behave like probabilities. But when you have only a few thousand or a few million parts, such claims seem less tenable, since it's hard to argue that you're close to asymptotic behavior. Bayesianism, being better able to handle data-poor situations in which many alternative hypotheses are plausible, seems to offer the right way to deal with such problems. But, perhaps owing to the history of the field, few people in network science seem to use it.
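To put rough numbers on the "close to asymptotic behavior" point, here is a minimal sketch under the simplest possible assumption: each of n parts independently has some property with probability p, and we estimate p by the observed relative frequency. The standard error of that frequency is sqrt(p(1 - p)/n), so system size alone sets how far a frequency can stray from the underlying probability. The independence assumption, the value p = 0.5 and the function name are illustrative choices, not claims about any real network.

```python
import math


def relative_frequency_stderr(p: float, n: float) -> float:
    """Standard error of the observed fraction when each of n independent parts
    has the property with probability p (a binomial model, assumed for illustration)."""
    return math.sqrt(p * (1.0 - p) / n)


# System sizes mentioned in the text: small and large networks vs. a macroscopic sample.
for n in (1e2, 1e6, 1e22):
    err = relative_frequency_stderr(0.5, n)
    print(f"n = {n:.0e}: standard error of the relative frequency = {err:.1e}")
```

At n = 10^2 the frequency is typically off by about 5 percentage points; at n = 10^22 the typical deviation is about 5 × 10^-12, which is why treating frequencies as probabilities is harmless in the macroscopic case.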

For my own part, I find myself being slowly seduced by the Bayesians' siren call of mathematical rigor and the notion of principled approaches to these complicated problems. Yet there are three things about the Bayesian approach that make me a little uncomfortable. First, given that with enough data it doesn't matter what your initial assumption about the plausibility of each hypothesis is (i.e., your "prior"), shouldn't Bayesian and frequentist arguments lead to the same inferences in the limit of (or simply in) a very large set of identical experiments? If so, then it makes sense that statistical physicists have used frequentist approaches for years with great success. Second, when we are far from that limit, doesn't the freedom to choose an arbitrary prior amount to a kind of scientific relativism? Perhaps not, since the manner in which you update your prior given new evidence is what distinguishes the approach from certain crackpot theories.
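The first worry can be made concrete with the textbook Beta-Binomial model. This is a minimal sketch under assumptions of my own choosing (a coin-like process with k successes in n trials, and two deliberately opposed Beta priors), not an example from Jaynes or Baez. With a Beta(a, b) prior, the posterior mean is (a + k)/(a + b + n), while the frequentist estimate is simply k/n, so the prior's influence shrinks roughly like 1/n.

```python
def posterior_mean(a: float, b: float, k: int, n: int) -> float:
    """Posterior mean of a success probability under a Beta(a, b) prior
    after observing k successes in n trials (conjugate Beta-Binomial update)."""
    return (a + k) / (a + b + n)


# Two strongly opposed priors: one expects successes to be rare, the other common.
skeptic = (1.0, 20.0)
enthusiast = (20.0, 1.0)

for n in (10, 100, 10_000, 1_000_000):
    k = 3 * n // 10  # illustrative data: exactly 30% of trials succeed
    freq = k / n  # frequentist estimate: the observed relative frequency
    m_skeptic = posterior_mean(*skeptic, k, n)
    m_enthusiast = posterior_mean(*enthusiast, k, n)
    print(f"n={n:>9}: frequentist={freq:.4f}  skeptic={m_skeptic:.4f}  enthusiast={m_enthusiast:.4f}")
```

With ten trials the two posterior means sit far apart (4/31 ≈ 0.13 and 23/31 ≈ 0.74), while by a million trials they agree with the observed frequency to within about 2 × 10^-5. The small-n rows are exactly the regime of the second worry, where the choice of prior still dominates.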

Finally, choosing an initial prior seems highly arbitrary, since one can always recurse a level and ask what prior on priors you might take. Here, I like the idea of a uniform prior (i.e., everything is equally plausible) and of using the principle of maximum entropy (MaxEnt), which generalizes Laplace's principle of indifference. Entropy is a nice way to connect this approach with certain biases in physics, and it may say something very deep about our incomplete description of nature at the quantum level. But it's not entirely clear to me (or, apparently, to others: see here and here) how to use maximum entropy when previous knowledge constrains our estimates of the future. Indeed, one of the main things I still don't understand is how, if we model the absorption of knowledge as a sequential process, to update our understanding of the world rigorously while guaranteeing that the order in which we see the data doesn't matter.
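The link between MaxEnt and a uniform prior shows up in a small example, assuming the standard die setup often associated with Jaynes: find the distribution over faces 1 to 6 with the largest entropy subject to a specified mean. The MaxEnt solution has the exponential form p_i ∝ exp(-λ i). When the required mean is the unconstrained value 3.5, λ is zero and the answer is exactly the uniform (indifference) distribution; any other mean tilts it. The bisection search for λ and the function names are just one simple illustrative choice.

```python
import math

FACES = range(1, 7)


def maxent_die(target_mean: float, tol: float = 1e-12) -> list[float]:
    """Maximum-entropy distribution over die faces 1..6 with a given mean.

    The solution has the Gibbs form p_i ∝ exp(-lam * i). We find lam by bisection,
    using the fact that the mean decreases monotonically as lam increases.
    """
    if not 1.0 < target_mean < 6.0:
        raise ValueError("target mean must lie strictly between 1 and 6")

    def dist(lam: float) -> list[float]:
        weights = [math.exp(-lam * i) for i in FACES]
        z = sum(weights)  # normalizing constant (partition function)
        return [w / z for w in weights]

    def mean(lam: float) -> float:
        return sum(i * p for i, p in zip(FACES, dist(lam)))

    lo, hi = -50.0, 50.0  # mean(lo) is close to 6, mean(hi) is close to 1
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) > target_mean:
            lo = mid  # mean too high: lam must increase
        else:
            hi = mid
    return dist(0.5 * (lo + hi))


def entropy(p: list[float]) -> float:
    """Shannon entropy in nats."""
    return -sum(x * math.log(x) for x in p if x > 0)


uniform = maxent_die(3.5)
tilted = maxent_die(4.5)
print([round(x, 4) for x in uniform])
print([round(x, 4) for x in tilted])
print(f"entropy: uniform={entropy(uniform):.4f}, tilted={entropy(tilted):.4f}")
```

Because the uniform distribution's entropy, log 6, is the largest possible on six outcomes, imposing a different mean can only lower it; in this sense, MaxEnt is indifference plus whatever constraints prior knowledge supplies.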

Update July 17: Cosma points out that Jaynes's Bayesian formulation of statistical mechanics leads to unphysical implications like a backwards arrow of time. Although it's comforting to know that statistical mechanics cannot be reduced to mere Bayesian crank-turning, it doesn't resolve my confusion about just what it means that the quantum state of matter is best expressed probabilistically! His article also reminds me that there are good empirical reasons to use a frequentist approach, reasons that rest on Mayo's arguments and that should be familiar to any scientist who has actually worked with data in the lab. Interested readers should refer to Cosma's review of Mayo's Error, in which he summarizes her critique of Bayesianism.

posted July 17, 2006 03:30 PM in Scientifically Speaking

March 20, 2006

It's a Monty Hall universe, after all

Attention conservation notice: This mini-essay was written after one of my family members asked me what I thought of the idea of predestination. What follows is a rephrasing of my response, along with a few additional thoughts that connect this topic to evolutionary game theory.